Seven examples of AI-assisted propaganda in politics

Key Points

- As the output of AI models has become more realistic, AI-generated media is creeping into everyday politics, as these examples show.

- Examples of the use of AI for political manipulation include the election campaign of a mayoral candidate in Toronto and the use of AI-generated images by the New Zealand National Party.

- AI is also being used for political manipulation beyond images, such as the alleged cloning of a mayoral candidate's voice in Chicago.

Update –

- Added Microsoft's accusation of election interference from China using AI imagery.

A new era of AI-driven "fake news" has begun, and its output is not always as harmless as images of the Pope suddenly dressed in surprisingly fashionable clothes. AI-generated images can also cause serious confusion, as with the images purporting to show the arrest of Donald Trump or the fake image of a fire near the Pentagon.

In this article, we collect examples of AI being used for political manipulation. This article will be updated regularly.

Toronto mayoral candidate Anthony Furey

Toronto is about to elect a new mayor. Anthony Furey, who says he has worked in journalism and broadcasting for 15 years, has recognized the potential of AI image generators and is using them to illustrate parts of his campaign. One of his core themes is ridding Toronto's streets of homeless people.

For example, one image from his campaign shows a Toronto street with people camping out without shelter. Another image shows a man and a woman, but is quickly revealed to be AI-generated: the woman has three arms.

The source of the various images in the digital brochure is unclear. The image of the three-armed woman has since been replaced by a less conspicuous one, also generated by AI.

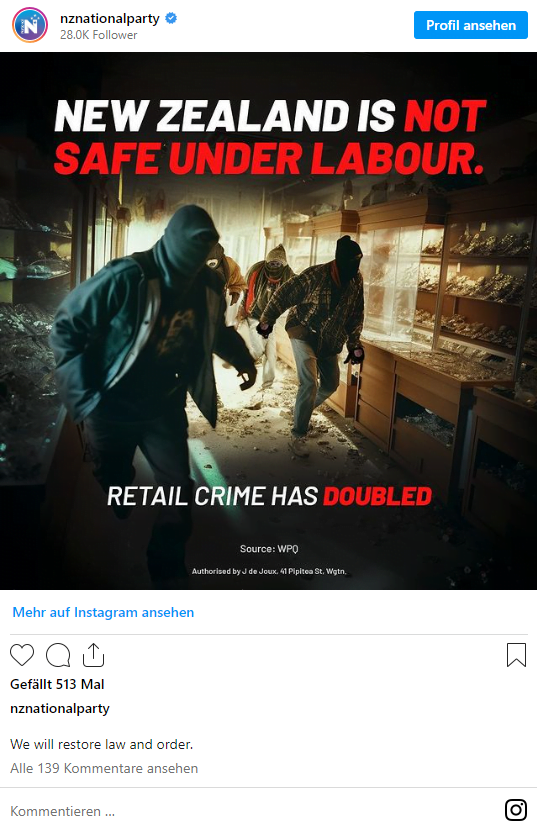

New Zealand National Party

The image used on Instagram by the conservative New Zealand National Party looks more realistic. "New Zealand is not safe under Labour," reads the caption on a picture of a faceless burglar. There is no reference to the origin of the image. Voters' reactions in the comments are mixed, with some condemning the "Trump-like" policy and others welcoming the use of modern technology.

Chicago mayoral candidate

The use of AI for political manipulation is not limited to image generation. According to the New York Times, a candidate for mayor in Chicago complained that a seemingly legitimate media outlet cloned his voice on Twitter. In the resulting clip, he says, he was made to appear to support police violence. Whether the clip was in fact a fake cannot be verified.

Pentagon

In May 2023, images of a burning building, supposedly close to the Pentagon, circulated on Twitter from accounts that at first seemed trustworthy because they were marked with a blue checkmark.

However, since Elon Musk took over Twitter, the checkmark is no longer a reliable sign of legitimacy, because anyone can buy one for eight dollars a month. The alleged attack on the Pentagon caused a stir and even briefly sent the Dow Jones plummeting.

Fake AI image of Pentagon exploding goes viral on Twitter https://t.co/xUcUwmZR3u

— The Independent (@Independent) May 23, 2023

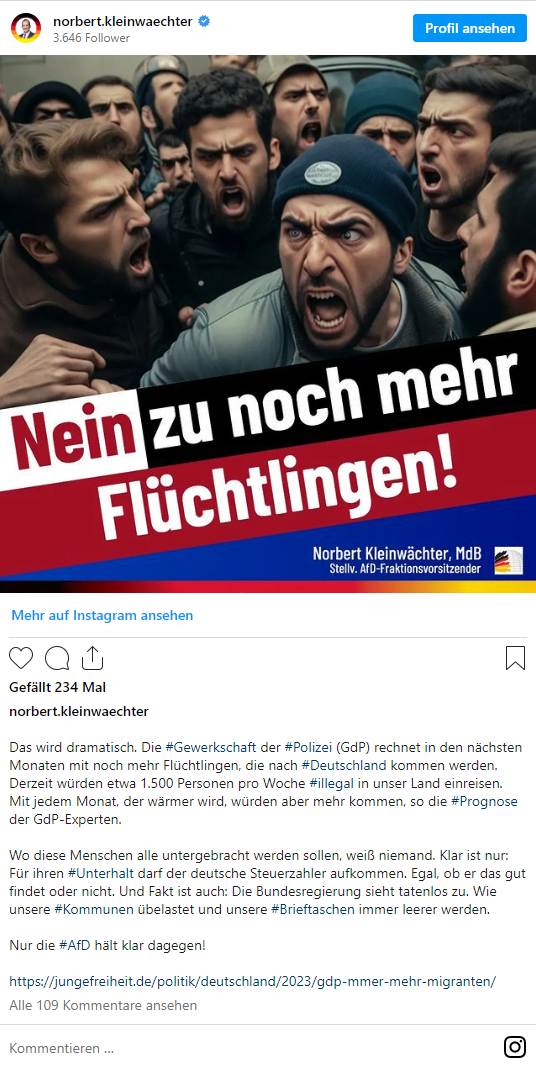

Alternative für Deutschland (AfD)

In March 2023, AfD deputy parliamentary group leader Norbert Kleinwächter drew attention to himself with an AI image. "No to even more refugees," his Instagram post reads, showing angry people with their mouths agape. It wasn't the last AI image on Kleinwächter's channel; he also likes to caricature political opponents using Midjourney.

Arrest of Donald Trump

AI can create images in seconds that look like real photos at first glance. This allows malicious users to seize on breaking news and quickly spread visualizations.

For example, while the public was discussing the possible arrest of former U.S. President Donald Trump, partially photorealistic images purporting to show it appeared on social media.

The wave was triggered by Eliot Higgins, founder of the Dutch research organization Bellingcat, who visualized his fantasy of Trump's arrest with Midjourney and shared the results on Twitter.

Allegations of Chinese election influence through AI images

According to a Microsoft report, the company's researchers have identified a network of fake Chinese social media accounts that appear to be using AI to try to influence U.S. voters. The accounts have allegedly been posting politically charged content in English since at least March 2023, pretending to be U.S. citizens.

One example, according to Microsoft, is a "very likely" AI-generated image of the Statue of Liberty with an assault rifle and the text "Democracy & Liberties: Everything is being thrown away." The text, at least, must have been added manually.

According to Microsoft, the activities of these accounts are similar to a previously known Chinese information operation that the U.S. Department of Justice has attributed to an elite unit of China's Ministry of Public Security. However, the report is short on details.

The accounts attempted to appear American by claiming to be in the U.S., posting American political slogans, and using hashtags on domestic issues, Microsoft wrote. The company sees the campaign as a threat to the integrity of the 2024 U.S. elections. China has rejected the allegations as biased and malicious.

Meanwhile, Google has prepared for AI-generated content in the U.S. election campaign with a new advertising policy. It requires "synthetic content" to be prominently labeled.

Are AI image models allowed in political campaigns?

Midjourney, the AI image model that is currently considered the most powerful of its kind and that has come closer to photorealism in recent versions, explicitly forbids the use of its images for political purposes in its terms of use.

However, if Midjourney's operators become aware of such cases, they can do little beyond moderating prompts and blocking the accounts involved. Even that kind of enforcement is not possible with the open-source Stable Diffusion model, which is slowly reaching a similar level of quality with SDXL.