GPT-4 could be the great skill leveler for consultants, study shows

The consulting industry could be disrupted by large language models such as GPT-4, according to a new study.

The study was conducted by the Boston Consulting Group together with researchers from Harvard Business School, MIT Sloan, Warwick Business School, and the Wharton School.

It analyzed the work of 758 Boston Consulting Group consultants, who were randomly assigned to one of two conditions: some were allowed to use GPT-4, while others worked without AI. The consultants using AI had access to the generally available GPT-4 via an API, without any special prompting or fine-tuning.

The great skill leveler

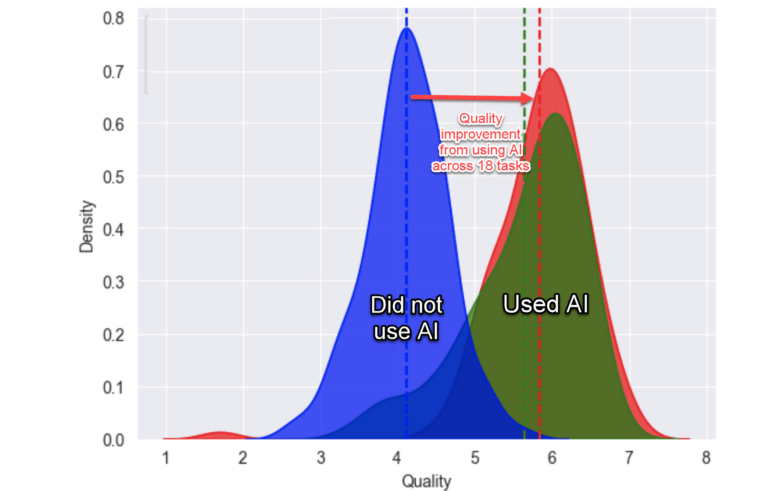

The consultants worked on 18 realistic consulting tasks for a fictitious shoe company, such as writing press releases, conducting market analysis, developing ideas for new products, and drafting inspirational speeches.

The work was graded both by human evaluators and by GPT-4, and both assessments pointed the same way. On average, consultants working with GPT-4 completed 12.2 percent more tasks, finished them 25.1 percent faster, and produced results rated 40 percent higher in quality than their counterparts working without AI.

"Consultants using ChatGPT-4 outperformed those who did not, by a lot. On every dimension," writes Ethan Mollick of the Wharton School, who participated in the study.

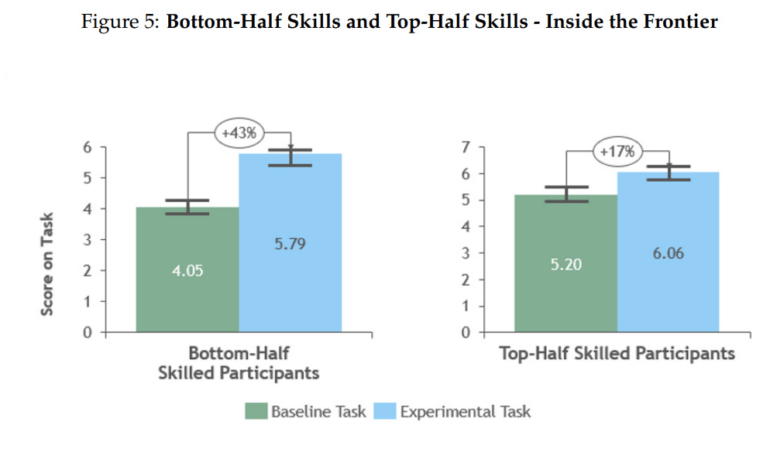

The study also found that consultants who performed below average without AI benefited most from using it. With GPT-4's help, their performance increased by 43 percent, while that of top performers improved by 17 percent. This leveling effect is still underappreciated, Mollick writes.

The research team also identified two usage patterns: consultants who handed off individual tasks to the AI ("centaurs") and consultants who fully integrated AI into their workflow ("cyborgs"). Both groups benefited from using AI.

The jagged frontier of AI

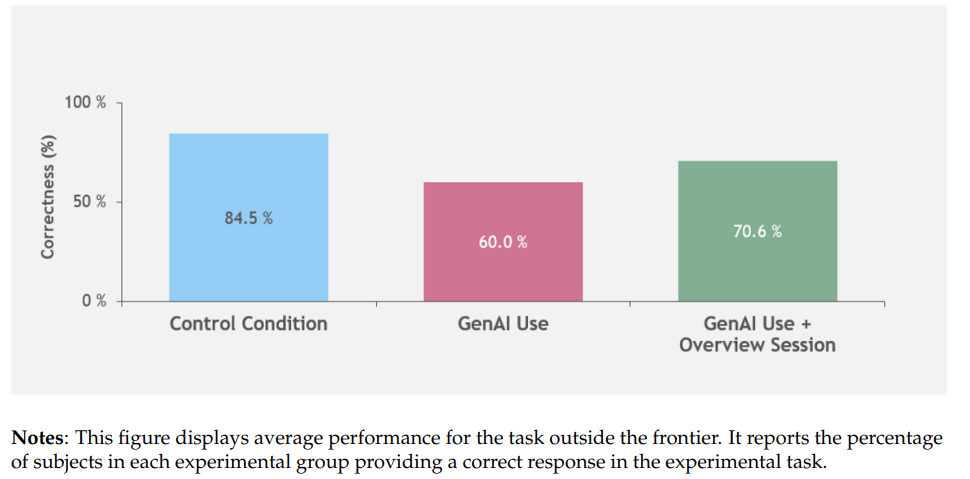

But the study also shows that while generative AI excels at many tasks, it fails at others, and the boundary between the two is uneven. The researchers call this boundary the "jagged frontier" of AI capabilities.

On tasks outside this frontier, consultants using AI performed nearly 25 percent worse than those working without it, because GPT-4 provided unreliable or incorrect information. The researchers therefore caution against relying on AI blindly.

"On some tasks AI is immensely powerful, and on others it fails completely or subtly. And, unless you use AI a lot, you won’t know which is which," Mollick writes.

Overall, however, Mollick says most consultants were able to navigate the frontier confidently, drawing on the strengths of AI in their work without being hurt by its weaknesses.

Mollick expects the current frontier of AI capabilities to keep expanding as the technology improves. He believes that at least two companies will release models more powerful than GPT-4 within the next year.
