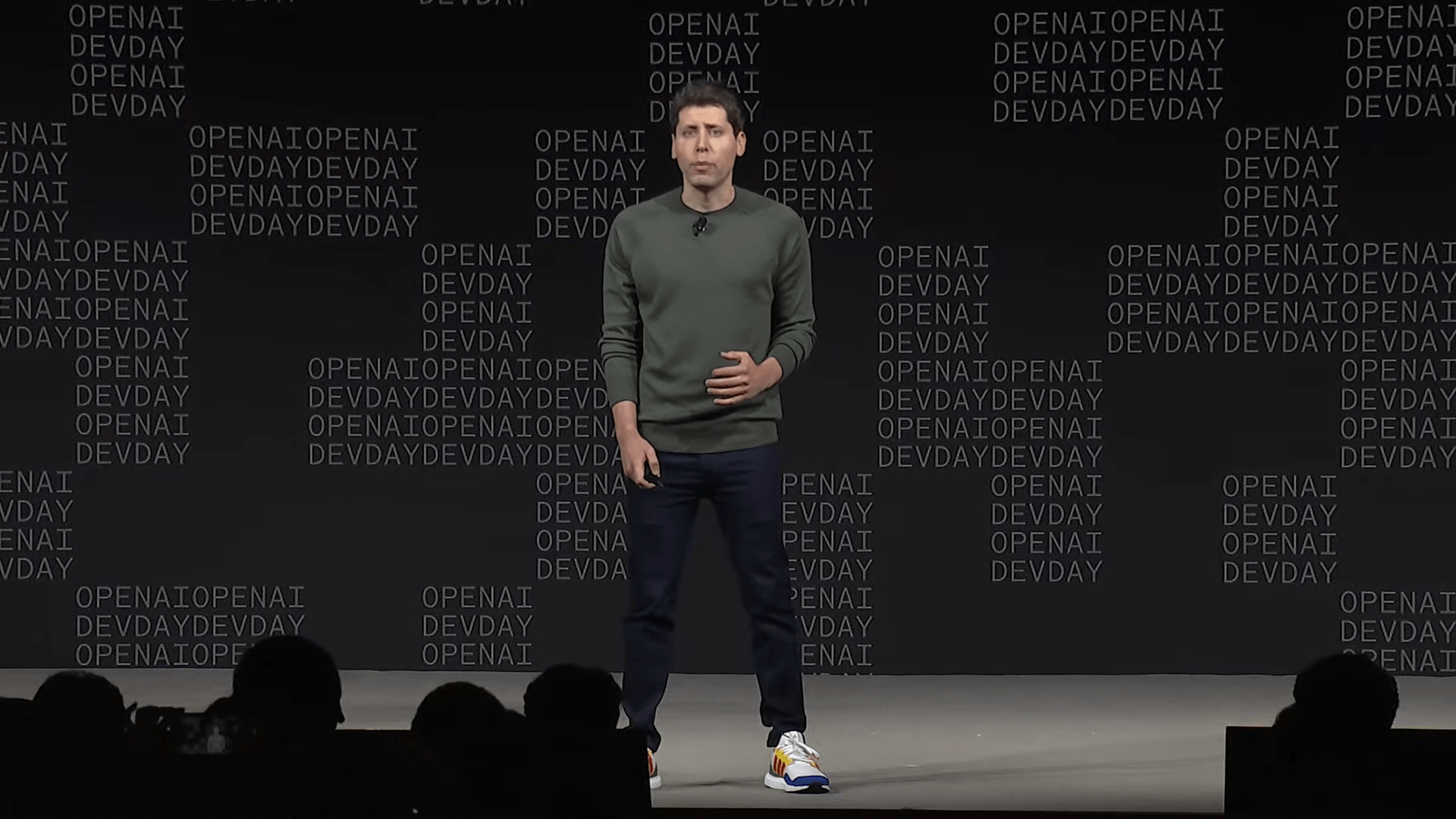

In an email to Elon Musk prior to founding OpenAI, Sam Altman outlined his vision for the organization and promised to create the first AGI that would benefit everyone.

In Elon Musk's lawsuit against Sam Altman and OpenAI, Musk's lawyers cite as evidence an email from the summer of 2015. It offers a glimpse into the period before OpenAI was founded in December 2015.

"The mission would be to create the first general AI and use it for individual empowerment," Altman wrote. OpenAI's recent move to develop individual AI agents seems to pick up on that promise.

Altman recommended starting with a small group of seven to ten people, expanding from there, housed in a spare building in Mountain View. He also proposed a five-person governance board, including himself and Musk, that would own the technology through the foundation and decide how it should be used "for the good of the world."

The email also makes clear that Altman wanted Musk involved beyond governance, even in a limited way, to help attract top talent. Ideally, Musk would visit the team about once a month to talk about progress.

"Even if you can't really spend time on it but can be publicly supportive, that would still probably be really helpful for recruiting," Altman wrote.

He outlined a compensation model in which researchers would have significant financial upside that was uncorrelated with what they built: a competitive salary plus Y Combinator equity, an arrangement meant to eliminate some conflicts of interest. Under the current model of OpenAI's for-profit unit, founded in 2019, employees earn money through equity holdings. Altman says he owns no shares in OpenAI.

Altman also suggested holding back a planned letter on AI regulation until the project was under way. He could then release it with a message like, "now that we are doing this, I've been thinking a lot about what sort of constraints the world needs for safety."

Elon Musk agreed with Altman on all points.

From: Elon Musk

Sent: Wednesday, June 24, 2015 11:06 PM

To: Sam Altman

Subject: Re: AI lab

Agree on all

On Jun 24, 2015, at 10:24 AM, Sam Altman wrote:

1) The mission would be to create the first general AI and use it for individual empowerment i.e., the distributed version of the future that seems the safest. More generally, safety should be a first-class requirement.

2) I think we'd ideally start with a group of 7-10 people, and plan to expand from there. We have a nice extra building in Mountain View they can have.

3) I think for a governance structure, we should start with 5 people and I'd propose you, and me. The technology would be owned by the foundation and used "for the good of the world", and in cases where it's not obvious how that should be applied the 5 of us would decide. The researchers would have significant financial upside but it would be uncorrelated to what they build, which should eliminate some of the conflict (we'll pay them a competitive salary and give them YC equity for the upside). We'd have an ongoing conversation about what work should be open-sourced and what shouldn't. At some point we'd get someone to run the team, but he/she probably shouldn't be on the governance board.

4) Will you be involved somehow in addition to just governance? I think that would be really helpful for getting work pointed in the right direction getting the best people to be part of it. Ideally you'd come by and talk to them about progress once a month or whatever. We generically call people involved in some limited way in YC "part-time partners" (we do that with Peter Thiel for example, though at this point he's very involved) but we could call it whatever you want. Even if you can't really spend time on it but can be publicly supportive, that would still probably be really helpful for recruiting.

5) I think the right plan with the regulation letter is to wait for this to get going and then I can just release it with a message like "now that we are doing this, I've been thinking a lot about what sort of constraints the world needs for safety." I'm happy to leave you off as a signatory. I also suspect that after it's out more people will be willing to get behind it.

Sam

Musk now accuses Altman of turning OpenAI into a de facto closed-source subsidiary of Microsoft, in violation of a founding agreement to develop AGI as open source and make it available to the public. According to the lawsuit, the secrecy surrounding GPT-4 serves commercial rather than safety interests.

According to the lawsuit, the new board of directors, appointed after Altman's dismissal and reinstatement, was hand-picked by Altman and lacks substantive AI expertise. Musk argues that this board will not be able to decide independently whether and when OpenAI has achieved AGI.

Musk left OpenAI's board in February 2018. At the time, OpenAI said his departure was meant to avoid potential conflicts of interest with his role as CEO of Tesla, which also does AI research. According to the lawsuit, Musk donated about $44 million to OpenAI between 2016 and 2020.