Large AI networks like language models make mistakes or contain outdated information. MEND shows how to update LLMs without changing the whole network.

Large AI models have become standard in many AI applications, such as natural language processing, image analysis, and image generation. The models, such as OpenAI's GPT-3, often have more diverse capabilities than small, specialized models and can be further improved via finetuning.

However, even the largest AI models regularly make mistakes and contain outdated information. GPT-3's most recent training data is from 2019, when Theresa May was still prime minister of the UK.

Large AI models must become updatable and correctable

Retraining GPT-3 would be an enormous effort and is not a solution, neither economically nor in principle, since the model would be outdated again within a few weeks. In addition, many flaws only become visible during use, not during the curation of the training data.

Ideally, therefore, developers and users should be able to correct large AI models in a way that leaves the model intact except for the intended changes.

However, such targeted corrections are extremely difficult. Researchers at Stanford University have now published a paper that addresses this problem. They say the distributed, black-box nature of the representations learned by large neural networks is the biggest obstacle: a single fact is not stored in one identifiable place that could simply be overwritten. Previously known solutions for smaller neural networks do not translate to large models, the team says.

"If presented with only a single problematic input and new desired output, fine-tuning approaches tend to overfit; other editing algorithms are either computationally infeasible or simply ineffective when applied to very large models," the paper states.

MEND relies on small auxiliary networks

A successful modification would have to meet three conditions: reliability, locality, and generality.

- It must reliably change the output for a previously problematic input ("Who is the prime minister of the UK?"),

- while minimally affecting the model's output for unrelated inputs ("What sports team does Messi play for?"),

- and at the same time producing correct output for related inputs ("Who is the UK PM?").
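The three conditions can be turned into simple scores. The following is a minimal sketch, not the evaluation code from the paper: the function `evaluate_edit`, the lookup-table "models", and the placeholder answers `NEW_PM` and `SOME_TEAM` are all assumptions made for illustration.

```python
from typing import Callable, Dict, List

# A "model" here is any function from a prompt to an answer string.
Model = Callable[[str], str]


def evaluate_edit(
    before: Model,
    after: Model,
    edit_input: str,
    target: str,
    paraphrases: List[str],
    unrelated: List[str],
) -> Dict[str, float]:
    """Score an edit on reliability, generality, and locality (each in 0..1)."""
    # Reliability: the edited model gives the new answer on the edited input.
    reliability = 1.0 if after(edit_input) == target else 0.0
    # Generality: the new answer also appears for rephrasings of that input.
    generality = sum(after(p) == target for p in paraphrases) / len(paraphrases)
    # Locality: answers to unrelated inputs are the same as before the edit.
    locality = sum(after(q) == before(q) for q in unrelated) / len(unrelated)
    return {
        "reliability": reliability,
        "generality": generality,
        "locality": locality,
    }


def make_model(table: Dict[str, str]) -> Model:
    """Toy stand-in for a language model: a plain lookup table."""
    return lambda prompt: table.get(prompt, "unknown")


# Placeholder answers; the real values do not matter for the illustration.
before = make_model({
    "Who is the prime minister of the UK?": "Theresa May",
    "Who is the UK PM?": "Theresa May",
    "What sports team does Messi play for?": "SOME_TEAM",
})

# An edit that fixes the exact input but does not carry over to the paraphrase.
after = make_model({
    "Who is the prime minister of the UK?": "NEW_PM",
    "Who is the UK PM?": "Theresa May",
    "What sports team does Messi play for?": "SOME_TEAM",
})

scores = evaluate_edit(
    before,
    after,
    edit_input="Who is the prime minister of the UK?",
    target="NEW_PM",
    paraphrases=["Who is the UK PM?"],
    unrelated=["What sports team does Messi play for?"],
)
print(scores)
```

In this toy case the edit is reliable and local but fails on generality, which is the kind of overfitting to a single input that the researchers describe for naive fine-tuning.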

As a solution, the team proposes "Model Editor Networks with Gradient Decomposition" (MEND). Instead of fine-tuning a large model directly, MEND trains small model editor networks (multilayer perceptrons) that make changes to the weights of the large model. These editor networks take the fine-tuning gradient of each correction as input and transform it into a weight update. To keep this tractable, they work on a low-rank decomposition of the gradient rather than on the full gradient matrix.
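Why a compact representation of the gradient exists can be seen on a single fully connected layer: for one input, the gradient of the loss with respect to the weight matrix is an outer product of two vectors, so it can be stored as `d_out + d_in` numbers instead of `d_out * d_in`. The sketch below illustrates only this structure under simplifying assumptions (a squared-error loss, a single layer, plain Python lists). The names `rank_one_factors`, `apply_edit`, and `identity_editor` are hypothetical, and `identity_editor` is a stand-in for the learned editor network, not the authors' implementation.

```python
from typing import Callable, List, Tuple

Vector = List[float]
Matrix = List[List[float]]
Editor = Callable[[Vector, Vector], Tuple[Vector, Vector]]


def linear(W: Matrix, x: Vector) -> Vector:
    """Compute y = W x for a weight matrix given as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def outer(a: Vector, b: Vector) -> Matrix:
    """Outer product a b^T, a rank-1 matrix."""
    return [[ai * bj for bj in b] for ai in a]


def rank_one_factors(W: Matrix, x: Vector, target: Vector) -> Tuple[Vector, Vector]:
    """Return (delta, u) such that grad_W of 0.5 * ||W x - target||^2 is outer(delta, u)."""
    y = linear(W, x)
    delta = [yi - ti for yi, ti in zip(y, target)]  # gradient w.r.t. the layer output
    return delta, list(x)  # u is simply the layer input


def identity_editor(delta: Vector, u: Vector) -> Tuple[Vector, Vector]:
    """Placeholder editor: returns the factors unchanged (plain gradient descent).

    In MEND this role is played by a small trained network; that part is not shown.
    """
    return delta, u


def apply_edit(W: Matrix, x: Vector, target: Vector, editor: Editor, lr: float) -> Matrix:
    """Apply one weight update built from the (possibly transformed) rank-1 factors."""
    delta, u = rank_one_factors(W, x, target)
    delta_t, u_t = editor(delta, u)
    update = outer(delta_t, u_t)
    return [[w - lr * g for w, g in zip(w_row, g_row)] for w_row, g_row in zip(W, update)]


# Toy layer with 3 inputs and 2 outputs.
W = [[1.0, 0.0, 0.0],
     [0.0, 1.0, 0.0]]
x = [1.0, 2.0, 0.0]
target = [0.0, 1.0]

delta, u = rank_one_factors(W, x, target)
stored_numbers = len(delta) + len(u)   # size of the factored gradient
full_numbers = len(W) * len(W[0])      # size of the full gradient matrix

# With a squared-error loss, a step size of 1 / ||x||^2 fits this one example exactly.
lr = 1.0 / sum(xi * xi for xi in x)
W_new = apply_edit(W, x, target, identity_editor, lr)
y_new = linear(W_new, x)
print(stored_numbers, full_numbers, y_new)
```

On this tiny layer the saving is negligible (5 numbers versus 6), but it grows with layer width: for a layer with several thousand inputs and outputs, the factors hold thousands of values while the full gradient holds millions. That difference is what makes it feasible for a small editor network to process the gradient of a very large model.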

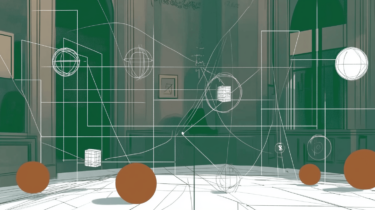

Video: Mitchell et al.

In their paper, the researchers show that MEND can be trained for large models with more than ten billion parameters within one day on a single GPU. It can then perform quick edits on T5, GPT, BERT, or BART models. The team also shows that MEND is likely to scale to model sizes of hundreds of billions of parameters.

For more information, see the MEND project page. The code is available on GitHub.