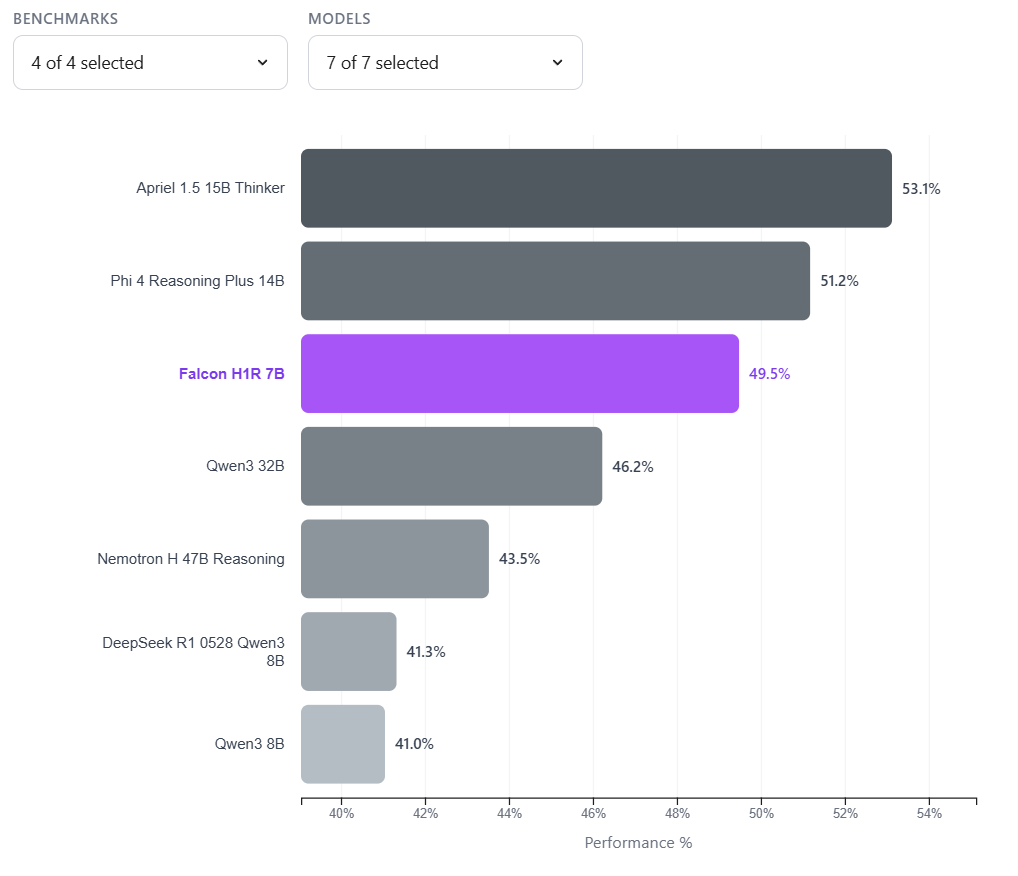

Abu Dhabi's TII claims its Falcon H1R 7B reasoning model matches rivals seven times its size

The Technology Innovation Institute (TII) in Abu Dhabi has released Falcon H1R 7B, a compact reasoning language model with 7 billion parameters. TII says the model matches the performance of competitors two to seven times larger across various benchmarks. As always, benchmark scores correlate only loosely with real-world performance, especially for smaller models. Falcon H1R 7B uses a hybrid Transformer-Mamba architecture, which lets it process data faster than comparable models.

The model is available on Hugging Face as a full checkpoint and as a quantized version, along with a demo. TII released it under the Falcon LLM license, which allows free use, reproduction, modification, distribution, and commercial use. Users must follow the Acceptable Use Policy, which TII can update at any time.
