The Computer Science and Artificial Intelligence Laboratory (CSAIL) at MIT has developed a new way for LLMs to explain the behavior of other AI systems.

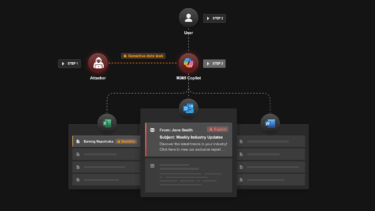

The method uses Automated Interpretability Agents (AIAs): pre-trained language models that produce intuitive explanations of the computations inside trained networks.

AIAs are designed to mimic the experimental process of a scientist, designing and running tests on other computational systems.

They provide explanations in various forms, such as natural-language descriptions of what a system does and where it fails, and code that reproduces the system's behavior.

According to the MIT researchers, AIAs differ from existing interpretability approaches in that they actively participate in hypothesis generation, experimental testing, and iterative learning. This active participation allows them to refine their understanding of other systems in real time and gain deeper insight into how complex AI systems work.
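The hypothesize, test, and refine loop described above can be illustrated with a minimal sketch. It is not the researchers' implementation: `black_box`, the fixed `HYPOTHESES` table, and `run_experiments` are hypothetical names, and a real AIA would have a language model propose hypotheses and choose probes rather than read them from a hard-coded list.

```python
from typing import Callable, Dict, List

# Hypothetical system under study. The "agent" may only call it, never read it.
def black_box(x: float) -> float:
    return 3 * x + 1

# Hypothetical candidate explanations, each paired with code that would
# reproduce the behavior if the explanation were correct.
HYPOTHESES: Dict[str, Callable[[float], float]] = {
    "doubles the input": lambda x: 2 * x,
    "squares the input": lambda x: x * x,
    "triples the input and adds one": lambda x: 3 * x + 1,
}

def run_experiments(
    system: Callable[[float], float],
    hypotheses: Dict[str, Callable[[float], float]],
    probes: List[float],
    tol: float = 1e-9,
) -> List[str]:
    """Probe the system and discard every hypothesis the observations contradict."""
    surviving = dict(hypotheses)
    for x in probes:
        observed = system(x)
        surviving = {
            name: h for name, h in surviving.items()
            if abs(h(x) - observed) <= tol
        }
        if len(surviving) <= 1:
            break  # nothing left to distinguish between
    return list(surviving)

result = run_experiments(black_box, HYPOTHESES, probes=[0.0, 1.0, 2.0, -1.0])
print(result)
```

In this toy setup each experiment narrows the set of explanations that remain consistent with the observed behavior, which is the iterative element the researchers highlight.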

The FIND benchmark

A key contribution of the researchers is the FIND (Function Interpretation and Description) benchmark. The benchmark contains a test bed of functions that resemble the computations in trained networks. These functions come with descriptions of their behavior.

Researchers often do not have access to ground-truth labels of functions or descriptions of learned computations. FIND aims to address this problem by providing a reliable standard for evaluating interpretability procedures.

One category of functions in the FIND benchmark is synthetic neurons. These mimic the behavior of real neurons in language models and are selective for certain concepts, such as "ground transportation."

AIAs gain black-box access to these neurons and design inputs to test the neurons' responses. For example, they test a neuron's selectivity for "cars" versus other modes of transportation.

To do this, they determine whether a neuron responds more strongly to "cars" than to other inputs. The descriptions the AIAs produce are then compared with the ground-truth descriptions of the synthetic neurons, in this case "ground transportation." The comparison shows how well the AIAs have worked out the role of each neuron.
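A toy version of this probing experiment might look like the following sketch. The neuron here is a hand-written stand-in, not one of FIND's actual synthetic neurons, and `synthetic_neuron`, `GROUND_TRANSPORT`, and the probe word lists are all assumptions made for illustration.

```python
from typing import Callable, Dict, List

# Hypothetical ground truth hidden inside the black box.
GROUND_TRANSPORT = {"car", "bus", "train", "truck", "bicycle"}

def synthetic_neuron(word: str) -> float:
    """Toy black box: high activation for ground-transportation words only."""
    return 1.0 if word.lower() in GROUND_TRANSPORT else 0.0

def mean_activation(neuron: Callable[[str], float], words: List[str]) -> float:
    """Average response of the neuron over a group of probe words."""
    return sum(neuron(w) for w in words) / len(words)

# Probe groups chosen to separate "cars only" from broader concepts.
probes: Dict[str, List[str]] = {
    "cars": ["car"],
    "other ground transportation": ["bus", "train", "bicycle"],
    "air and sea transportation": ["airplane", "boat", "helicopter"],
    "unrelated": ["banana", "sonnet", "algebra"],
}

results = {
    label: mean_activation(synthetic_neuron, words)
    for label, words in probes.items()
}
for label, activation in results.items():
    print(f"{label}: {activation:.2f}")
```

Here the neuron responds equally to cars and to other ground vehicles but not to air or sea transport, so the better description is "ground transportation" rather than "cars."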

Because the models that provide the explanations are themselves black boxes, external evaluation of interpretability methods is becoming increasingly important.

The FIND benchmark aims to address this need by providing a set of functions with a known structure, modeled on observed behavior, and covering various domains, from mathematical reasoning to symbolic operations on strings.
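To make the idea of functions with a known structure concrete, the sketch below pairs two toy functions (one numeric, one string-based) with ground-truth descriptions and scores a candidate code explanation by how often it agrees with the real function. The `BenchmarkEntry` class, the example functions, and the agreement score are assumptions for illustration; the article does not specify FIND's data format or evaluation metric.

```python
from dataclasses import dataclass
from typing import Any, Callable, List

@dataclass
class BenchmarkEntry:
    """Hypothetical benchmark item: a black-box function plus its known description."""
    function: Callable[[Any], Any]
    description: str
    test_inputs: List[Any]

def agreement(entry: BenchmarkEntry, candidate: Callable[[Any], Any]) -> float:
    """Fraction of test inputs on which the candidate code matches the function."""
    matches = sum(1 for x in entry.test_inputs if candidate(x) == entry.function(x))
    return matches / len(entry.test_inputs)

numeric_entry = BenchmarkEntry(
    function=lambda x: abs(x) + 2,
    description="Returns the absolute value of the input plus two.",
    test_inputs=[-3, -1, 0, 1, 4],
)
string_entry = BenchmarkEntry(
    function=lambda s: s[::-1].upper(),
    description="Reverses the string and converts it to upper case.",
    test_inputs=["abc", "Find", ""],
)

# Candidate explanations written as code. The numeric one overlooks
# the behavior on negative inputs; the string one is equivalent.
def candidate_numeric(x: int) -> int:
    return x + 2

def candidate_string(s: str) -> str:
    return s.upper()[::-1]

numeric_score = agreement(numeric_entry, candidate_numeric)
string_score = agreement(string_entry, candidate_string)
print(numeric_score, string_score)
```

Because the true function is known, a partially correct explanation such as `candidate_numeric` can be caught and quantified instead of being judged by plausibility alone.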

Sarah Schwettmann, co-author of the study, emphasizes the benefits of this approach: because AIAs can generate and test hypotheses independently, they may uncover behaviors that would otherwise be difficult to detect.

Benchmarks with "ground truth" answers have driven the evolution of language models. Schwettmann hopes that FIND can play a similar role in interpretability research.

Currently, the FIND benchmark shows that the AIA approach still needs improvement: AIAs fail to describe nearly half of the functions in the benchmark.

According to the researchers, AIAs miss finer details, "particularly in function subdomains with noise or irregular behavior." Nevertheless, AIAs outperformed existing interpretability methods.

The researchers are also working on a toolkit to improve the ability of AIAs to conduct more accurate experiments with neural networks. The toolkit aims to provide AIAs with better tools for selecting inputs and refining hypothesis tests.

The team is also focusing on the practical challenges of AI interpretability by investigating how to ask the right questions when analyzing models in real-world scenarios. The goal is to develop automated interpretability procedures.

These methods aim to enable humans to check and diagnose AI systems for possible errors, hidden biases, or unexpected behavior before they are deployed. As a next step, the researchers want to develop near-autonomous AIAs that would test other AI systems. Human scientists would supervise and advise them. Advanced AI systems could then develop new experiments and questions that go beyond the original thinking of human scientists.