AI agents in GitHub and GitLab workflows create new enterprise security risks

Aikido Security warns that plugging AI agents into GitHub and GitLab workflows opens up a serious vulnerability in enterprise environments. The issue hits widely used tools like Gemini CLI, Claude Code, OpenAI Codex, and GitHub AI Inference.

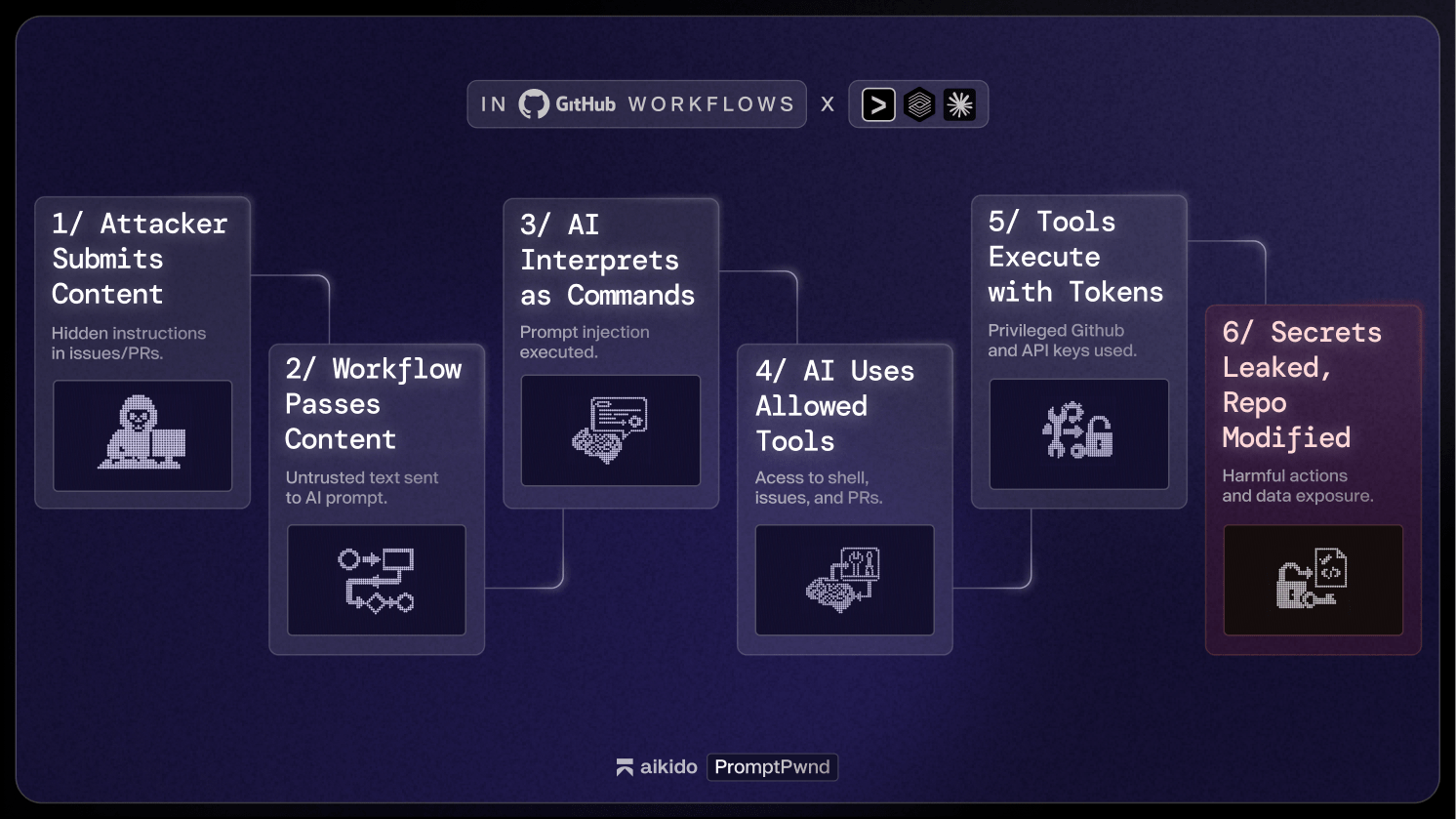

According to the security firm, attackers can slip hidden instructions into issues, pull requests, or commits. That text then flows straight into model prompts, where the AI interprets it as a command instead of harmless content. Because these agents often have permission to run shell commands or modify repos, a single prompt injection can leak secrets or alter workflows. Aikido says tests showed this risk affected at least five Fortune 500 companies.

Google patched the issue in its Gemini CLI repo within four days, according to the report. To help organizations secure their pipelines, Aikido published open search rules and recommends limiting the tools available to AI agents, validating all inputs, and avoiding the direct execution of AI outputs.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.

Subscribe now