AI coding can make developers slower even if they feel faster

Update: Added perspective from study participant Quentin Anthony.

Update from July 13, 2025:

Quentin Anthony, one of the 16 developers in the METR study, shared his take on the results on X. Unlike most participants, Anthony cut his task completion time by 38 percent with AI, which makes him a notable outlier in the overall findings.

Anthony attributes the wider productivity drop not to a lack of skill, but to the way developers approach AI tools. "We like to say that LLMs are tools, but treat them more like a magic bullet," he says. Because these models often feel tantalizingly close to a solution, developers end up spending too much time with them rather than moving efficiently toward their goal.

He also points to technical limits. Large language models, he argues, have uneven strengths: they are effective at writing test code but fall short on low-level systems work such as GPU kernel programming or synchronization logic. Anthony also cites "context rot", where LLMs become less reliable as chats grow longer or drift off-topic. This can pull developers into unproductive loops.

His advice: start new chats frequently, choose models based on their specific strengths, and set firm limits on how much time to spend interacting with LLMs.

Anthony himself uses different models for different jobs: Gemini for code comprehension, Claude for refactoring and debugging. He prefers direct API access over IDE plugins so he can control exactly what the model sees. As he puts it, "LLMs are a tool, and we need to start learning its pitfalls and have some self-awareness."

Original article from July 11, 2025:

A new study finds that experienced open-source developers actually work more slowly with AI coding tools, even though they believe they're moving faster.

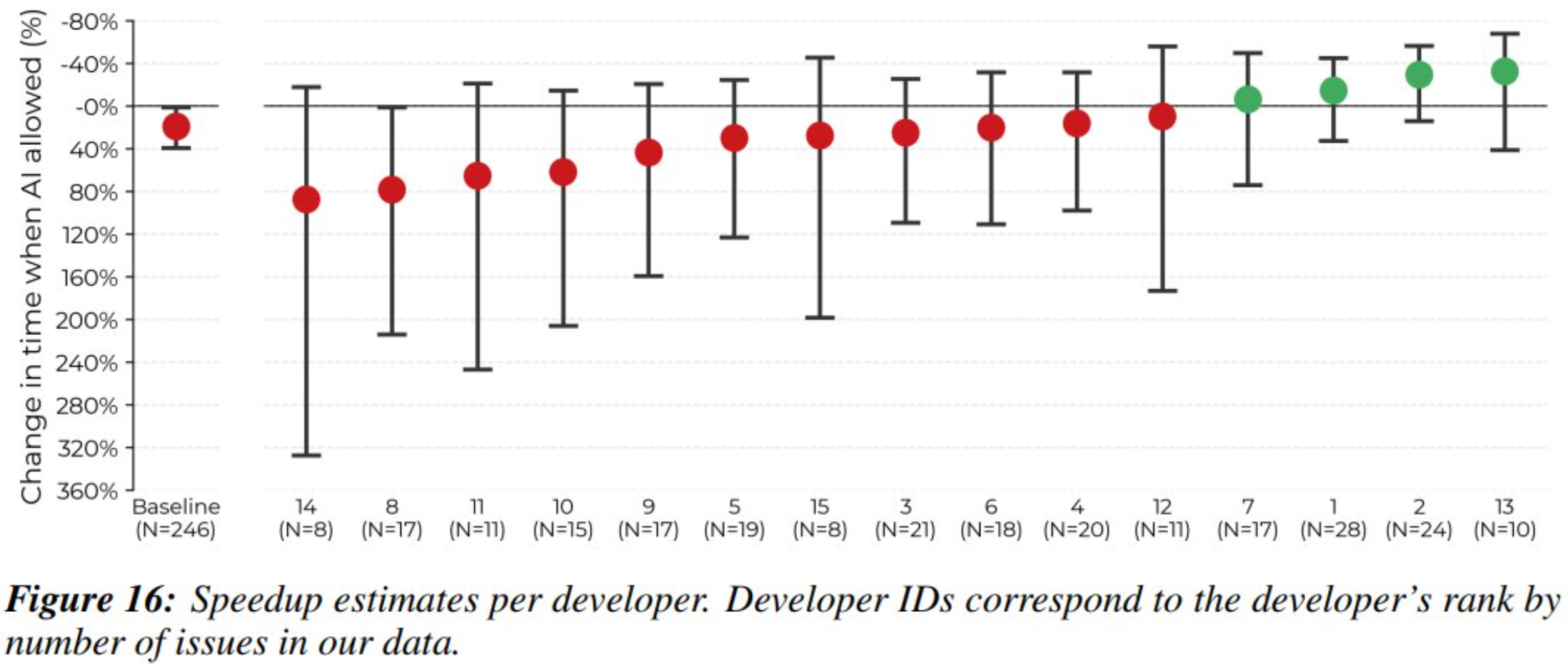

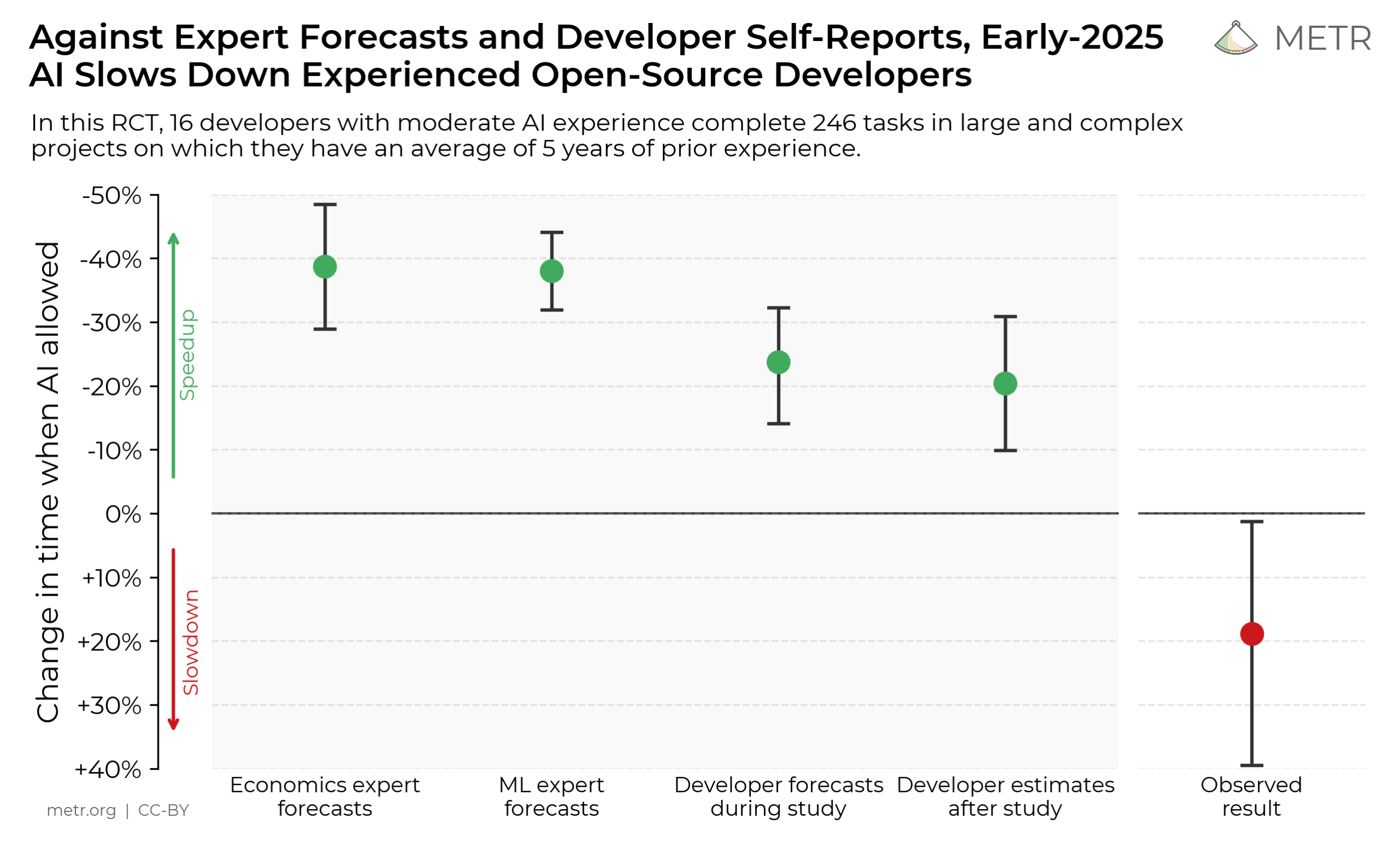

Researchers at METR ran a randomized trial in early 2025 to see how advanced AI tools affect the productivity of seasoned open-source developers. On average, developers took 19 percent longer to complete real-world tasks when using AI, even though they believed AI had sped them up.

The perception gap: Slow feels fast

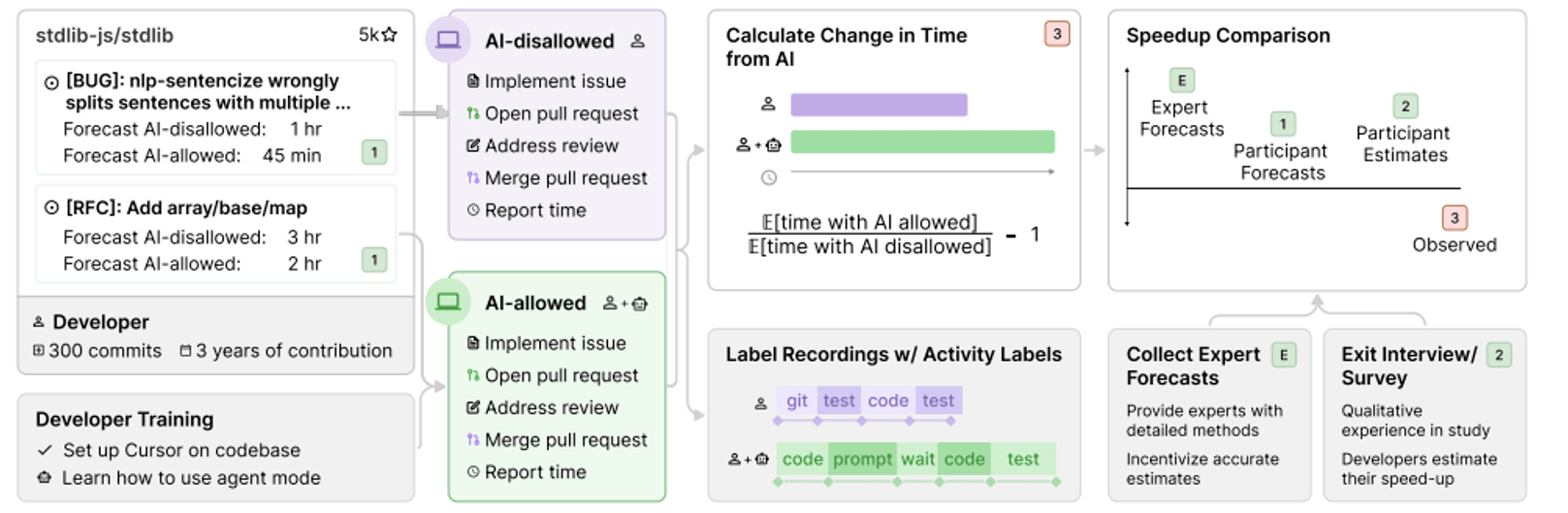

The study followed 16 experienced developers as they tackled 246 real tasks from their own complex open-source projects. Before starting, the developers predicted that AI would make them 24 percent faster.

To measure the true impact, each task was randomly assigned to one of two conditions: a control condition in which generative AI was not allowed, and an experimental condition in which developers could use AI assistants, mainly Cursor Pro with frontier models such as Claude 3.5 Sonnet and Claude 3.7 Sonnet.

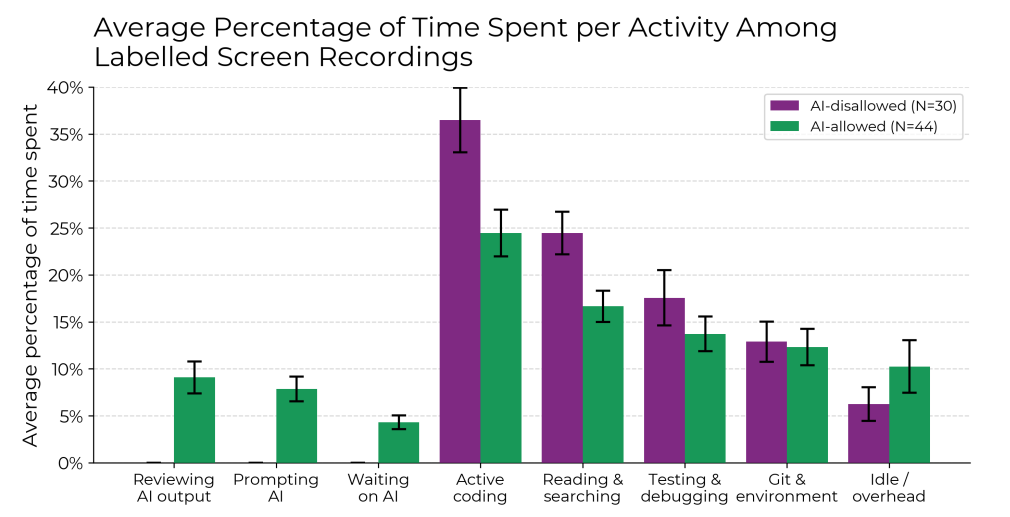

Developers recorded their screens and logged how long each task took. To account for varying difficulty levels, the researchers used a statistical method that included the developers' own time estimates for each task. This let them isolate how much AI use actually changed working time, regardless of whether a task was easy or hard.
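One common way to "include the developers' own time estimates" is a log-linear regression: regress the log of actual completion time on an AI indicator plus the log of the developer's pre-task estimate, then read exp(b) on the AI term as a time multiplier. The sketch below assumes that specification; METR's exact model may differ. The data is entirely synthetic (the function names `simulate_tasks`, `fit_log_linear`, and `solve_linear` are hypothetical), with the true multiplier set to 1.19 only to mirror the headline figure, so it shows how the estimate is recovered, not what METR measured.

```python
import math
import random


def solve_linear(a, b):
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Augmented matrix [a | b], copied so the inputs are not modified.
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def fit_log_linear(times, ai_flags, forecasts):
    """Ordinary least squares for log(time) = a + b*ai + c*log(forecast).

    Returns (a, b, c); exp(b) is the estimated time multiplier for AI-allowed tasks.
    """
    rows = [[1.0, float(ai), math.log(f)] for ai, f in zip(ai_flags, forecasts)]
    y = [math.log(t) for t in times]
    k = len(rows[0])
    # Normal equations: (X^T X) beta = X^T y.
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    a, b, c = solve_linear(xtx, xty)
    return a, b, c


def simulate_tasks(n, true_multiplier, noise_sd, seed=0):
    """Synthetic tasks: time = forecast * (multiplier if AI else 1) * lognormal noise."""
    rng = random.Random(seed)
    times, ai_flags, forecasts = [], [], []
    for _ in range(n):
        # Forecast difficulty varies widely: roughly 10 to 240 minutes.
        forecast = math.exp(rng.uniform(math.log(10), math.log(240)))
        ai = rng.random() < 0.5  # random assignment per task, like a coin flip
        effect = true_multiplier if ai else 1.0
        times.append(forecast * effect * math.exp(rng.gauss(0.0, noise_sd)))
        ai_flags.append(ai)
        forecasts.append(forecast)
    return times, ai_flags, forecasts


times, ai_flags, forecasts = simulate_tasks(2000, true_multiplier=1.19, noise_sd=0.2)
a, b, c = fit_log_linear(times, ai_flags, forecasts)
multiplier = math.exp(b)
print(f"estimated time multiplier with AI: {multiplier:.3f}")
print(f"estimated change in completion time: {100 * (multiplier - 1):+.1f}%")
```

Because the forecast enters as a covariate, a hard task and an easy task contribute on the same log scale, which is what lets the AI effect be separated from task difficulty.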

The key finding: while developers consistently expected to save time, the data showed the opposite. Even after finishing their tasks, they still believed AI had made them 20 percent faster, despite actually taking longer.
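To make the gap concrete, take a hypothetical task that would take 60 minutes without AI, and assume each percentage describes a change in completion time:

```latex
\begin{aligned}
\text{expected (24\% less time):}  &\quad 60 \times (1 - 0.24) = 45.6 \text{ min} \\
\text{perceived (20\% less time):} &\quad 60 \times (1 - 0.20) = 48 \text{ min} \\
\text{measured (19\% more time):}  &\quad 60 \times (1 + 0.19) = 71.4 \text{ min}
\end{aligned}
```

On this one-hour task, the difference between how long developers felt they took and how long they actually took would be 23.4 minutes.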

Real-world impact requires new measurement methods

METR argues that these results show the need for new ways to measure the real-world effects of generative AI. Popular benchmarks such as SWE-Bench or RE-Bench typically focus on isolated, context-free tasks with algorithmic scoring, which can distort the picture. Randomized controlled trials like this one, by contrast, test real tasks in realistic settings, giving a fuller view of how AI helps (or hinders) developers in everyday work.

I asked our AI developer whether the results matched his impressions from his day-to-day work. He finds them plausible, especially for mature, complex projects with high quality standards and many implicit rules, as is typical of open-source projects. In these settings, AI tools can add overhead: developers have to spend extra effort explaining context to the model and checking its output.

The situation is different for new projects, rapid prototyping, and work with unfamiliar frameworks. In these scenarios, AI tools can play to their strengths and genuinely support developers.
