AI reconstructs passwords from keyboard sounds alone

British researchers have trained a deep learning model based on CoAtNet, an existing image-classification architecture, to recover sensitive data such as passwords from the sound of keystrokes with up to 95 percent accuracy.

The research builds on the observation that each key on a keyboard produces a distinct acoustic signature. By recording keystrokes with a microphone and analyzing the audio with the trained model, the researchers can map these signals back to individual characters and, from there, to passwords.
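Before any classification can happen, individual keystrokes have to be cut out of a continuous recording. The article does not say how the researchers did this; the following is a minimal Python sketch assuming a simple short-time energy threshold. The function names (`frame_energy`, `isolate_keystrokes`), the threshold, and the synthetic audio are all hypothetical stand-ins for a real recording pipeline.

```python
import math
from typing import List


def frame_energy(signal: List[float], frame_len: int) -> List[float]:
    """Sum of squared samples in consecutive, non-overlapping frames."""
    return [
        sum(x * x for x in signal[i:i + frame_len])
        for i in range(0, len(signal) - frame_len + 1, frame_len)
    ]


def isolate_keystrokes(signal: List[float], frame_len: int,
                       threshold: float, clip_len: int) -> List[List[float]]:
    """Cut a fixed-length clip wherever frame energy exceeds the threshold.

    Hypothetical energy-threshold segmentation: frames that fall inside an
    already extracted clip are skipped so one keystroke yields one clip.
    """
    energies = frame_energy(signal, frame_len)
    clips: List[List[float]] = []
    next_free = 0  # first sample index not yet covered by a clip
    for idx, energy in enumerate(energies):
        start = idx * frame_len
        if energy > threshold and start >= next_free:
            clip = signal[start:start + clip_len]
            if len(clip) == clip_len:  # drop truncated clips at the end
                clips.append(clip)
            next_free = start + clip_len
    return clips


if __name__ == "__main__":
    rate = 8000  # samples per second (synthetic)
    silence = [0.0] * 800
    # A decaying 1 kHz burst as a crude stand-in for one key press.
    burst = [math.sin(2 * math.pi * 1000 * n / rate) * math.exp(-n / 80)
             for n in range(400)]
    audio = silence + burst + silence + burst + silence
    clips = isolate_keystrokes(audio, frame_len=80, threshold=1.0, clip_len=400)
    print(len(clips))  # 2
```

A real recording would need a threshold tuned to the microphone's noise floor; a fixed clip length keeps every extracted keystroke the same size, which simplifies the later conversion to equally sized images.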

From recording to prediction

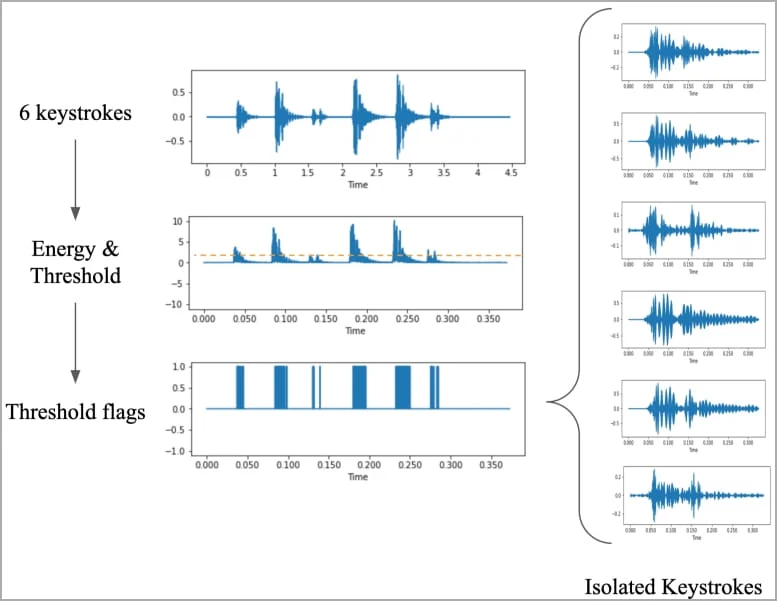

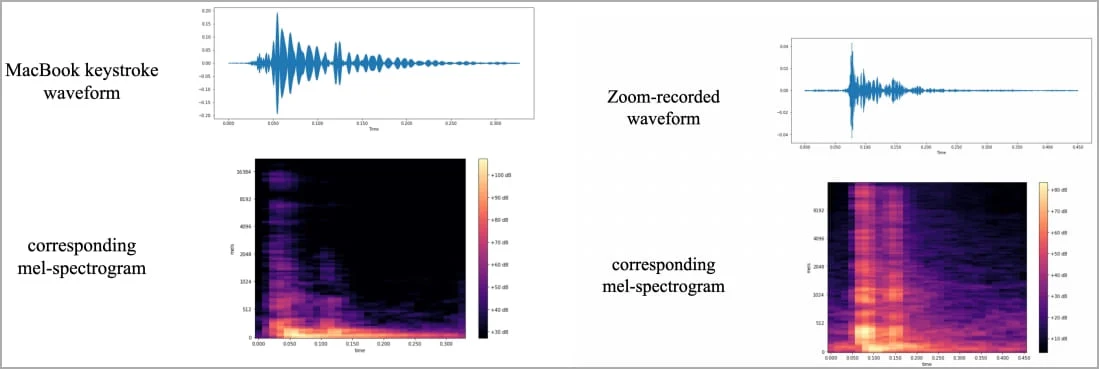

The researchers began by recording 36 keys on a 16-inch MacBook Pro with an M1 chip, pressing each key 25 times. They converted these recordings into waveforms and spectrograms, which make the differences between keystrokes visible, and then used these images to train the CoAtNet-based classifier.
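The waveform-to-spectrogram step can be illustrated with a short-time Fourier transform. This is a minimal, standard-library-only sketch, not the researchers' code: the helper names, the 64-sample FFT size, and the 32-sample hop are assumptions chosen for readability. It produces a plain linear-frequency log spectrogram, whereas practical audio pipelines often use mel-scaled variants, and its naive DFT is O(n²), so it only demonstrates the idea.

```python
import cmath
import math
from typing import List


def hann(n: int) -> List[float]:
    """Periodic Hann window of length n (tapers frame edges to reduce leakage)."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]


def dft_magnitudes(frame: List[float]) -> List[float]:
    """Magnitudes of the first n//2 + 1 DFT bins, via a naive O(n^2) DFT."""
    n = len(frame)
    mags = []
    for k in range(n // 2 + 1):
        acc = sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n)
                  for t in range(n))
        mags.append(abs(acc))
    return mags


def log_spectrogram(signal: List[float], n_fft: int = 64,
                    hop: int = 32) -> List[List[float]]:
    """Short-time Fourier transform followed by a log10 magnitude.

    Returns one row per time frame and one column per frequency bin,
    i.e. a 2-D array that can be treated as an image.
    """
    window = hann(n_fft)
    rows = []
    for start in range(0, len(signal) - n_fft + 1, hop):
        frame = [s * w for s, w in zip(signal[start:start + n_fft], window)]
        # Small offset avoids log10(0) for silent bins.
        rows.append([math.log10(m + 1e-10) for m in dft_magnitudes(frame)])
    return rows


if __name__ == "__main__":
    rate = 8000
    tone = [math.sin(2 * math.pi * 1000 * t / rate) for t in range(400)]
    spec = log_spectrogram(tone)
    print(len(spec), len(spec[0]))  # 11 33
    peak_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
    print(peak_bin * rate / 64)  # 1000.0
```

Each row is one time slice and each column one frequency bin, so keystrokes that sound different end up as visibly different images. That framing is what allows an image architecture such as CoAtNet to be applied to audio at all.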

To capture the keystrokes, the researchers placed an iPhone 13 Mini next to the laptop. With this setup, the model identified keystrokes with up to 95 percent accuracy.

They also showed that the method works on keystrokes recorded through videoconferencing platforms: accuracy reached 93 percent over Zoom and 91.7 percent over Skype, only slightly below the direct-recording result.
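The accuracy figures above are the share of isolated keystrokes whose predicted key matches the key actually pressed. Training CoAtNet is beyond a short example, so the sketch below swaps in a much simpler nearest-centroid classifier as a stand-in, operating on synthetic feature vectors. The 36 keys and 25 presses per key echo the setup described above; the 20/5 train/test split, the data generator, and all names are hypothetical.

```python
import math
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Sample = Tuple[List[float], str]  # (feature vector, key label)


def train_centroids(samples: Sequence[Sample]) -> Dict[str, List[float]]:
    """Stand-in for CoAtNet (assumption): average each key's feature vectors."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = defaultdict(int)
    for features, key in samples:
        if key not in sums:
            sums[key] = [0.0] * len(features)
        sums[key] = [a + b for a, b in zip(sums[key], features)]
        counts[key] += 1
    return {k: [v / counts[k] for v in vec] for k, vec in sums.items()}


def predict(centroids: Dict[str, List[float]], features: List[float]) -> str:
    """Return the key whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda k: math.dist(centroids[k], features))


def accuracy(centroids: Dict[str, List[float]],
             samples: Sequence[Sample]) -> float:
    """Fraction of samples whose predicted key equals the true key."""
    hits = sum(predict(centroids, f) == key for f, key in samples)
    return hits / len(samples)


def synthetic_presses(rng: random.Random, keys: str, presses: int,
                      dim: int, noise: float) -> List[Sample]:
    """Hypothetical data: each key gets its own direction plus Gaussian noise."""
    data: List[Sample] = []
    for i, key in enumerate(keys):
        for _ in range(presses):
            vec = [rng.gauss(0.0, noise) for _ in range(dim)]
            vec[i % dim] += 5.0
            data.append((vec, key))
    return data


if __name__ == "__main__":
    rng = random.Random(0)
    keys = "abcdefghijklmnopqrstuvwxyz0123456789"  # 36 keys
    train = synthetic_presses(rng, keys, presses=20, dim=len(keys), noise=0.3)
    test = synthetic_presses(rng, keys, presses=5, dim=len(keys), noise=0.3)
    model = train_centroids(train)
    print(f"accuracy: {accuracy(model, test):.1%}")
```

Because the synthetic classes are cleanly separated, this toy setup scores far higher than real keystroke audio would; the point is only how accuracy is measured on held-out presses, not to reproduce the reported 95, 93, or 91.7 percent.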

Gateway for cyber attacks

This acoustic attack could expose passwords, messages, and other sensitive information. Earlier studies have shown that similar attacks also work on laptops and keyboards from other manufacturers.

Zoom recommends muting the microphone by default, or at least while typing. Background noise suppression can also help.

The researchers also suggest switching to touch typing (typing with all ten fingers), which significantly reduces the rate at which individual keys can be identified acoustically.

Password managers can further reduce the risk of acoustic eavesdropping because they remove the need to type passwords at all.

- Get the latest AI news from The Decoder.