Leading AI companies and research labs, such as OpenAI, Amazon, Google, Meta, and Microsoft, are making voluntary commitments to improve the safety, security, and trustworthiness of AI technology and services.

Coordinated by the White House, these actions aim to promote meaningful and effective AI governance in the United States and around the world. As part of their voluntary commitments, the companies plan to report system vulnerabilities, use digital watermarking for AI-generated content, and disclose technology flaws impacting fairness and bias.

The voluntary commitments released by the White House aim to improve various aspects of AI development:

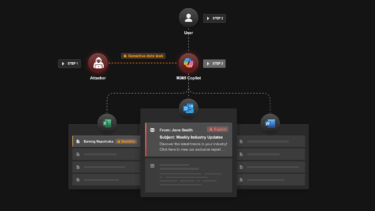

- Safety: Companies commit to internal and external red-teaming of models or systems to identify misuse, societal risks, and national security concerns.

- Security: Companies will invest in cybersecurity and insider-threat safeguards to protect proprietary and unpublished model weights.

- Trust: Companies will develop and deploy mechanisms that let users know whether audio or visual content is AI-generated, publicly report model capabilities and limitations, and prioritize research on societal risks posed by AI systems.

- Tackle society's biggest challenges: Companies will develop and deploy cutting-edge AI systems to help solve critical problems such as mitigating climate change, detecting cancer early, and combating cyber threats.

These immediate steps are intended to address potential risks until Congress passes AI regulations. Critics argue that merely pledging to act responsibly may not be enough to hold these companies accountable.