AI-powered medical diagnosis gets a transparency boost with new 'Chain of Diagnosis' method

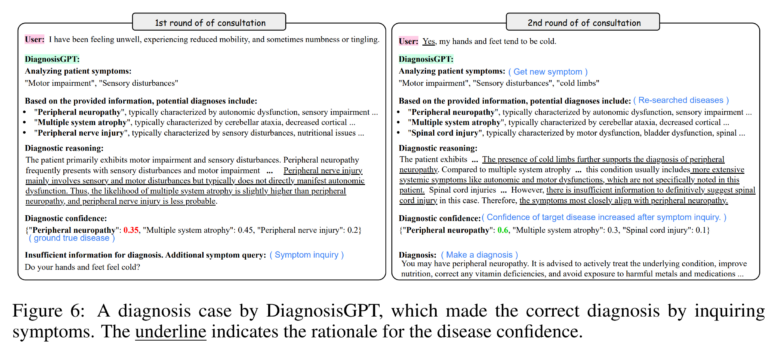

A research team from the Shenzhen Research Institute of Big Data and the Chinese University of Hong Kong has developed an AI system called DiagnosisGPT. The system aims to make AI diagnoses transparent and understandable. It can identify over 9,600 diseases and outperforms other AI models in diagnostic tests.

According to the researchers, the system makes the diagnostic process transparent for doctors and patients. It is based on the "Chain of Diagnosis" (CoD) method, which breaks the diagnostic process down into individual, traceable steps:

1. Summarize the patient's symptoms

2. Suggest possible diseases

3. Analyze which diseases are most likely

4. Provide probabilities for each candidate

5. Make a diagnosis if this assessment is confident enough

6. Otherwise, ask for additional symptoms

This focus on human-like diagnostic decisions sets the system apart from other approaches like Google's MedLM models or Microsoft's Medprompt.
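To make the six steps more concrete, here is a minimal Python sketch of one pass through such a chain. It is an illustration only and not the researchers' implementation: DiagnosisGPT is a language model, whereas this toy version scores candidates by simple symptom overlap. The knowledge base, the function name `chain_of_diagnosis`, the `DiagnosisStep` record, and the default threshold are all assumptions made up for this example.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical toy knowledge base: disease -> typical symptoms.
# Illustrative values only, not data from the paper.
KNOWLEDGE_BASE = {
    "common cold": {"cough", "runny nose", "sore throat"},
    "influenza": {"cough", "fever", "muscle ache"},
    "migraine": {"headache", "nausea", "light sensitivity"},
}


@dataclass
class DiagnosisStep:
    symptoms: set            # step 1: summarized symptoms
    probabilities: dict      # steps 2-4: candidate -> probability
    diagnosis: Optional[str]      # step 5: set if confident enough
    next_question: Optional[str]  # step 6: set if more input is needed


def chain_of_diagnosis(reported, threshold=0.55):
    # Step 1: summarize the symptoms (here: normalize to lowercase strings).
    symptoms = {s.strip().lower() for s in reported}

    # Step 2: suggest candidate diseases that share at least one symptom.
    candidates = {d: known for d, known in KNOWLEDGE_BASE.items() if known & symptoms}
    if not candidates:
        return DiagnosisStep(symptoms, {}, None, "Can you describe another symptom?")

    # Steps 3 and 4: score candidates by symptom overlap, then normalize
    # the scores so they sum to 1 and can be read as probabilities.
    scores = {d: len(known & symptoms) / len(known) for d, known in candidates.items()}
    total = sum(scores.values())
    probabilities = {d: score / total for d, score in scores.items()}

    # Step 5: diagnose if the top candidate clears the threshold.
    top = max(probabilities, key=probabilities.get)
    if probabilities[top] >= threshold:
        return DiagnosisStep(symptoms, probabilities, top, None)

    # Step 6: otherwise ask about a symptom of the top candidate
    # that has not been reported yet.
    missing = sorted(candidates[top] - symptoms)
    question = (f"Do you also have {missing[0]}?" if missing
                else "Can you describe another symptom?")
    return DiagnosisStep(symptoms, probabilities, None, question)
```

Calling `chain_of_diagnosis(["cough"])` leaves two candidates tied, so the sketch returns a follow-up question instead of a diagnosis. Adding "fever" to the input pushes one candidate above the threshold.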

To train DiagnosisGPT, the researchers created a dataset of over 48,000 synthetic patient cases based on medical encyclopedias. They used Yi-6B and Yi-34B as base models.

DiagnosisGPT beats Claude 3 Opus in benchmarks

In tests with public diagnostic datasets, DiagnosisGPT performed better than other large language models such as GPT-4 or Claude 3 Opus. It achieved an accuracy of up to 76% on two datasets. It also achieved the best results on the new DxBench dataset with 1,148 cases, which the team created using real patient data. The probabilities displayed by DiagnosisGPT also correlate with the accuracy of the diagnosis: if the displayed probability is above 55%, the diagnosis is correct in over 90% of cases.
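A claim of this kind can be checked by looking only at the cases where the displayed probability exceeds a threshold and measuring how often the diagnosis was right. The following Python sketch shows that calculation. The function name `accuracy_above` and the toy results are assumptions for illustration and are not the researchers' evaluation code or data.

```python
def accuracy_above(results, threshold):
    """Accuracy among cases whose displayed probability exceeds the threshold.

    `results` is a list of (probability, is_correct) pairs.
    Returns None if no case exceeds the threshold.
    """
    selected = [correct for probability, correct in results if probability > threshold]
    if not selected:
        return None
    return sum(selected) / len(selected)


# Hypothetical toy results, made up for this example.
toy_results = [
    (0.92, True), (0.81, True), (0.60, True),
    (0.58, False), (0.40, False), (0.35, True),
]

print(accuracy_above(toy_results, 0.55))  # accuracy on the confident cases only
print(accuracy_above(toy_results, 0.0))   # accuracy on all cases
```

If the displayed probabilities are informative, the first number should be higher than the second, as it is for this toy data.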

By adjusting the probability threshold, the trade-off between speed and accuracy can be controlled: the higher the required probability, the more questions the system asks to become more certain, which takes more time.
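The effect of the threshold can be sketched in a few lines of Python. The example assumes a hypothetical sequence of top-candidate probabilities, one value before any follow-up question and one after each further question, and counts how many questions are needed before the threshold is reached. The function name and the numbers are invented for illustration.

```python
def questions_until_confident(confidence_trajectory, threshold):
    """Count follow-up questions needed before confidence reaches the threshold.

    confidence_trajectory[0] is the confidence before any follow-up question;
    each later entry is the confidence after one more question.
    Returns None if the threshold is never reached.
    """
    for questions_asked, confidence in enumerate(confidence_trajectory):
        if confidence >= threshold:
            return questions_asked
    return None


# Hypothetical confidence values for a single patient case.
trajectory = [0.30, 0.45, 0.58, 0.72, 0.85]

for threshold in (0.4, 0.55, 0.8):
    print(threshold, questions_until_confident(trajectory, threshold))
```

With these made-up values, raising the threshold from 0.4 to 0.8 increases the number of follow-up questions from one to four, which is the speed-versus-accuracy trade-off described above.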

The researchers see CoD as a way to make AI-assisted diagnoses more practical through interpretability. Systems like DiagnosisGPT are intended to support doctors, not replace them; the final diagnosis should still be made by a human. At the same time, they point out that DiagnosisGPT is currently intended for research only, as there is a risk of misdiagnosis.

The models are available via GitHub.
