AI speedruns by reading the game manual

Using a game manual, an AI learned an old Atari game several thousand times faster than with older methods. This approach could be useful in other areas as well.

In March 2020, DeepMind scientists unveiled Agent57, the first deep reinforcement learning (RL)-trained model to outperform humans in all 57 Atari 2600 games.

For the Atari game Skiing, which is considered particularly difficult and requires the AI agent to avoid trees on a ski slope, Agent57 needed a full 80 billion training frames - at 30 frames per second, that would take a human nearly 85 years.

AI learns to game 6,000 times faster

In a new paper, "Read and Reap the Rewards," researchers from Carnegie Mellon University, Ariel University, and Microsoft Research show how this training time can be reduced to as little as 13 million frames - or five days.

The Read and Reward Framework uses human-written game instructions like the game manual as a source of information for the AI agent. According to the team, the approach is promising and could significantly improve the performance of RL algorithms on Atari games.

Extracting information, making inferences

The researchers cite the length of the instructions, which are often redundant, as a challenge. In addition, they say, much of the important information in the instructions is often implicit and only makes sense if it can be related to the game. An AI agent that uses instructions must therefore be able to process and reason about the information.

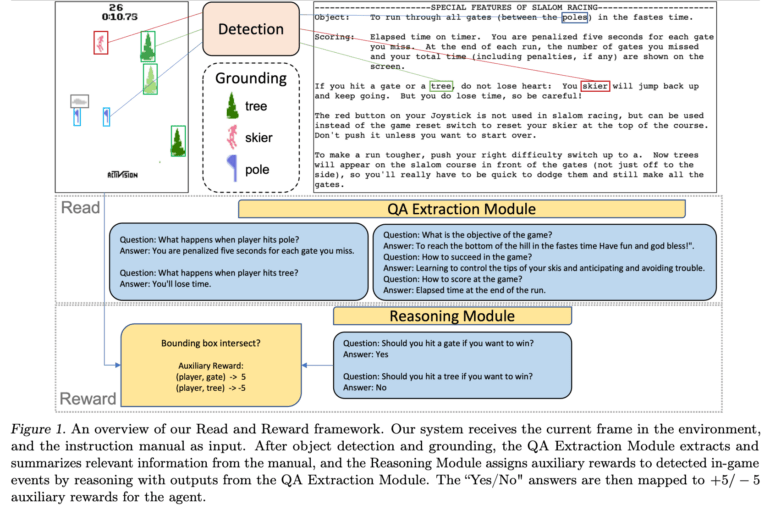

The framework, therefore, consists of two main components: the QA Extraction module and the Reasoning module. The QA Extraction module extracts and groups relevant information from the instructions by asking questions and extracting answers from the text. The Reasoning module then evaluates object-agent interactions based on this information and assigns help rewards for recognized events in the game.

These help rewards are then passed to an A2C-RL (Advantage Actor Critic) agent, which was able to improve its performance in four games in the Atari environment with sparse rewards. Such games often require complex behavior until the player is rewarded - so the rewards are "sparse", and an RL agent that proceeds only by trial and error does not receive a good learning signal.

Reap the rewards outside of Atari Skiing

By using the instructions, the number of training frames required can be reduced by a factor of 1,000, the authors write. In an interview with New Scientist, first author Yue Wu even speaks of a speed-up by a factor of 6,000. Whether the manual comes from the developers themselves or from Wikipedia is irrelevant.

According to the researchers, one of the biggest challenges is object recognition in Atari games. In modern games, however, this is not a problem because they provide object ground truth, they write. In addition, recent advances in multimodal video-language models suggest that more reliable solutions will soon be available that could replace the object recognition part of the current framework. In the real world, advanced computer vision algorithms could help.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.

Subscribe nowAI news without the hype

Curated by humans.

- Over 20 percent launch discount.

- Read without distractions – no Google ads.

- Access to comments and community discussions.

- Weekly AI newsletter.

- 6 times a year: “AI Radar” – deep dives on key AI topics.

- Up to 25 % off on KI Pro online events.

- Access to our full ten-year archive.

- Get the latest AI news from The Decoder.