Google researchers show real-time robot control via interactive language

A Google research team demonstrates that up to four visuomotor robotic arms can be precisely controlled in real time using natural language.

Advances in large language models (LLMs) have recently produced powerful text generators. But text generation is just one of many use cases for natural language processing: combined with other data in multimodal architectures, language understanding lets people instruct machines without writing code. Current text-to-x generators illustrate this, and Google is now applying the same idea to complex voice control of a robotic arm equipped with a video camera.

Interactive language for real-time commands to real-world robots

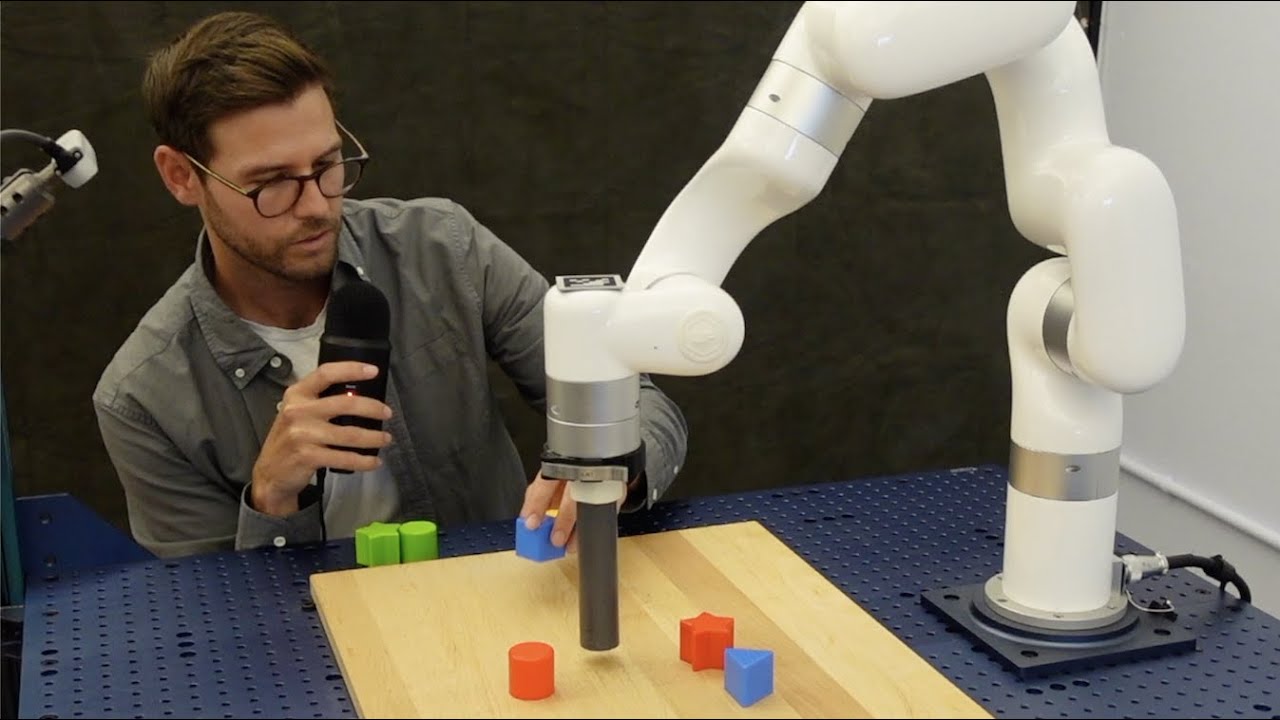

In the research paper "Interactive Language: Talking to Robots in Real Time," Google's research team presents a framework for building interactive robots that can be instructed in real time and in natural language. The robot acts solely on speech input combined with an RGB image (640 x 360 pixels) from the camera embedded in the arm.

The team uses a Transformer-based architecture for language-conditioned visuomotor control, which it trained with imitation learning on a dataset of hundreds of thousands of annotated motion sequences.
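To make the training idea concrete, here is a minimal sketch of imitation learning in its simplest form (behavioral cloning): the policy is fitted so that its predicted actions match the demonstrated ones. This is not the team's model. A tiny linear policy stands in for the Transformer, the three-number feature vector stands in for the combined image and language input, and all names and the synthetic demonstrations are assumptions made for illustration.

```python
import random


def predict(weights, features):
    """Linear stand-in policy: one weight row per action dimension."""
    return [sum(w * f for w, f in zip(row, features)) for row in weights]


def bc_loss(weights, demos):
    """Mean squared error between predicted and demonstrated actions."""
    total = 0.0
    for features, action in demos:
        pred = predict(weights, features)
        total += sum((p - a) ** 2 for p, a in zip(pred, action))
    return total / len(demos)


def train(demos, n_features, n_actions, lr=0.1, epochs=200):
    """Fit the policy to the demonstrations with plain stochastic gradient descent."""
    weights = [[0.0] * n_features for _ in range(n_actions)]
    for _ in range(epochs):
        for features, action in demos:
            pred = predict(weights, features)
            for i in range(n_actions):
                err = pred[i] - action[i]
                for j in range(n_features):
                    # Gradient of the squared error w.r.t. weights[i][j]
                    weights[i][j] -= lr * 2 * err * features[j]
    return weights


# Synthetic "demonstrations" (hypothetical): features -> 2D action
rng = random.Random(0)
expert_weights = [[0.5, -0.2, 0.1], [0.0, 0.3, -0.4]]
demos = []
for _ in range(50):
    features = [rng.uniform(-1, 1) for _ in range(3)]
    demos.append((features, predict(expert_weights, features)))

initial_loss = bc_loss([[0.0] * 3 for _ in range(2)], demos)
learned_weights = train(demos, n_features=3, n_actions=2)
final_loss = bc_loss(learned_weights, demos)
print(f"loss before: {initial_loss:.4f}, after: {final_loss:.2e}")
```

In the real system, the inputs would be camera frames and language instructions rather than hand-made feature vectors, and the model would be far larger, but the objective of matching recorded human-guided motion is the same in spirit.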

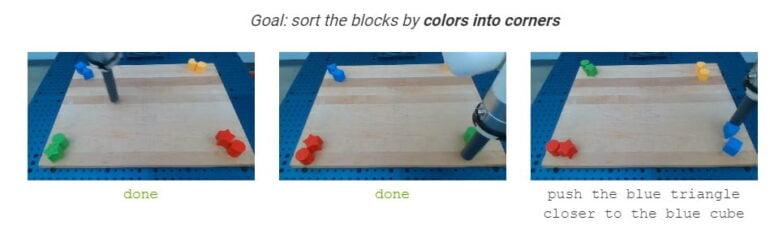

According to the researchers, the system can translate more than 87,000 natural language strings into robotic actions in real time with a success rate of about 93.5 percent. This includes complex commands such as "make a smiley face out of blocks" or sorting by color and shape.

Interactive human guidance allows the arm to achieve "complex goals with long horizons," the team writes. The human operator gives sequential commands until the robotic arm reaches the target. Commands can be given in different orders and with extensive vocabulary.
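The interaction pattern described here can be sketched as a simple control loop in which the policy always acts on the most recent instruction, so the operator can redirect the arm at any time step. The following is a toy illustration under stated assumptions, not the paper's implementation: `stub_policy`, the four-phrase vocabulary, and the 2D position standing in for the robot state are all hypothetical.

```python
# Hypothetical instruction vocabulary mapped to unit directions
DIRECTIONS = {
    "move left": (-1.0, 0.0),
    "move right": (1.0, 0.0),
    "move up": (0.0, 1.0),
    "move down": (0.0, -1.0),
}


def stub_policy(observation, instruction):
    """Stand-in for the learned policy: returns a small 2D step.

    A real policy would use the camera image (observation) as well;
    this stub ignores it and only looks at the instruction.
    """
    dx, dy = DIRECTIONS.get(instruction, (0.0, 0.0))
    return (0.1 * dx, 0.1 * dy)


def run_episode(commands_by_step, n_steps):
    """Run a fixed-rate loop; a new command replaces the old one when it arrives."""
    position = (0.0, 0.0)
    instruction = None
    trajectory = []
    for step in range(n_steps):
        # The operator may speak at any step; the latest command wins.
        if step in commands_by_step:
            instruction = commands_by_step[step]
        action = stub_policy(position, instruction)
        position = (position[0] + action[0], position[1] + action[1])
        trajectory.append(position)
    return trajectory


# The operator says "move right" at step 0 and corrects to "move up" at step 5.
trajectory = run_episode({0: "move right", 5: "move up"}, n_steps=10)
final_position = trajectory[-1]
print(final_position)
```

Because the loop never waits for a task to finish before accepting the next command, a long-horizon goal can be broken into short corrections given on the fly.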

In experiments, the research team also controlled four robot arms simultaneously by speech. According to the team, this shows that the earlier assumption that an operator must give a robot undivided attention in order to correct its behavior online can be relaxed.

A step towards more useful everyday robots

The research team sees the open-source Language-Table dataset, which comes with a benchmark for simulated multitask imitation learning, as a particular contribution to human-robot interaction research. According to the researchers, the dataset includes nearly 600,000 simulated and real-world robot motion sequences described in natural language, making it significantly larger than previously available datasets.

However, the researchers write that there are still numerous limitations to human-robot collaboration, such as intention recognition, nonverbal communication, and joint physical execution of tasks by humans and robots. Future research could extend the interactive language approach to useful real-time assistive robots, for example.

"We hope that our work can be useful as a basis for future research in capable, helpful robots with visuo-linguo-motor control," the team writes.

Author Pete Florence raised the prospect on Twitter that Google's robotics division will soon share the data, models and simulation environments used with the research community.
