Alibaba's new math-optimized AI models Qwen2-Math school other top LLMs on math tasks

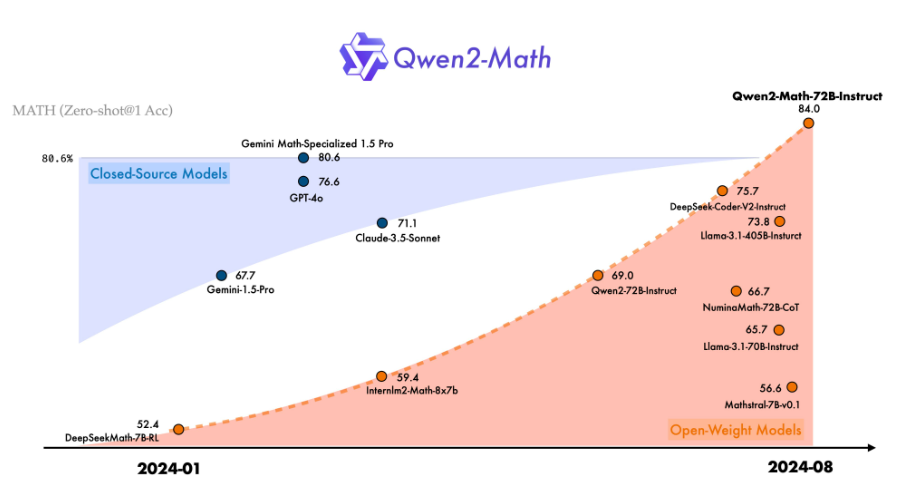

Alibaba Cloud has unveiled a new series of language models called Qwen2-Math, optimized for mathematical tasks. In benchmarks, these models perform better than general-purpose large language models like GPT-4 and Claude.

Qwen2-Math and Qwen2-Math-Instruct models are available in sizes ranging from 1.5 to 72 billion parameters. They're based on the general Qwen2 language models but underwent additional pre-training on a specialized math corpus.

This corpus includes high-quality mathematical web texts, books, code, exam questions, and math pre-training data generated by Qwen2. Alibaba claims this allows Qwen2-Math models to surpass the mathematical capabilities of general-purpose LLMs like GPT-4.

In benchmarks such as GSM8K, MATH, and MMLU-STEM, the largest model, Qwen2-Math-72B-Instruct, outperforms models like GPT-4, Claude-3.5-Sonnet, Gemini-1.5-Pro, and Llama-3.1-405B. It also achieves top scores in Chinese math benchmarks like CMATH, GaoKao Math Cloze, and GaoKao Math QA.

Alibaba reports that case studies with Olympiad-level math problems show Qwen2-Math can solve simpler math competition problems. However, the Qwen team emphasizes they "do not guarantee the correctness of the claims in the process."

To avoid skewing test results through overlaps between training and test data, the Qwen team says it decontaminated both its pre-training and post-training datasets.
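One common way to check for such overlaps is n-gram matching: a training sample is dropped if it shares a sufficiently long word sequence with any test item. The following is a minimal sketch of that general idea, not the Qwen team's actual procedure. The function names, the sample texts, and the window size `n=5` are illustrative assumptions.

```python
def ngrams(text: str, n: int) -> set[tuple[str, ...]]:
    """Return the set of lowercase word n-grams in a text."""
    tokens = text.lower().split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def decontaminate(train_samples: list[str], test_samples: list[str], n: int = 5) -> list[str]:
    """Keep only training samples that share no n-gram with any test sample."""
    test_grams: set[tuple[str, ...]] = set()
    for sample in test_samples:
        test_grams |= ngrams(sample, n)
    return [s for s in train_samples if not (ngrams(s, n) & test_grams)]


# Hypothetical data: the first training sample overlaps with the test item.
train = [
    "Natalia sold clips to 48 of her friends in April and half as many in May",
    "Compute the derivative of x squared plus three x with respect to x",
]
test = ["Natalia sold clips to 48 of her friends in April"]

clean = decontaminate(train, test, n=5)
print(clean)  # only the derivative problem remains
```

Real pipelines typically use longer windows and additional similarity checks, since short n-grams can flag harmless boilerplate phrasing.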

The Qwen2-Math models are available under the Tongyi Qianwen license on Hugging Face. A commercial license is required for products with more than 100 million monthly active users.

Currently, Qwen2-Math models mainly support English. The team plans to release bilingual models supporting English and Chinese soon, with multilingual models in development.

The quest for logical AI

Alibaba Cloud developed the Qwen model series. Researchers published the first generation of Qwen language models in August 2023. The company recently introduced Qwen2, a more powerful successor with improvements in programming, mathematics, logic, and multilingual capabilities.

Alibaba says it aims to further improve the models' ability to solve complex mathematical problems. But it's unclear if training language models solely on math problems will lead to fundamental improvements in logical capabilities.

Google DeepMind and likely OpenAI are focusing on hybrid systems that combine the reasoning capabilities of classical AI algorithms like AlphaZero with generative AI.

Google DeepMind recently presented AlphaProof, which combines a pre-trained language model with AlphaZero. Together with AlphaGeometry 2, the system reached silver-medal level at this year's International Mathematical Olympiad (IMO). But it remains to be seen how well the approach scales through reinforcement learning and how well it generalizes.
