AlphaEvolve is Google DeepMind's new AI system that autonomously creates better algorithms

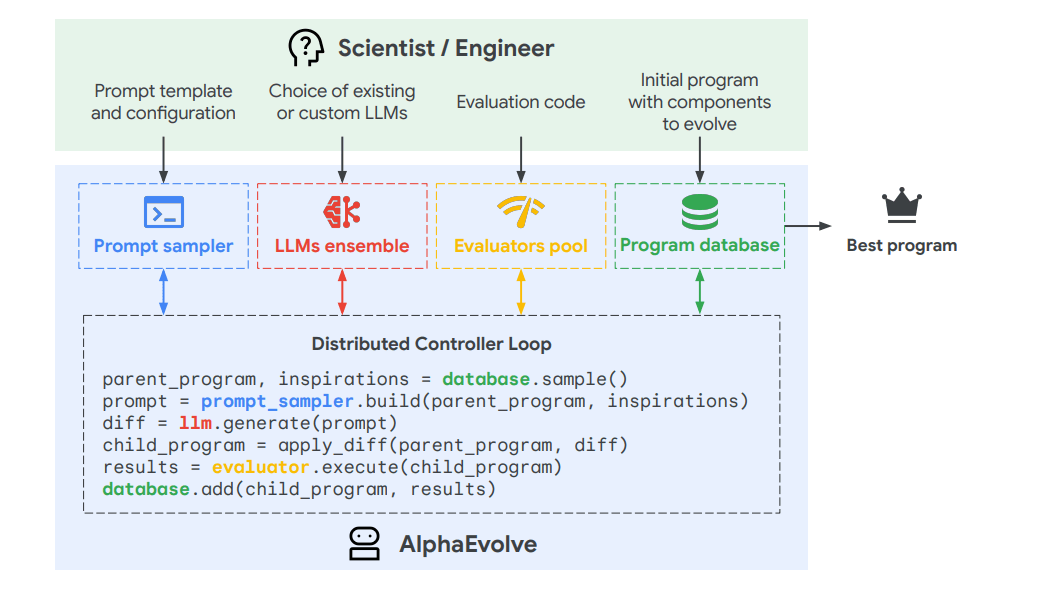

Google Deepmind has unveiled AlphaEvolve, a system that uses large language models to automate the discovery and refinement of algorithms. The aim is to develop new algorithms, systematically improve them, and adapt them for practical use.

AlphaEvolve works by combining two of Google's Gemini language models: Gemini Flash generates a broad range of program candidates, while Gemini Pro analyzes these proposals in depth.

Automated evaluators then run each candidate and score it against objective metrics such as accuracy and computational efficiency. An evolutionary algorithm selects the top-performing variants and feeds them back for further improvement in an ongoing loop.
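The generate, evaluate, and select cycle can be pictured with a toy loop. This is a minimal sketch under stated assumptions, not DeepMind's implementation: each "program" is reduced to three polynomial coefficients, `propose_variant` is a hypothetical random mutation standing in for the Gemini proposal step, and `evaluate` is an invented objective that plays the role of the automated evaluator.

```python
import random
from typing import List, Tuple

Candidate = List[float]

# Hypothetical toy task: recover the polynomial x^2 - 3x + 2 from samples.
SAMPLE_XS = [i / 10 for i in range(-20, 21)]


def evaluate(candidate: Candidate) -> float:
    """Stand-in automated evaluator (lower is better): mean squared error of
    c0 + c1*x + c2*x^2 against the target on a fixed grid."""
    total = 0.0
    for x in SAMPLE_XS:
        predicted = sum(c * x**k for k, c in enumerate(candidate))
        target = x * x - 3 * x + 2
        total += (predicted - target) ** 2
    return total / len(SAMPLE_XS)


def propose_variant(parent: Candidate, rng: random.Random) -> Candidate:
    """Hypothetical stand-in for the LLM proposal step: nudge one coefficient."""
    child = list(parent)
    i = rng.randrange(len(child))
    child[i] += rng.gauss(0.0, 0.5)
    return child


def evolve(generations: int = 200, population_size: int = 20,
           survivors: int = 5, seed: int = 0) -> Tuple[Candidate, List[float]]:
    """Generate, evaluate, select, repeat. Returns the best candidate and the
    best score recorded at each generation."""
    rng = random.Random(seed)
    population = [[rng.uniform(-1.0, 1.0) for _ in range(3)]
                  for _ in range(population_size)]
    history: List[float] = []
    for _ in range(generations):
        # Score every candidate and keep the top performers (elitist selection).
        ranked = sorted(population, key=evaluate)
        parents = ranked[:survivors]
        history.append(evaluate(parents[0]))
        # Refill the population with variants of the survivors.
        children = [propose_variant(rng.choice(parents), rng)
                    for _ in range(population_size - survivors)]
        population = parents + children
    best = min(population, key=evaluate)
    return best, history


best, history = evolve()
print(best, history[-1])
```

Because the survivors are always carried over, the best score can never get worse from one generation to the next; the real system replaces the random nudge with model-written code changes and the toy objective with task-specific evaluators.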

Deepmind describes AlphaEvolve as a "test-time compute agent"—an AI system that actively explores and evaluates new solutions while it runs. Like current reasoning models, AlphaEvolve uses test-time computation to improve its results through active problem-solving.

However, where "standard" reasoning models typically spend test-time compute on a step-by-step answer to a single prompt, AlphaEvolve runs an evolutionary loop that generates, tests, and refines entire algorithms over many iterations. The approach builds on the principles of test-time reasoning but extends them to automated algorithm discovery and optimization.

Deepmind says the progress AlphaEvolve achieves can also be distilled back into the underlying language models, compressing what the system learns into more efficient model versions.

Efficiency gains across Google infrastructure

Deepmind says AlphaEvolve is already in use across several parts of Google's infrastructure. In one example, the system developed a new resource-allocation heuristic for Borg, Google's cluster management system, which recovers on average 0.7 percent of Google's worldwide compute resources. Deepmind notes that the new solution is robust and easy for humans to read and understand.

AlphaEvolve has also helped optimize Gemini itself. By finding better ways to divide large matrix multiplications into smaller subproblems, the system sped up a key kernel in Gemini's architecture by 23 percent and cut Gemini's overall training time by one percent. In another case, AlphaEvolve optimized the FlashAttention kernel, a critical GPU component for running large language models, achieving a speedup of up to 32.5 percent in benchmark tests.
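Splitting one large matrix multiplication into blocks ("tiling") is the general kind of decomposition described here. The sketch below is a generic, hypothetical illustration in plain Python: the `tile` parameter stands in for the block sizes a kernel search might tune, and the code does not reproduce Gemini's kernel or the decomposition AlphaEvolve actually found.

```python
from typing import List

Matrix = List[List[float]]


def naive_matmul(a: Matrix, b: Matrix) -> Matrix:
    """Schoolbook reference: c[i][j] = sum over k of a[i][k] * b[k][j]."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def tiled_matmul(a: Matrix, b: Matrix, tile: int) -> Matrix:
    """Same product, computed block by block. `tile` is a hypothetical tuning
    knob that controls how the work is split into subproblems."""
    n, m, p = len(a), len(b), len(b[0])
    c = [[0.0] * p for _ in range(n)]
    for i0 in range(0, n, tile):
        for j0 in range(0, p, tile):
            for k0 in range(0, m, tile):
                # One subproblem: add the product of an A block and a B block
                # into the matching C block.
                for i in range(i0, min(i0 + tile, n)):
                    for k in range(k0, min(k0 + tile, m)):
                        a_ik = a[i][k]
                        for j in range(j0, min(j0 + tile, p)):
                            c[i][j] += a_ik * b[k][j]
    return c


a = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
b = [[7.0, 8.0], [9.0, 10.0], [11.0, 12.0]]
for tile in (1, 2, 3):
    assert tiled_matmul(a, b, tile) == naive_matmul(a, b)
```

Every tile size yields the same result; on real hardware the choice changes memory traffic and parallelism, which is why the way a multiplication is broken down can affect speed without affecting correctness.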

Optimizations like these are especially difficult because such kernels sit deep in the software stack and are rarely revisited by human engineers, the researchers write.

AlphaEvolve is designed to make this process much more efficient: optimizations that used to take weeks of manual work can now be completed in just a few days of automated experimentation. According to Google Deepmind, this leads to faster adaptation to new hardware and lower long-term development costs for AI systems.

Progress in mathematics

Google Deepmind also tested AlphaEvolve on more than 50 open mathematical problems. The system rediscovered the best known solutions in about 75 percent of cases and improved on them in 20 percent of cases.

One standout example is the kissing number problem, which asks for the maximum number of non-overlapping spheres of equal size that can touch a central sphere of the same size. In eleven dimensions, AlphaEvolve found a configuration of 593 spheres, setting a new lower bound.
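A candidate kissing configuration is easy to check automatically, which is the property that makes problems like this a fit for an evaluate-and-select loop. A minimal verifier, assuming unit spheres with the central sphere at the origin (so each touching sphere's center lies at distance 2 from the origin and any two centers must be at least 2 apart), might look like this; the function name and tolerance are illustrative choices, not DeepMind's tooling.

```python
import itertools
import math
from typing import List, Sequence

Point = Sequence[float]


def is_kissing_configuration(centers: List[Point], tol: float = 1e-9) -> bool:
    """True if unit spheres centred at `centers` all touch a unit sphere at the
    origin (centre distance 2) and no two overlap (pairwise centre distance at
    least 2), up to a floating-point tolerance."""
    for c in centers:
        if abs(math.hypot(*c) - 2.0) > tol:
            return False
    for p, q in itertools.combinations(centers, 2):
        if math.dist(p, q) < 2.0 - tol:
            return False
    return True


# 2 dimensions: six coins around a central coin (hexagonal arrangement).
hexagon = [[2 * math.cos(k * math.pi / 3), 2 * math.sin(k * math.pi / 3)]
           for k in range(6)]

# 3 dimensions: 12 spheres at the vertices of a cuboctahedron,
# i.e. all arrangements of (±sqrt(2), ±sqrt(2), 0).
s = math.sqrt(2)
cuboctahedron = []
for zero_axis in range(3):
    for sx in (1, -1):
        for sy in (1, -1):
            v = [sx * s, sy * s]
            v.insert(zero_axis, 0.0)
            cuboctahedron.append(v)

print(is_kissing_configuration(hexagon))        # True
print(is_kissing_configuration(cuboctahedron))  # True
```

A tolerance-based check like this is only a sanity test; a claimed new bound such as the 593-sphere configuration would need to be verified exactly rather than within a floating-point tolerance.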

In algorithmic mathematics, AlphaEvolve found a way to multiply complex-valued 4x4 matrices using just 48 scalar multiplications, one fewer than the 49 required when Strassen's classic 1969 algorithm is applied recursively. While its predecessor AlphaTensor focused exclusively on matrix multiplication, AlphaEvolve is designed for a much broader range of algorithmic challenges.
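Applied recursively, Strassen's 1969 scheme needs 49 scalar multiplications for a 4x4 product: the matrix splits into 2x2 blocks, Strassen needs 7 block products instead of 8, and each block product is itself a 2x2 multiplication needing 7 scalar multiplications, so 7 × 7 = 49 versus 4³ = 64 for the schoolbook method. The sketch below implements textbook recursive Strassen with a multiplication counter to confirm that baseline for complex-valued 4x4 matrices; it does not reproduce AlphaEvolve's 48-multiplication algorithm.

```python
from typing import List, Tuple

Matrix = List[List[complex]]


def add(x: Matrix, y: Matrix) -> Matrix:
    return [[p + q for p, q in zip(rx, ry)] for rx, ry in zip(x, y)]


def sub(x: Matrix, y: Matrix) -> Matrix:
    return [[p - q for p, q in zip(rx, ry)] for rx, ry in zip(x, y)]


def split(m: Matrix) -> Tuple[Matrix, Matrix, Matrix, Matrix]:
    """Cut an n x n matrix (n even) into four n/2 x n/2 blocks."""
    h = len(m) // 2
    return ([r[:h] for r in m[:h]], [r[h:] for r in m[:h]],
            [r[:h] for r in m[h:]], [r[h:] for r in m[h:]])


def join(c11: Matrix, c12: Matrix, c21: Matrix, c22: Matrix) -> Matrix:
    """Inverse of split: reassemble four blocks into one matrix."""
    return ([r1 + r2 for r1, r2 in zip(c11, c12)] +
            [r1 + r2 for r1, r2 in zip(c21, c22)])


def strassen(a: Matrix, b: Matrix) -> Tuple[Matrix, int]:
    """Multiply n x n matrices (n a power of two) with Strassen's scheme.
    Returns the product and the number of scalar multiplications used."""
    if len(a) == 1:
        return [[a[0][0] * b[0][0]]], 1
    a11, a12, a21, a22 = split(a)
    b11, b12, b21, b22 = split(b)
    # Seven block products instead of the schoolbook eight.
    m1, n1 = strassen(add(a11, a22), add(b11, b22))
    m2, n2 = strassen(add(a21, a22), b11)
    m3, n3 = strassen(a11, sub(b12, b22))
    m4, n4 = strassen(a22, sub(b21, b11))
    m5, n5 = strassen(add(a11, a12), b22)
    m6, n6 = strassen(sub(a21, a11), add(b11, b12))
    m7, n7 = strassen(sub(a12, a22), add(b21, b22))
    c11 = add(sub(add(m1, m4), m5), m7)
    c12 = add(m3, m5)
    c21 = add(m2, m4)
    c22 = add(add(sub(m1, m2), m3), m6)
    return join(c11, c12, c21, c22), n1 + n2 + n3 + n4 + n5 + n6 + n7


# Complex-valued 4x4 example with small integer parts, so the arithmetic is exact.
A = [[complex(i + j, i - j) for j in range(4)] for i in range(4)]
B = [[complex(i * j % 3, j - i) for j in range(4)] for i in range(4)]
product, count = strassen(A, B)
print(count)  # 49 = 7 * 7, versus 64 for the schoolbook method
```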

According to Deepmind, these results indicate that AlphaEvolve is capable of more than just replicating existing solutions; it can also discover new approaches in specialized areas of computer science and mathematics.

Where AlphaEvolve fits—and where it doesn't (yet)

AlphaEvolve is best suited for problems that can be expressed algorithmically and evaluated automatically. Deepmind sees application potential in areas like materials research, drug development, and industrial process optimization. The company is also working on a user-friendly interface and an early access program for academic institutions.

There are limits, though. For problems that can't be reliably assessed by automated tests—such as those requiring real-world experiments—AlphaEvolve is less effective. Deepmind says that in the future, language models could provide initial qualitative assessments before more structured evaluation takes place, and the company is already exploring these hybrid approaches.

AlphaEvolve builds on FunSearch, Deepmind's earlier system introduced in 2023, but goes much further. FunSearch found new results for problems such as the cap set problem in mathematics and better heuristics for online bin packing; AlphaEvolve is designed to create complete, practical algorithms for a much broader range of challenges.
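The bin-packing setting can be pictured as a fixed packing loop plus one small scoring function that the search is allowed to rewrite. The sketch below assumes that framing: `pack_online` places each arriving item into the feasible bin with the highest score, and `best_fit_priority` is the classic hand-written best-fit rule used as a baseline, not a heuristic that FunSearch or AlphaEvolve discovered. All names are hypothetical.

```python
from typing import Callable, List

# Hypothetical signature: score a bin with `remaining` free space for `item`.
Priority = Callable[[float, float], float]


def best_fit_priority(item: float, remaining: float) -> float:
    """Baseline heuristic: prefer the bin the item fills most tightly."""
    return -(remaining - item)


def pack_online(items: List[float], capacity: float,
                priority: Priority) -> List[List[float]]:
    """Place each arriving item into the feasible bin with the highest
    priority score, opening a new bin when no existing bin fits."""
    bins: List[List[float]] = []
    remaining: List[float] = []
    for item in items:
        feasible = [i for i, r in enumerate(remaining) if r >= item]
        if feasible:
            target = max(feasible, key=lambda i: priority(item, remaining[i]))
            bins[target].append(item)
            remaining[target] -= item
        else:
            bins.append([item])
            remaining.append(capacity - item)
    return bins


bins = pack_online([4, 8, 1, 4, 2, 1], capacity=10, priority=best_fit_priority)
print(bins)  # [[4, 4, 2], [8, 1, 1]]
```

In this framing, only the scoring function would be evolved while the loop stays fixed; the article's point is that AlphaEvolve aims to produce complete algorithms rather than a single small function like this one.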
