Amazon's new Trainium2 AI chip aims to take on Nvidia with 4x speed and 3x memory boost

Amazon is developing its third generation of AI processors and investing billions in AI company Anthropic, aiming to reduce its reliance on Nvidia's chips.

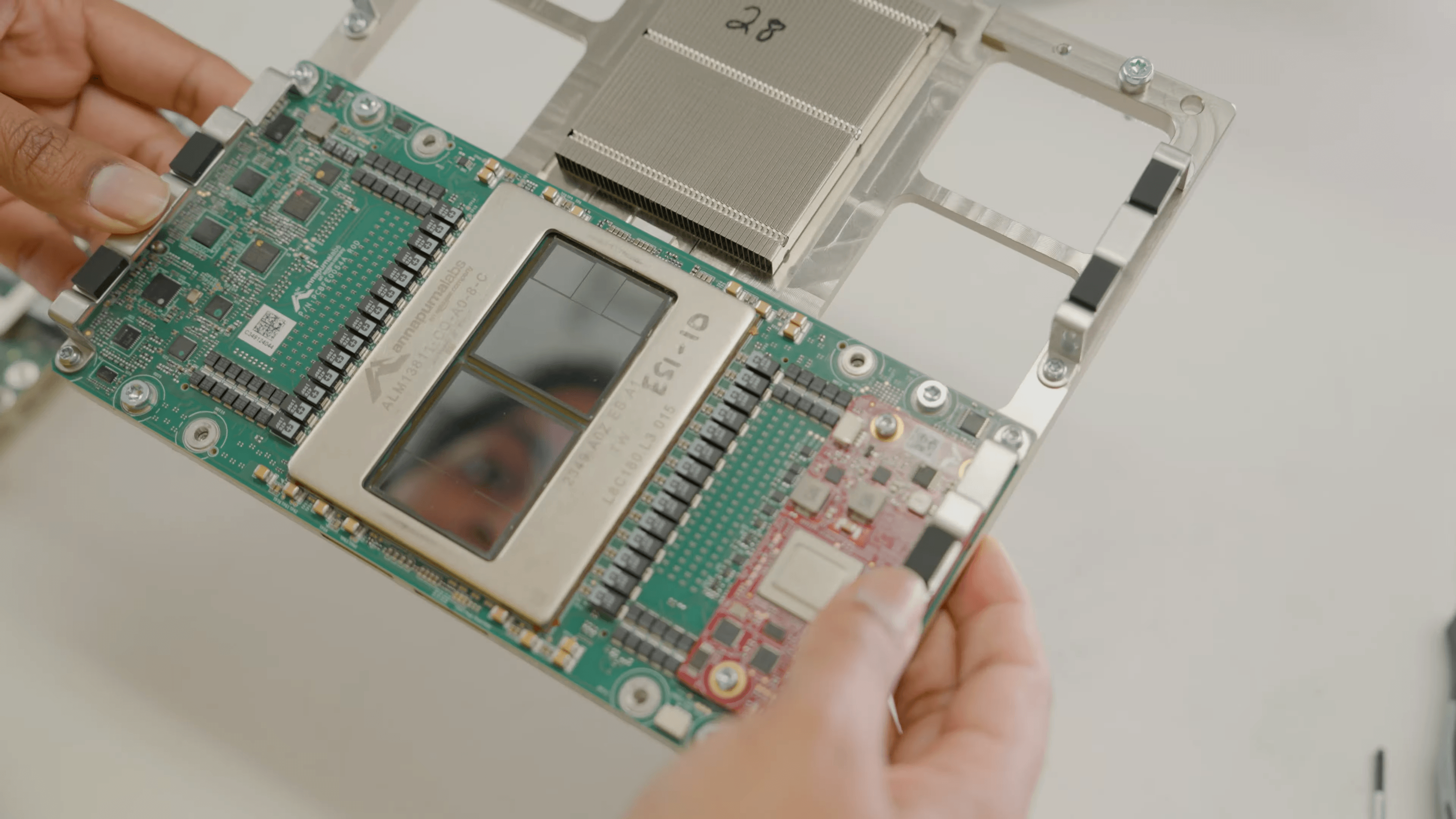

A team in Austin, Texas, is creating Amazon's next Trainium2 AI chip, Bloomberg reports. Amazon says the new processor will be four times faster than its predecessor and offer three times more memory. The company simplified the design by cutting the number of chips per unit from eight to two and replacing cables with circuit boards, making maintenance easier.

Software remains a challenge

While Nvidia offers mature tools that let customers start quickly, Amazon's Neuron SDK software package is still new. Even with more user-friendly software, switching from Nvidia to Amazon could require hundreds of hours of development time, according to Bloomberg.

To address this gap, Amazon is investing up to $8 billion in Anthropic. In exchange, Anthropic will use more Amazon chips and work directly with AWS teams at Annapurna Labs, Amazon's chip division.

"We’re particularly impressed by the price-performance of Amazon Trainium chips," says Tom Brown, Anthropic’s chief compute officer. "We’ve been steadily expanding their use across an increasingly wide range of workloads."

https://www.youtube.com/watch?v=4nfkonjjICo

Cloud business drives Amazon's AI strategy

The Anthropic partnership goes beyond just chip development. As part of the deal, Anthropic will use Amazon Web Services as its primary cloud platform and run its AI models on Amazon's custom Trainium and Inferentia processors.

The investment could pay off for Amazon shareholders. Cloud growth tends to drive up Amazon's valuation, so if Anthropic helps significantly expand Amazon's cloud business, Amazon's market value could rise, provided the AI sector maintains its momentum.

As for Amazon's own AI development, things seem stuck in research mode. While rumors suggested its "Olympus" AI model was supposed to surpass Anthropic's systems by mid-2024, Amazon hasn't made any official announcements about the model yet.
