Amazon's Nova 2 undercuts OpenAI and Google on price but still trails top-tier models

Key Points

- Amazon introduced the Trainium3 AI accelerator, which can scale in clusters of up to one million chips to lower compute costs. The next generation, Trainium4, will add support for Nvidia's NVLink.

- The new Nova 2 model family is priced to undercut competitors. Analyses show it is cheaper to run than models from OpenAI or Google, though it still falls short of their top performance.

- Amazon is also pushing its AI tools toward more autonomous behavior and is releasing specialized agents for developers, security, and DevOps that can handle tasks with limited supervision.

Amazon used re:Invent 2025 to focus on three things: scaling its in-house hardware, lowering model costs with the Nova 2 lineup, and moving its AI tools beyond simple assistant-style workflows toward more autonomous behavior.

To reduce reliance on Nvidia and lower AI workload costs, AWS introduced the third generation of its accelerators. The Trainium3 UltraServer, built on a 3-nanometer process, is designed to offer four times the speed and memory of the previous version with a 40 percent improvement in energy efficiency.

The larger shift is scale. AWS now supports clusters of up to one million Trainium3 chips, an approach already used by customers such as Anthropic.

Amazon also signaled a change for the next generation. The upcoming Trainium4 will support Nvidia's NVLink Fusion, enabling hybrid systems where Amazon's lower-cost hardware can work directly with Nvidia GPUs. This reflects the continued dominance of Nvidia's CUDA ecosystem and gives AWS more flexibility in mixed environments.

Price war with Nova 2

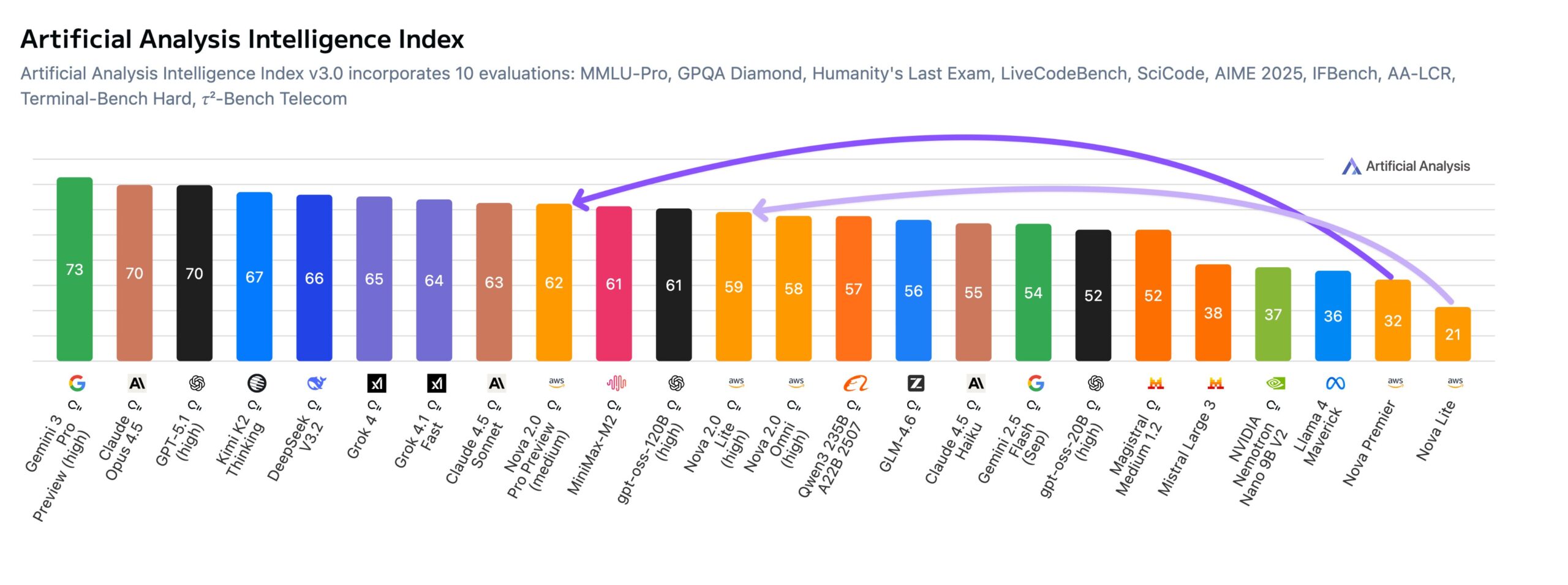

Pricing is central to the Nova 2 strategy. Amazon introduced the Nova 2 Lite and Nova 2 Pro families and continues to position them through direct price-performance comparisons with OpenAI and Google. A test series from Artificial Analysis showed that Nova 2.0 Pro (Preview) gained 30 points in the firm's internal index and now sits near the top group, though it still trails the leading models from other vendors.

The list prices are where Nova 2 stands out. Nova 2.0 Pro is listed at 1.25 dollars per million input tokens and 10 dollars per million output tokens. According to Artificial Analysis, running its benchmark suite cost about 662 dollars with Nova 2.0 Pro, compared to 817 dollars for Claude 4.5 Sonnet and 1,201 dollars for Google Gemini 3 Pro.
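
To make these figures concrete, the short Python sketch below turns the listed per-token prices into a cost for a given workload and expresses the reported benchmark costs as relative savings. The prices and benchmark totals come from the figures above. The function names and the example token counts are hypothetical and chosen only for illustration; they are not part of any AWS or Artificial Analysis tooling.

```python
# Nova 2.0 Pro list prices quoted in the article (USD per one million tokens).
INPUT_PRICE_PER_M = 1.25
OUTPUT_PRICE_PER_M = 10.00

# Benchmark run costs reported by Artificial Analysis (USD).
BENCHMARK_COST = {
    "Nova 2.0 Pro": 662,
    "Claude 4.5 Sonnet": 817,
    "Gemini 3 Pro": 1201,
}


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """Cost in USD for a workload at the quoted Nova 2.0 Pro prices."""
    total = input_tokens * INPUT_PRICE_PER_M + output_tokens * OUTPUT_PRICE_PER_M
    return total / 1_000_000


def savings_vs(baseline: str, model: str = "Nova 2.0 Pro") -> float:
    """Percentage by which `model` was cheaper than `baseline` in the benchmark run."""
    return 100 * (1 - BENCHMARK_COST[model] / BENCHMARK_COST[baseline])


if __name__ == "__main__":
    # Hypothetical workload: 2M input tokens and 500k output tokens.
    print(f"Workload cost: ${request_cost(2_000_000, 500_000):.2f}")
    for baseline in ("Claude 4.5 Sonnet", "Gemini 3 Pro"):
        print(f"Cheaper than {baseline} by {savings_vs(baseline):.1f}%")
```

Note that the benchmark totals depend on how many tokens each model consumes during the run, not only on its list price, so the savings percentages describe that specific test series rather than a general price ratio.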

Amazon also introduced Nova Forge, which allows companies to include their own data during the model's training phase rather than relying solely on fine-tuning. Reddit used the service to build a moderation model that, according to CTO Chris Slowe, better reflects the platform's specific dynamics.

In speech processing, Amazon released the speech-to-speech model Nova Sonic 2.0. Benchmarks from Artificial Analysis place its reasoning performance between the current market leaders. In Big Bench Audio, a dataset of 1,000 complex audio questions, Nova Sonic 2.0 reached an accuracy of 87.1 percent, placing it second behind Google's Gemini 2.5 Flash Native Audio Thinking and ahead of OpenAI's GPT Realtime.

Latency is mixed. The model produces its first audio response in an average of 1.39 seconds, more than two seconds faster than Google's higher-performing model but slower than OpenAI's latest systems. It supports bidirectional audio streaming in five languages, including German, and adapts its speaking style to match the rhythm and emphasis of the input.

From assistant to autonomous agent

At the application level, AWS is shifting from assistant-style tools toward more autonomous agents. The company expects many future AI use cases to involve systems that can complete tasks without constant prompting.

AWS introduced three agents meant to integrate directly into developer workflows: Kiro, which maintains context across sessions and repositories and can work on tasks such as bug triage, code coverage, and preparing pull requests; the AWS Security Agent, which handles security checks and penetration testing; and the AWS DevOps Agent, which monitors infrastructure metrics through tools such as CloudWatch and Datadog and can perform its own root-cause analysis during outages.

AWS says these agents can learn a company's internal patterns over several months. Whether they actually reduce workload or create new oversight challenges will become clear once they are used in production settings.
