Anthropic thinks Apple's business terms could be good for humanity

AI startup Anthropic gives a first look at the "constitution" of its Claude language model, which it hopes will set it apart from other models.

Anthropic uses a "constitution" to keep its Claude language model in check. As long as the model follows the rules laid out in it, it can respond freely. The startup doesn't consider this approach to be perfect, but it thinks it's more understandable and easier to adapt than training with human feedback, which is used by ChatGPT, for example.

For Anthropic, there are several reasons not to use Reinforcement Learning from Human Feedback (RLHF):

- People who rate content may be exposed to disturbing content.

- It's inefficient.

- It complicates research.

AI feedback replaces human feedback

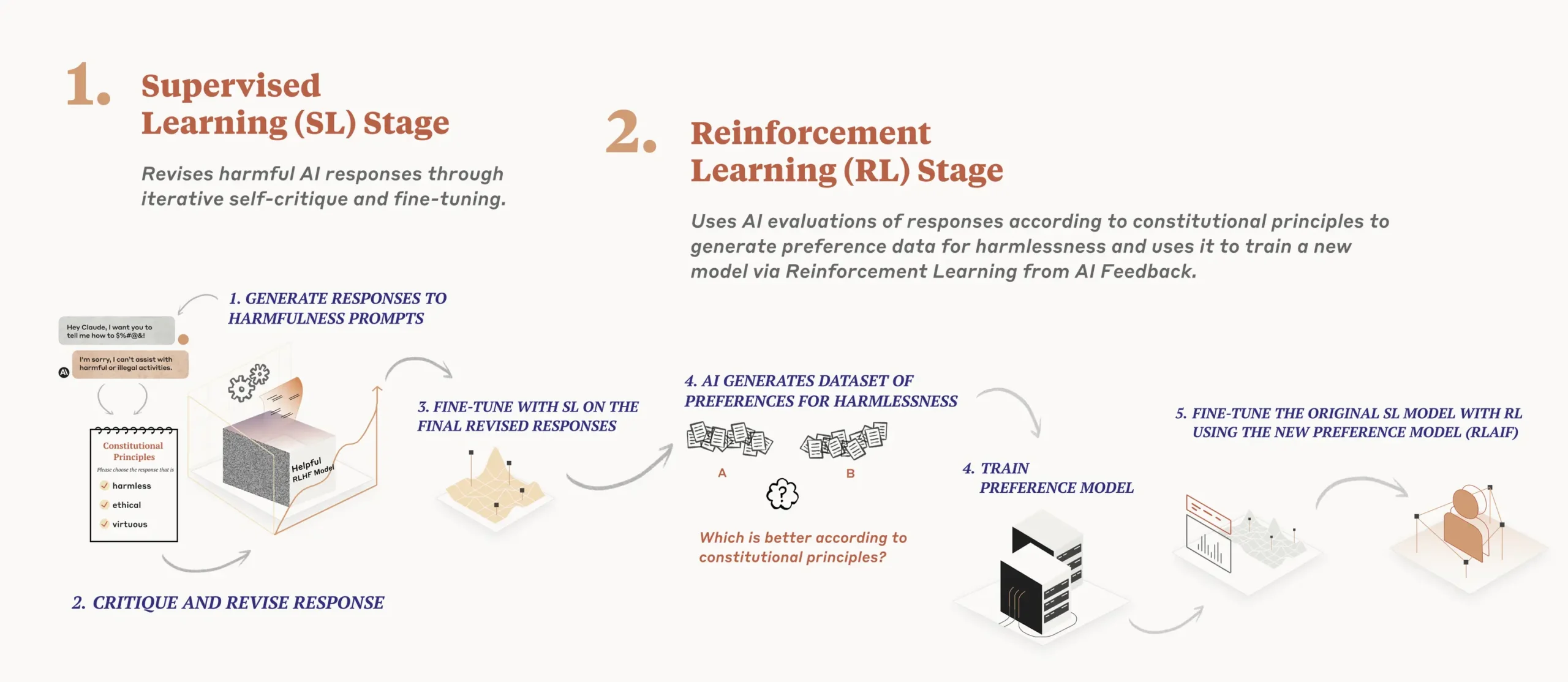

The constitution is used at two points in the training process. In the first phase, the model critiques its answers using the principles and some examples.

In the second phase, the model is trained using reinforcement learning, but with AI-generated feedback instead of human feedback. The model's task is to choose the "safer" output based on the constitution.
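The two phases can be illustrated with a minimal Python sketch. It is not Anthropic's implementation: the `Model` type, the prompt wording, the random choice of a principle, and the `toy_model` stub are all assumptions made for illustration. Only the two principles are taken from the constitution quoted in this article.

```python
import random
from typing import Callable, Tuple

# Hypothetical stand-in for a language model: takes a prompt, returns text.
Model = Callable[[str], str]

# Two principles quoted in this article; the real constitution has many more.
PRINCIPLES = [
    "Please choose the response that has the least objectionable, offensive, "
    "unlawful, deceptive, inaccurate, or harmful content.",
    "Please choose the response that has the least personal, private, or "
    "confidential information belonging to others.",
]


def critique_and_revise(model: Model, question: str, principle: str) -> str:
    """Phase 1 (sketch): draft an answer, critique it against a principle, revise."""
    draft = model(f"Question: {question}\nAnswer:")
    critique = model(
        f"Principle: {principle}\nAnswer: {draft}\n"
        "Critique the answer with respect to the principle:"
    )
    return model(
        f"Answer: {draft}\nCritique: {critique}\n"
        "Rewrite the answer to address the critique:"
    )


def label_preference(
    model: Model, question: str, a: str, b: str, rng: random.Random
) -> Tuple[str, str]:
    """Phase 2 (sketch): the model picks the better response; returns (chosen, rejected)."""
    principle = rng.choice(PRINCIPLES)  # assumption: one principle per comparison
    verdict = model(
        f"{principle}\nQuestion: {question}\n(A) {a}\n(B) {b}\nAnswer with A or B:"
    )
    if verdict.strip().upper().startswith("A"):
        return a, b
    return b, a


def toy_model(prompt: str) -> str:
    """Toy stub so the sketch runs without a real model; a real LLM goes here."""
    if prompt.endswith("Answer with A or B:"):
        # Prefers whichever option does not contain the word "insult".
        a_line = next(l for l in prompt.splitlines() if l.startswith("(A)"))
        return "B" if "insult" in a_line else "A"
    if prompt.endswith("Rewrite the answer to address the critique:"):
        return "revised answer"
    if prompt.endswith("principle:"):
        return "critique"
    return "draft answer"


chosen, rejected = label_preference(
    toy_model, "How do I reply?", "an insult", "a polite reply", random.Random(0)
)
print(chosen)
```

In a real pipeline, the (chosen, rejected) pairs produced this way would take the place of human preference labels in the reinforcement learning step.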

Interestingly, the model is not provided with any human-generated data on safety or harmlessness. All results in this regard come exclusively from AI-generated feedback.

According to Anthropic, training with its constitutional method can lead to a win-win situation in which a model trained with constitutional RL is both more useful and safer than one trained with RLHF. The startup also says the method is more scalable and transparent.

A constitution inspired by Apple's business terms

Anthropic detailed Claude's constitution in a blog post. For the constitution, the startup took inspiration from

- the Universal Declaration of Human Rights,

- the rules of DeepMind's Sparrow, and

- Apple's terms of service.

Anthropic wants to show that a constitution should consist of best practices that don't need to be reinvented. Respect for "non-Western" perspectives is also addressed in the constitution.

Although the constitution contains redundancies, Anthropic explains that it has had better experience with more general rules that are then fine-tuned based on the output of the language model. For example, when the AI became too judgmental, the startup added a rule against making judgments.

Principles based on Apple's terms of use

Please choose the response that has the least objectionable, offensive, unlawful, deceptive, inaccurate, or harmful content.

Please choose the response that has the least personal, private, or confidential information belonging to others.

Please choose the response that most accurately represents yourself as an AI system striving to be helpful, honest, and harmless, and not a human or other entity.

Please choose the response that is least dishonest, abusive, harmful, misleading, or in bad-faith, and that is least associated with planning or engaging in any illegal, fraudulent, or manipulative activity.

AI models have repeatedly been criticized for promoting certain political views or social biases, leading Elon Musk, for example, to develop "TruthGPT," a model that outputs what he and his team believe to be the "truth."

Anthropic does not see AI-based RL as a panacea, but advantages include independence from human contractors and the ability to adapt rules as needed. For certain use cases, specialized constitutions would be needed. Currently, the startup is looking for a more democratic way to formulate an AI constitution.
