"Anything in Any Scene": AI framework inserts photorealistic objects into video

Key Points

- XPeng Motors has developed an AI system called "Anything in Any Scene" that inserts photorealistic objects into video sequences more realistically and accurately than previous methods.

- The framework takes into account occlusion, consistent anchoring, realistic lighting, and shadow casting to seamlessly embed objects into video scenes.

- Potential applications include film production, where the system could be a comparatively efficient and cost-effective alternative to real filming, and autonomous vehicle training, where it can simulate rare scenarios.

XPeng Motors introduces an AI system that can insert photorealistic objects into video sequences.

XPeng Motors, an electric vehicle company, has developed a new framework called "Anything in Any Scene" that inserts objects into video scenes more realistically and accurately than previous methods.

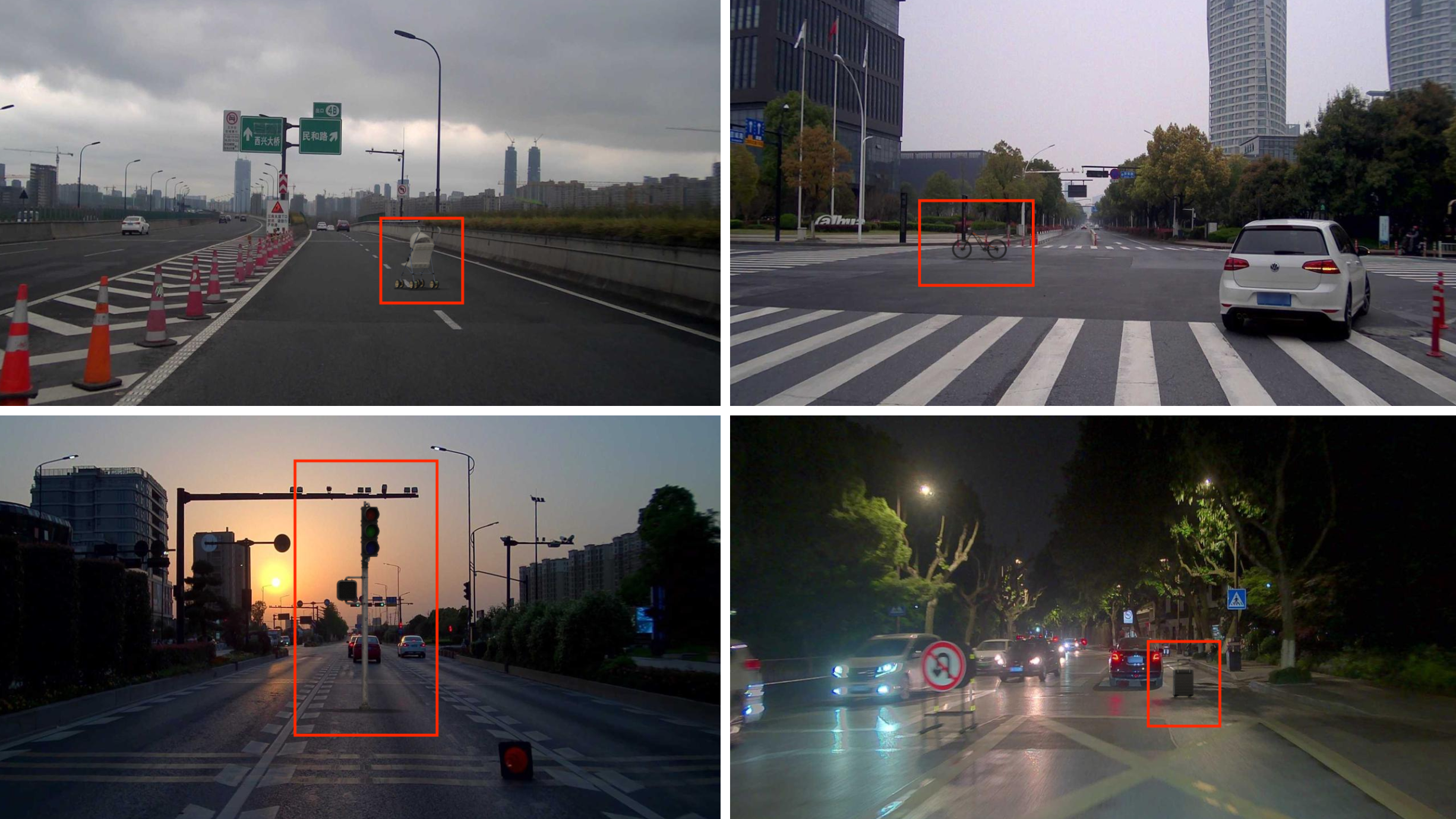

The Anything in Any Scene framework embeds objects into videos so that they match the color, texture, and general appearance of the real scenes captured in the footage. In the examples shown, the inserted objects can only be recognized as artificial on closer inspection. The method addresses the shortcomings of previous approaches, which are often restricted to specific settings and neglect factors such as lighting and realistic placement.

"Anything in Any Scene" provides photorealistic object integration and stabilization

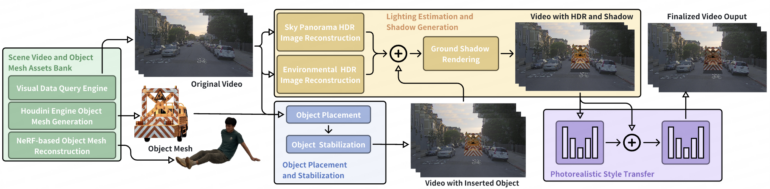

The framework places and stabilizes objects in each frame of the video, taking into account occlusion by other objects and ensuring consistent anchoring across successive frames. It also reconstructs high dynamic range (HDR) panoramic images from ordinary images to produce realistic lighting and shadows: it determines the position and brightness of key light sources, such as the sun in outdoor scenes, and then renders realistic shadows for the inserted object.
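To make the lighting step more concrete, here is a minimal sketch, assuming the HDR panorama is stored as an equirectangular grid of luminance values whose top row points straight up. It simply treats the single brightest pixel as the dominant light source (a simplification; the article does not describe how the framework actually locates light sources), converts that pixel into a direction vector, and projects a point on the object onto a flat ground plane to get a shadow offset. The names `dominant_light_direction` and `shadow_offset` are hypothetical and are not taken from the project's code.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def dominant_light_direction(pano: List[List[float]]) -> Vec3:
    """Hypothetical helper: unit vector toward the brightest pixel of an
    equirectangular luminance panorama (rows span elevation, columns azimuth)."""
    height, width = len(pano), len(pano[0])
    # Assumption: the single brightest pixel stands in for the dominant light (e.g. the sun).
    best_row, best_col = max(
        ((r, c) for r in range(height) for c in range(width)),
        key=lambda rc: pano[rc[0]][rc[1]],
    )
    # Row 0 points straight up (+90 deg), the last row straight down (-90 deg).
    elevation = math.pi / 2 - (best_row + 0.5) / height * math.pi
    # Column 0 is azimuth -180 deg, increasing to +180 deg at the right edge.
    azimuth = (best_col + 0.5) / width * 2 * math.pi - math.pi
    # Spherical -> Cartesian, with y as the up axis.
    return (
        math.cos(elevation) * math.sin(azimuth),
        math.sin(elevation),
        math.cos(elevation) * math.cos(azimuth),
    )


def shadow_offset(light_dir: Vec3, height: float) -> Tuple[float, float]:
    """Hypothetical helper: ground-plane (x, z) offset of the shadow cast by a
    point `height` units above the object's base, for a light above the horizon."""
    lx, ly, lz = light_dir
    if ly <= 0:
        raise ValueError("light must be above the horizon to cast a ground shadow")
    # Follow the ray from (0, height, 0) away from the light until it hits y = 0.
    t = height / ly
    return (-lx * t, -lz * t)


if __name__ == "__main__":
    # Toy 4x8 panorama with one bright "sun" pixel in the top row.
    pano = [[1.0] * 8 for _ in range(4)]
    pano[0][5] = 50.0
    sun = dominant_light_direction(pano)
    print(sun, shadow_offset(sun, 2.0))
```

Picking one brightest pixel keeps the sketch short but ignores extended light sources and sky illumination; a real renderer would use the full HDR panorama as an environment light.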

The final step of the framework is photorealistic style transfer. This step uses a network that transfers the style characteristics of the original video to the inserted object, adjusting its colors, lighting, and textures so that it blends seamlessly and realistically into the scene.
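The framework uses a learned network for this step. As a much simpler, non-learned stand-in that only illustrates the basic idea of pulling an object's colors toward those of the scene, the sketch below matches each RGB channel's mean and standard deviation to the surrounding frame, in the spirit of classic statistical color transfer. This is not the framework's method; the function name `match_color_stats` and the [0, 1] RGB pixel format are assumptions.

```python
from statistics import mean, pstdev
from typing import List, Tuple

Pixel = Tuple[float, float, float]  # RGB, each channel in [0, 1]


def match_color_stats(obj: List[Pixel], scene: List[Pixel]) -> List[Pixel]:
    """Hypothetical helper: shift and scale each RGB channel of the inserted
    object's pixels so its mean and standard deviation match the scene's."""
    channels = []
    for ch in range(3):
        o = [p[ch] for p in obj]
        s = [p[ch] for p in scene]
        o_mu, o_sd = mean(o), pstdev(o)
        s_mu, s_sd = mean(s), pstdev(s)
        # Avoid division by zero for a perfectly flat object channel.
        scale = s_sd / o_sd if o_sd > 0 else 1.0
        # Re-center and re-scale, then clamp back into the valid [0, 1] range.
        channels.append([min(1.0, max(0.0, (v - o_mu) * scale + s_mu)) for v in o])
    # Re-assemble per-pixel (r, g, b) tuples from the three channel lists.
    return list(zip(*channels))


if __name__ == "__main__":
    dark_object = [(0.2, 0.2, 0.2), (0.4, 0.4, 0.4)]
    warm_scene = [(0.5, 0.3, 0.6), (0.7, 0.5, 0.8)]
    print(match_color_stats(dark_object, warm_scene))
```

Because it only matches global color statistics, this stand-in cannot adapt texture or local lighting, which the article says the framework's network also adjusts.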

Video: XPeng Motors

According to the team, the framework could be used in film production, for example, as a comparatively efficient and cost-effective alternative to real filming. It can also simulate rare but important scenarios that are often underrepresented in real-world datasets, for example to advance the training of autonomous vehicles, where such edge cases play an important role.

More examples are available on the Anything in Any Scene project page, where the code is also set to be released soon.