AI image generation has made great strides recently, but image editing has lagged behind. That may be changing, as Apple is demonstrating a method that understands and executes complex text instructions for image editing.

Working with researchers at the University of California, Santa Barbara, Apple has developed a new open-source AI model that can edit images using natural language instructions. It's called "MGIE," which stands for Multimodal Large Language Models Guided Image Editing.

MGIE uses Multimodal Large Language Models (MLLMs) to interpret user commands and perform pixel-accurate image manipulation. MLLMs can process both text and images, and have already proven themselves in applications such as ChatGPT, which understands images using GPT-4V and generates new ones using DALL-E 3.

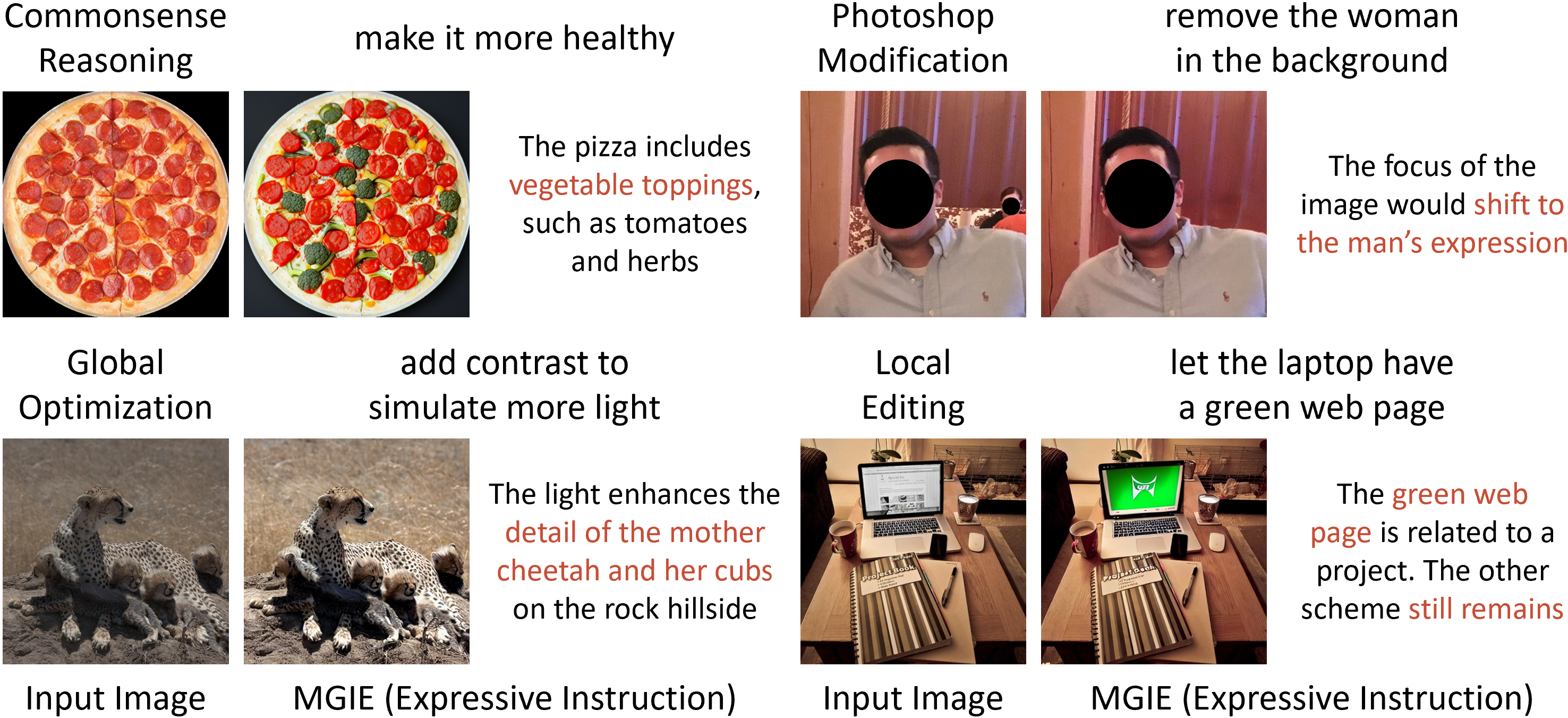

MGIE takes advantage of these capabilities and, for the first time, supports a comprehensive range of image editing tasks, from simple color adjustments to complex object manipulation.

Global and local manipulation

MGIE can perform both global manipulations, which affect the whole image, and local manipulations, which target a specific region. The model handles expressive instruction-based editing and can apply common Photoshop-like edits such as cropping, scaling, rotating, mirroring, and adding filters.

It also understands sophisticated instructions such as changing the background, adding or removing objects, and merging multiple images.

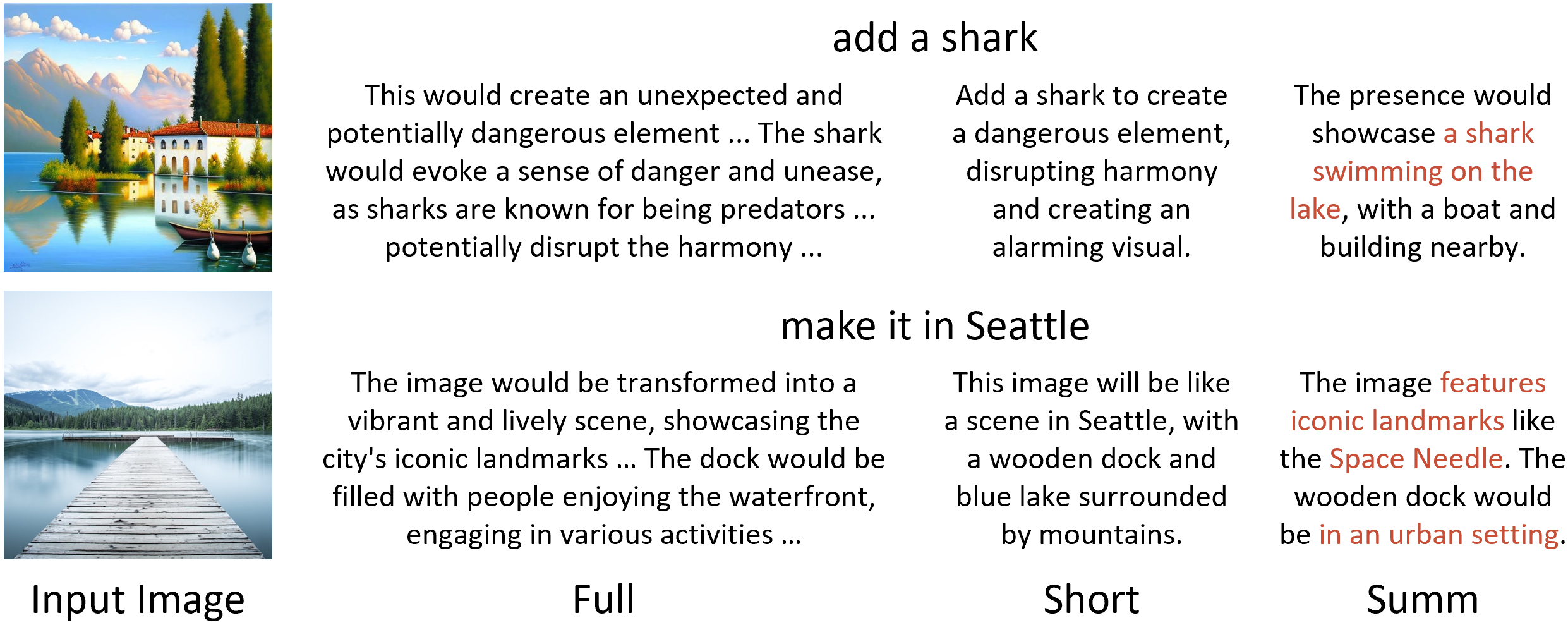

The method consists of two steps. The first addresses the problem that user instructions are often too short to describe the intended edit precisely.

The researchers therefore prefix the user's instruction with the prompt "what will this image be like if" and have a large language model formulate a very detailed description from it. In this way, a word like "desert" becomes associated with "sand dunes" or "cacti".

Since these descriptions are often too long, a pre-trained model is used to summarize them. In the second step, the model generates the edited image. OpenAI uses a similar approach with DALL-E 3 in ChatGPT.
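The first step can be illustrated with a short Python sketch. This is not MGIE's actual code: only the prefix string comes from the description above, while the function names and the two toy stand-ins for the language model and the summarizer are hypothetical and exist purely to show how the pieces fit together.

```python
from typing import Callable

# The prefix is quoted in the article; everything else is an illustrative assumption.
PREFIX = "what will this image be like if"


def build_expansion_prompt(instruction: str) -> str:
    """Wrap a terse user instruction in the prefix before sending it to an LLM."""
    cleaned = instruction.strip().rstrip(".")
    return f"{PREFIX} {cleaned}"


def derive_expressive_instruction(
    instruction: str,
    expand: Callable[[str], str],
    summarize: Callable[[str], str],
) -> str:
    """Step 1 of the described method: expand the instruction, then summarize it."""
    detailed = expand(build_expansion_prompt(instruction))
    return summarize(detailed)


def toy_expand(prompt: str) -> str:
    # Hypothetical stand-in for a large language model call.
    if "desert" in prompt:
        return (
            "The image would show sand dunes and cacti. "
            "The sky would be clear and the light warm."
        )
    return "The image would change as requested."


def toy_summarize(text: str) -> str:
    # Hypothetical stand-in for the pre-trained summarizer: keep the first sentence.
    return text.split(". ")[0].rstrip(".") + "."
```

With these stand-ins, `derive_expressive_instruction("make it look like a desert", toy_expand, toy_summarize)` returns `"The image would show sand dunes and cacti."`. In a real system, the two callables would wrap actual models, and the resulting text would guide the image generation in the second step.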

MGIE is available as an open-source project on GitHub. There is also a demo on Hugging Face.

Another building block in Apple's AI strategy

The release of MGIE underscores Apple's growing ambitions in AI research and development. Apple CEO Tim Cook recently confirmed the upcoming introduction of generative AI capabilities in iOS 18 and expressed his "incredible excitement" about Apple's work in this area. Research like the above should provide some insight into what Apple is planning in terms of new features for iOS.

InstructPix2Pix is a similar, older model for instruction-based image editing. However, this method, which is based on Stable Diffusion, was only intended for modifying individual objects in an image and performs much less accurately than MGIE.