Transformer co-creator Vaswani unveils high-performance Rnj-1 coding model

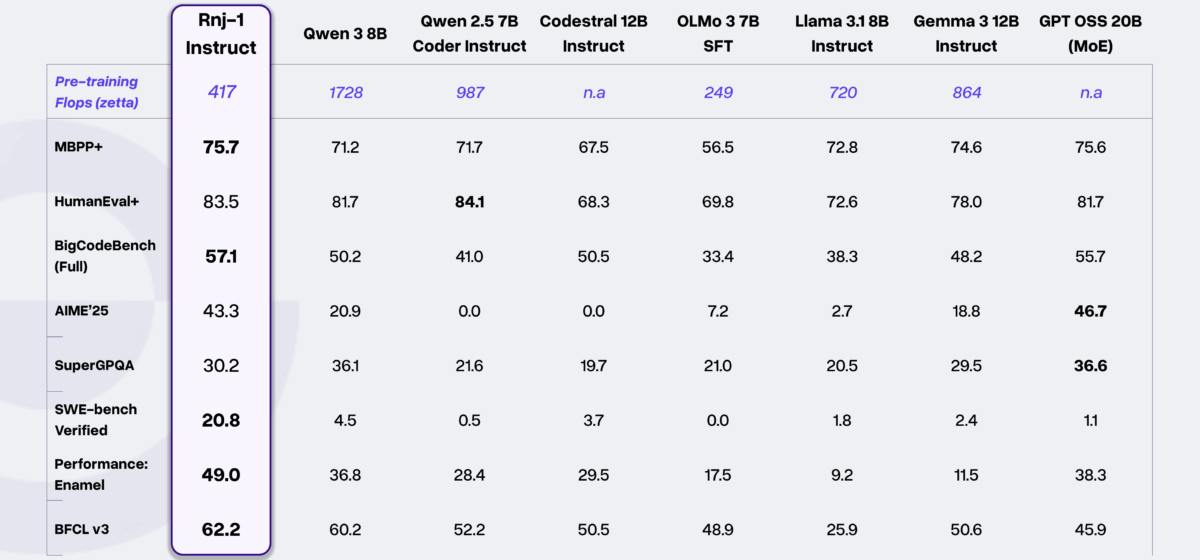

Essential AI's new open-source model, Rnj-1, outperforms significantly larger competitors on the "SWE-bench Verified" test. This benchmark is considered particularly challenging because it evaluates an AI's ability to independently solve real-world programming problems. Despite being a compact model with just eight billion parameters, Rnj-1 scores 20.8 points.

By comparison, similarly sized models such as Qwen 3 (8B, without reasoning) reach only 4.5 points in Essential AI's testing. The system was introduced by Ashish Vaswani, co-founder of Essential AI and co-author of the famous "Attention Is All You Need" paper that launched the Transformer architecture. Rnj-1 is also Transformer-based and uses the Gemma 3 architecture. According to the company, development focused primarily on better pre-training rather than on post-training methods like reinforcement learning. Using the Muon optimizer also lowered the computational cost of pre-training.

Source: EssentialAI