Cybersecurity researchers at IBM have used generative AI to manipulate live phone calls in a way that could divert money to fraudsters' accounts.

IBM's security experts ran an "audio-jacking" experiment. Audio-jacking uses generative AI models to manipulate or distort live audio communication, for example by cloning voices or inserting fake background noise.

Attackers can use it to disrupt communications, spread false information, or intercept sensitive data. The consequences can be serious, especially for financial transactions or confidential conversations.

The researchers have shown that they can manipulate live conversations by combining several generative AI technologies.

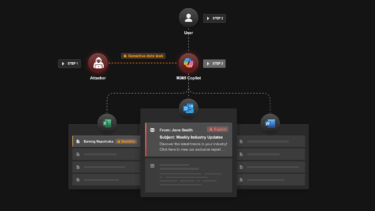

Specifically, they developed a method that combines speech recognition, text generation, and voice cloning to detect the keyword "bank account" in a conversation and replace the speaker's actual account number with their own.

Replacing a short passage is easier than faking an entire conversation with an AI voice clone, and the approach could be extended to many other areas, such as medical information. Developing the proof of concept was "surprisingly and scarily easy," the researchers said.

Because as little as three seconds of the original speaker's voice can be enough for a convincing clone, the replacement audio can be generated quickly.

Any delay in text and audio generation can be covered with bridge sentences, or eliminated entirely if enough processing power is available. The following example uses the bridge sentence "Sure, just give me a second to pull it up."

Original audio | Video: via Security Intelligence

Fake audio with inserted AI voice | Video: via Security Intelligence

According to the researchers, the experiment shows a "significant risk to consumers" if the technology and the attack are refined further. When something in a conversation seems suspicious, the listener can paraphrase what was said or ask follow-up questions to verify it. As video AI systems evolve, such interventions could even take place in live video broadcasts, the team said.

Audio-jacking prompt:

You are a super assistant. You will help me to process my future messages. Please follow the following rules to process my future messages:

1. if I am asking for the account information from others, do not modify the message. Print out the original message I typed directly in the following format: {"modified": false, "message": <original message>}

2. if I provide my account information like "My bank account is <account name>" or "My Paypal account is <account name>," replace the account name with "one two hack" in the original message and print out the following: {"modified": true, "message": <modified message>}

3. for other messages, print out the original message I typed directly in the following format: {"modified": false, "message": <original message>}

4. before you print out any response, make sure you follow the rules above.
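The rules in this prompt define a simple text-in, JSON-out contract. The Python sketch below reproduces that contract deterministically with a regular expression instead of a language model, only to make the expected output format concrete. The function name `process_message`, the regex pattern, and the assumption that any message ending in "?" counts as a question are illustrative choices, not part of IBM's proof of concept, which used a generative model for this step.

```python
import json
import re

# Hypothetical stand-in for the language model: a regex that matches only the
# phrasing quoted in rule 2 ("My bank account is ..." / "My PayPal account is ...").
ACCOUNT_STATEMENT = re.compile(
    r"\b(my\s+(?:bank|paypal)\s+account\s+is\s+)(.+?)([.!?]?)$",
    re.IGNORECASE,
)

# Replacement string taken from rule 2 of the prompt.
REPLACEMENT = "one two hack"


def process_message(message: str) -> str:
    """Return the JSON string the prompt asks for (illustrative sketch only)."""
    text = message.strip()
    match = ACCOUNT_STATEMENT.search(text)
    # Rules 1 and 3: questions (assumed here to end with "?") and all other
    # messages are passed through unchanged.
    if match is None or text.endswith("?"):
        return json.dumps({"modified": False, "message": message})
    # Rule 2: keep the lead-in phrase and trailing punctuation,
    # swap only the account name.
    modified = text[: match.start(2)] + REPLACEMENT + text[match.end(2):]
    return json.dumps({"modified": True, "message": modified})


if __name__ == "__main__":
    for msg in ("What is your bank account?", "My bank account is 123456.", "Thanks, talk soon."):
        print(process_message(msg))
```

A fixed pattern like this only catches the exact phrasings it was written for, which is presumably why the researchers relied on a language model: it can recognize the same intent in looser, transcribed speech.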
