Better watch what you're looking at: AI can reconstruct it in 3D

In the project "Seeing the World through Your Eyes," researchers at the University of Maryland, College Park, show that reflections in the human eye can be used to reconstruct 3D scenes. These reflections, they say, are an "underappreciated source of information about what the world around us looks like."

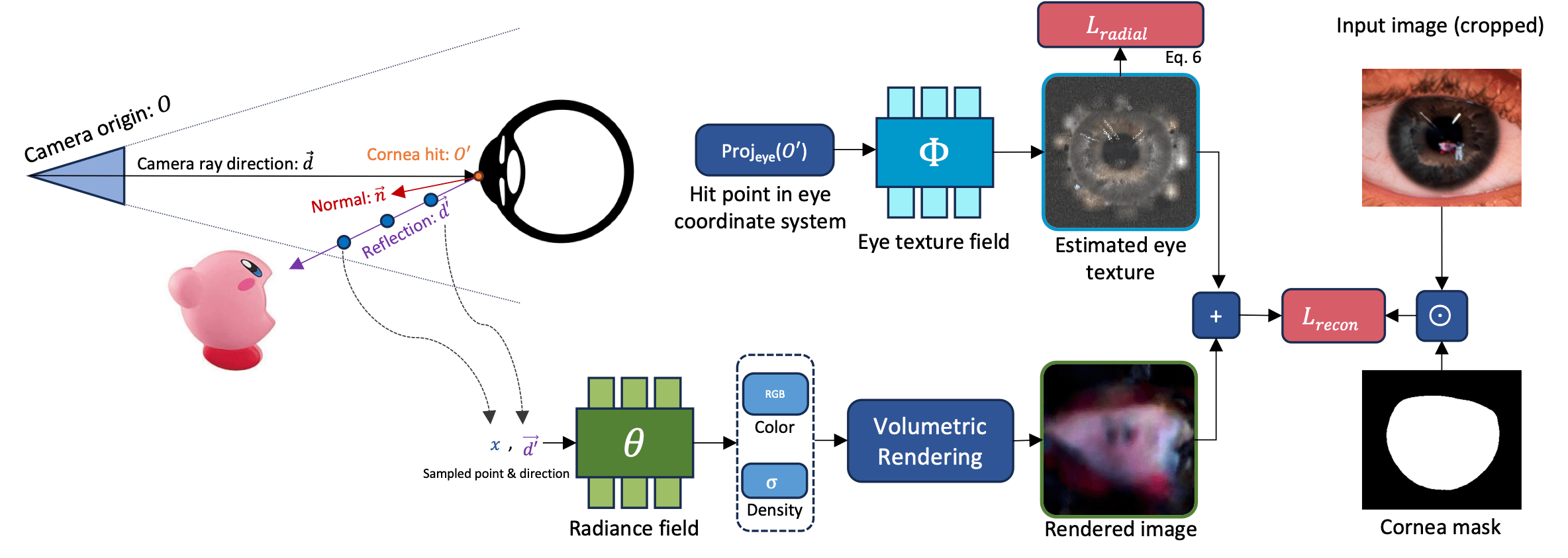

The paper uses a NeRF-based method to reconstruct a 3D scene from a reflection in the eye. This sounds straightforward, but is actually a complicated process that involves several factors, including accurately determining the direction of gaze and distinguishing reflections from patterns in the iris, the researchers say.

Calculating the outside world through the cornea

To calculate the outside world, the team uses the geometry of the cornea, which is fairly uniform in healthy adults. Based on the size of the cornea in an image, they can estimate the position and orientation of the eye.
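The size-to-distance reasoning can be illustrated with a simple pinhole camera model. This is a minimal sketch, not the researchers' code: the function names are hypothetical, the physical cornea radius is a caller-supplied assumption, and estimating the eye's orientation is left out.

```python
def estimate_eye_depth(focal_length_px, cornea_radius_px, cornea_radius_mm):
    """Pinhole model: distance to the cornea in mm from its apparent size.

    cornea_radius_mm is an assumed physical radius supplied by the caller;
    this sketch does not prescribe a value.
    """
    if focal_length_px <= 0 or cornea_radius_px <= 0 or cornea_radius_mm <= 0:
        raise ValueError("all inputs must be positive")
    return focal_length_px * cornea_radius_mm / cornea_radius_px


def estimate_eye_position(center_px, principal_point_px, focal_length_px, depth_mm):
    """Back-project the cornea's image centre to a 3D point in camera coordinates."""
    u, v = center_px
    cx, cy = principal_point_px
    x = (u - cx) * depth_mm / focal_length_px
    y = (v - cy) * depth_mm / focal_length_px
    return (x, y, depth_mm)


# Illustrative numbers only: a cornea imaged at 20 px radius, assumed 6 mm in reality.
depth = estimate_eye_depth(focal_length_px=1000.0, cornea_radius_px=20.0, cornea_radius_mm=6.0)
position = estimate_eye_position((600.0, 400.0), (500.0, 400.0), 1000.0, depth)
```

The smaller the cornea appears in the image, the farther away the eye must be, which is why a roughly uniform cornea size makes the estimate possible at all.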

An important step is optimizing the cornea's position, which refines the initial position estimate for each image. The researchers say this technique is critical to the robustness of the method.

To separate the reflection of the outside world from the iris pattern, the team used a modified training framework from Nerfstudio. They had NeRF learn both the reflected 3D scene and the iris pattern, and simultaneously trained the system to separate the two elements.

To identify the iris, the system was given a radially symmetric shape as a starting assumption, along with the assumption that the reflection changes with perspective while the texture of the iris stays the same.
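One way to picture a radial symmetry assumption is as a penalty that grows when a texture varies along the angular direction. The sketch below is a hypothetical illustration, not the loss used in the paper: it assumes the iris texture has already been resampled into a polar grid of rings and angle bins.

```python
def radial_symmetry_penalty(polar_texture):
    """Mean angular variance of a texture sampled on a polar grid.

    polar_texture[i][j] is the intensity on ring i at angle bin j.
    A perfectly radially symmetric texture is constant along each ring
    and therefore scores 0.
    """
    total = 0.0
    for ring in polar_texture:
        mean = sum(ring) / len(ring)
        total += sum((value - mean) ** 2 for value in ring) / len(ring)
    return total / len(polar_texture)


# Each ring is constant, so the texture is radially symmetric.
symmetric = [[0.2, 0.2, 0.2, 0.2], [0.8, 0.8, 0.8, 0.8]]
# Intensity alternates around the ring, so the symmetry is broken.
asymmetric = [[0.0, 1.0, 0.0, 1.0]]

penalty_symmetric = radial_symmetry_penalty(symmetric)
penalty_asymmetric = radial_symmetry_penalty(asymmetric)
```

A penalty like this would nudge a learned texture toward ring-like patterns, leaving view-dependent detail to be explained by the reflected scene instead.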

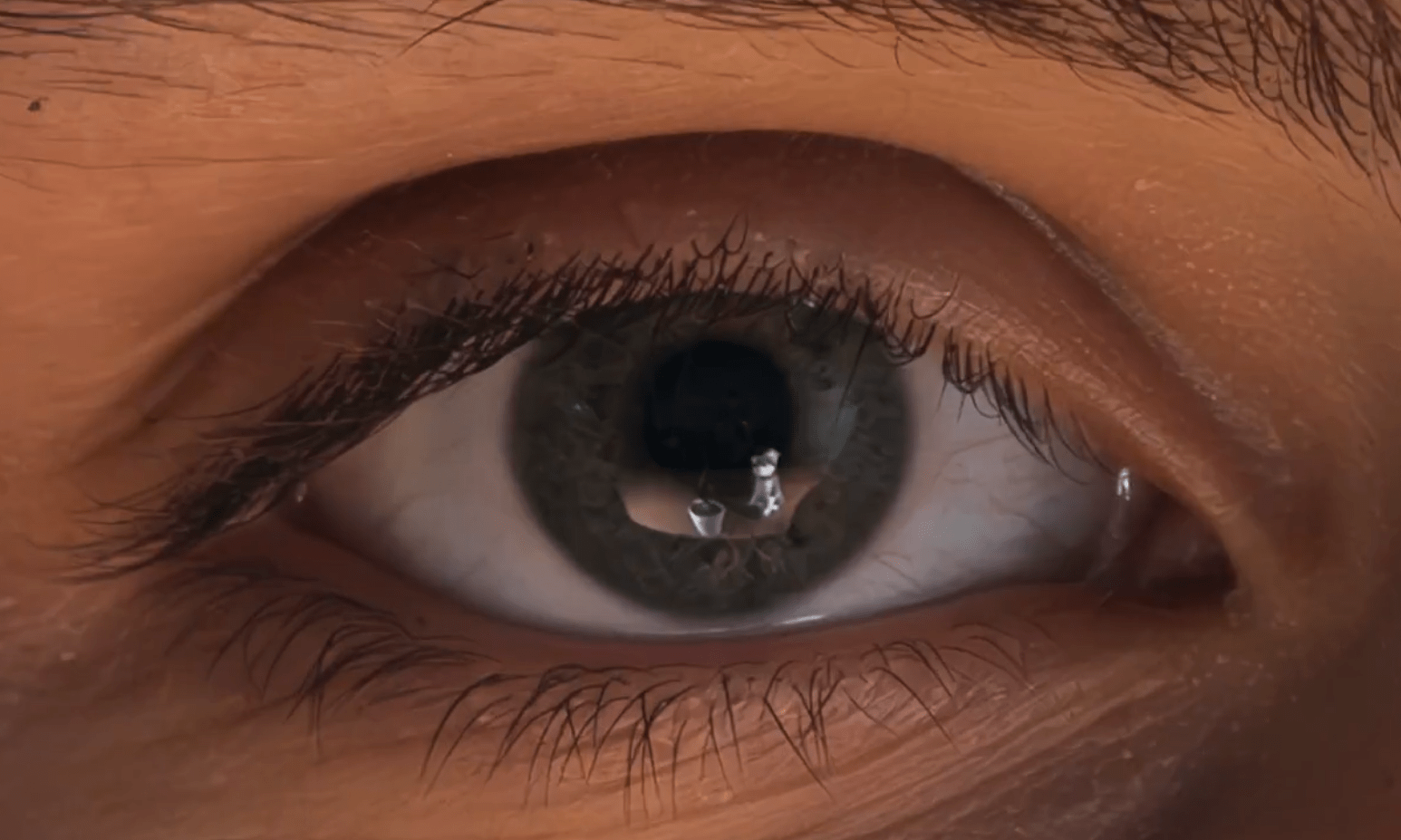

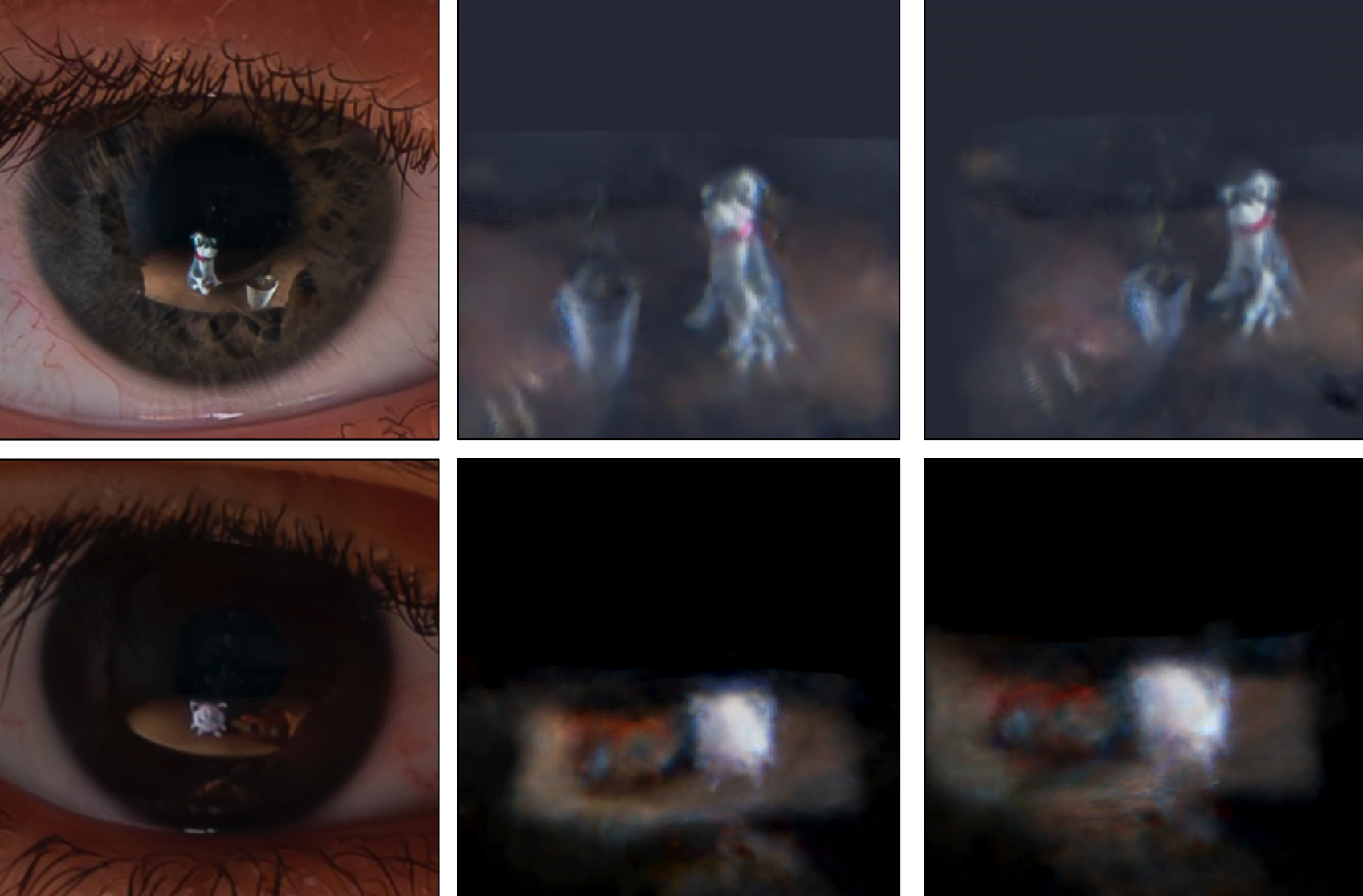

Reconstructing a 3D scene from the reflection in the eye of a portrait photo. | Video: Alzayer, Zhang et al.

3D NeRF with only one camera

While typical NeRF reconstructions require multiple camera views of the object being reconstructed, the researchers simply moved the person through the camera's field of view. The reflections in the eye, which change with even minimal movement, provided the different perspectives needed to reconstruct the 3D scene, even though only one camera saw the person.
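Why a small movement yields a new perspective becomes clearer when the cornea is treated as a tiny curved mirror. The following sketch approximates the cornea as a sphere, which is a simplification made here for illustration, and bounces a camera ray off it. The function names are hypothetical and not taken from the paper.

```python
import math


def reflect(direction, normal):
    """Mirror reflection of a unit direction about a unit surface normal."""
    d_dot_n = sum(d * n for d, n in zip(direction, normal))
    return tuple(d - 2.0 * d_dot_n * n for d, n in zip(direction, normal))


def reflect_off_sphere(origin, direction, center, radius):
    """Intersect a ray with a sphere and return (hit_point, reflected_direction).

    direction must have unit length. Returns None if the ray misses the sphere
    or the sphere lies behind the ray origin.
    """
    oc = tuple(o - c for o, c in zip(origin, center))
    b = sum(d * o for d, o in zip(direction, oc))
    c = sum(o * o for o in oc) - radius ** 2
    discriminant = b * b - c
    if discriminant < 0:
        return None
    t = -b - math.sqrt(discriminant)  # nearest intersection along the ray
    if t < 0:
        return None
    hit = tuple(o + t * d for o, d in zip(origin, direction))
    normal = tuple((h - k) / radius for h, k in zip(hit, center))
    return hit, reflect(direction, normal)


# A camera at the origin looks straight at a sphere centred 10 units away.
result = reflect_off_sphere((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), (0.0, 0.0, 10.0), 2.0)
# A ray pointing sideways never reaches the sphere.
miss = reflect_off_sphere((0.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 10.0), 2.0)
```

Because the surface normal changes quickly across a curved surface, shifting the sphere's centre even slightly sends the reflected rays into different parts of the scene, which is the effect the single-camera setup relies on.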

To validate their method, the team used synthetic eye images rendered with Blender and real photographs of a person moving in the camera's field of view. Despite challenges such as inaccuracies in locating the cornea and estimating its geometry, and the inherently low resolution of the images, their method showed promise, they say. In tests with synthetic eye models, the team was able to perform full-scene reconstructions using only eye reflections.

Highly detailed reconstruction of a 3D scene using a synthetic eye model under optimal conditions. | Video: Alzayer, Zhang et al.

However, the tests were conducted only in the laboratory; in reality, many other factors are at play, and the assumptions made in the paper, such as about the texture of the iris, may be too simplistic. Brighter iris textures or situations involving strong eye rotation could pose additional challenges, the researchers write.

The team hopes the work will stimulate further research into how unexpected, random visual signals can be used to reveal information about the surrounding world.
