Neural Radiance Fields (NeRFs) can render photorealistic 3D scenes from photos and videos. The open-source tool Nerfstudio makes it easy to get started with the new visualization technology.

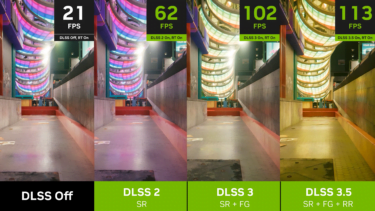

Neural graphics methods are developing rapidly. Companies such as Nvidia are betting on them, investing in AI-based rendering methods like DLSS and in the corresponding hardware.

When it comes to rendering 3D content, Neural Radiance Fields (NeRFs) are considered a hot candidate for a key rendering technology of the future. NeRFs are neural networks that learn a 3D representation of a scene from 2D images and can then render it from new viewpoints.

Videos, for example, can be turned into photorealistic 3D scenes, and photos of individual objects can be turned into realistic 3D renderings.
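To make the idea of rendering from a new viewpoint more concrete, here is a minimal sketch of the front-to-back compositing step commonly used in NeRF-style volume rendering. It is not Nerfstudio's code: `toy_field` and `render_ray` are hypothetical names, and `toy_field` is a hand-written stand-in (a red ball) for the trained network that would normally return density and color at each 3D point.

```python
import math


def toy_field(x, y, z):
    """Hypothetical stand-in for a trained NeRF: a red ball of radius 1 at the origin.

    A real NeRF would query a neural network here (and usually also pass
    the viewing direction); this toy version ignores it.
    """
    inside = x * x + y * y + z * z <= 1.0
    density = 5.0 if inside else 0.0
    return density, (1.0, 0.0, 0.0)


def render_ray(origin, direction, near=0.0, far=4.0, n_samples=64):
    """Composite evenly spaced samples along one camera ray, front to back."""
    delta = (far - near) / n_samples      # spacing between samples
    transmittance = 1.0                   # share of light still reaching the camera
    pixel = [0.0, 0.0, 0.0]
    for i in range(n_samples):
        t = near + (i + 0.5) * delta      # sample at the midpoint of each segment
        point = [o + t * d for o, d in zip(origin, direction)]
        density, rgb = toy_field(*point)
        alpha = 1.0 - math.exp(-density * delta)   # opacity of this segment
        weight = transmittance * alpha
        for c in range(3):
            pixel[c] += weight * rgb[c]
        transmittance *= 1.0 - alpha
    return pixel, transmittance


if __name__ == "__main__":
    # One ray through the ball and one that misses it entirely.
    print(render_ray((0.0, 0.0, -2.0), (0.0, 0.0, 1.0)))
    print(render_ray((3.0, 0.0, -2.0), (0.0, 0.0, 1.0)))
```

Rendering a full image from a new viewpoint amounts to casting one such ray per pixel from the new camera position. Training adjusts the network so that the rendered pixels match the input photos.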

Nvidia, Google, DeepMind, and Meta invest in NeRFs

Lately, Nvidia, Google, and Meta have unveiled ever faster and better NeRF methods that enable 3D portraits and could one day revolutionize Street View or bring objects into the metaverse more quickly. Google already uses a variant of the technology in Immersive View for Google Maps.

As with other AI technologies, access to NeRFs has so far been limited by high computing requirements and a lack of accessible tools. A group of researchers has now launched Nerfstudio, a Python library that provides a simplified end-to-end process for creating, training, and visualizing NeRFs.

Releasing nerfstudio, a plug-and-play python library to easily create your own NeRFs! @nerfstudioteam is a contributor friendly open-source repo with a realtime web viewer that makes it easy to make cool videos https://t.co/zw0ZPqIZ4c https://t.co/NZM8GPjNrF #nerfstudio 1/ pic.twitter.com/hmTz8ePjW1

- Angjoo Kanazawa (@akanazawa) October 5, 2022

Nerfstudio makes it easier to get started with NeRFs

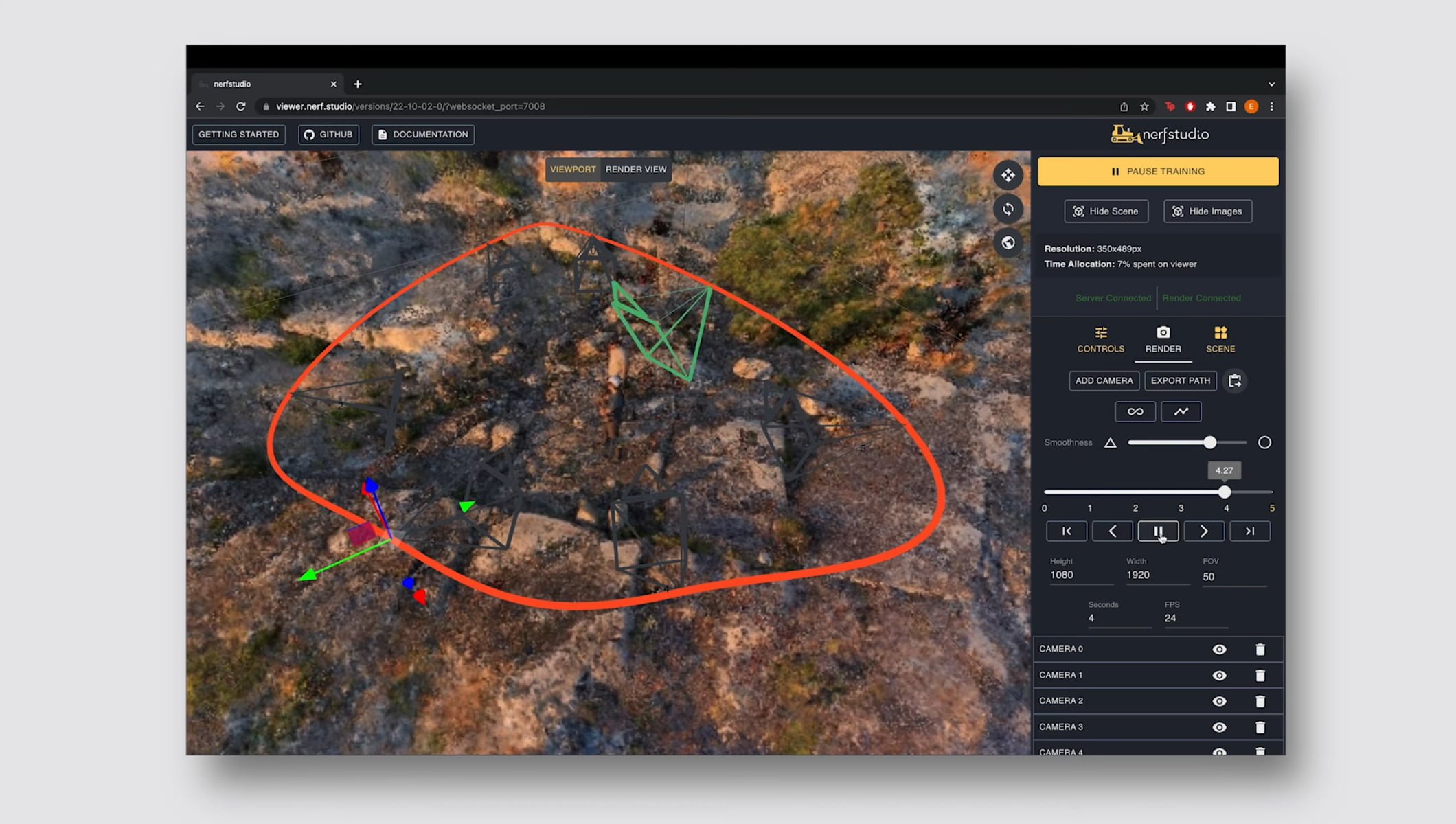

Every step in creating NeRFs is modularized in Nerfstudio, the team writes. The software is designed to provide a user-friendly experience when exploring the technology. In addition to the API and a clean web interface, Nerfstudio offers tutorials and documentation.

To get started, the group recommends gaining initial experience with the included NeRF of a Lego scene created in Blender. Subsequently, you can venture into training with your own images.

Amazing render by @brenthyi! Captured on a phone (no drone involved) #nerfacto #NeRF #neuralrendering #AI #computervision #nerfstudio pic.twitter.com/lRegSdfNTa

- nerfstudio (@nerfstudioteam) October 13, 2022

Nerfstudio still requires rudimentary coding knowledge, plus some time and a willingness to read through the manuals. As in the early days of the current Stable Diffusion rush, data preparation, training, and other steps have to be done in code. Training progress can then be followed in the web viewer, where custom camera paths can be defined and rendered as a video fly-through of the NeRF.

The code, examples, and further information are available on the Nerfstudio GitHub. Instructions and further help are available on the Nerfstudio Docs page and on the Nerfstudio Discord.