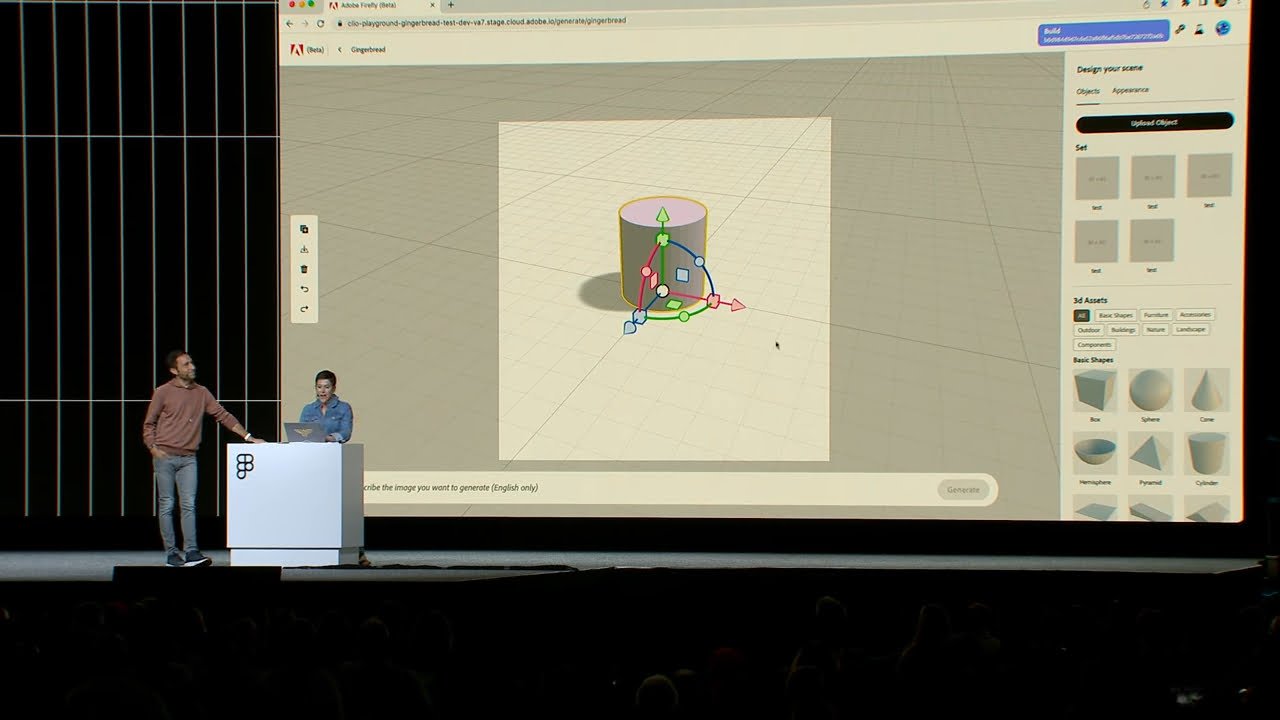

Adobe offers a glimpse into the future of generative image processing with Project Gingerbread, a more accurate AI tool for designers.

Following Firefly, US graphics specialist Adobe has unveiled another AI image tool. It makes generation more precise while keeping it flexible by basing the output on 3D scene layouts that the user creates. The generated image is laid over these layouts instead of simply being produced in a random composition.

"AI tends to put things smack bang in the middle of the image. But what if I want to compose around it?" said Brooke Hopper, principal designer for emerging design at Adobe.

3D modeling with text prompts

First, the user roughly models the scene with 3D objects. Adobe demonstrates this with a whisky glass: a 3D shape that approximates the glass is moved slightly into the background and toward the right edge of the frame.

When processing the text prompt "whisky glass on wooden table", the image system then follows this layout and generates the glass where the 3D model sits. The whisky glass appears at roughly the same position in the image where the 3D shape was placed.

In this way, Gingerbread reduces randomness in image generation. Users can also upload custom 3D objects that more closely resemble the objects they want to visualize.

AI image generation becomes more precise

Image AI systems like Midjourney currently give their customers little control over the images they generate, apart from text prompts.

However, research projects such as DragGAN show that precise control over AI-generated images is technically possible. Meta's Make-A-Scene and the GLIGEN project, which use 2D layouts such as sketches as templates for generation, also offer more control than text-only image systems.

So Adobe's idea of making AI image generation more controllable isn't new. But if it is integrated into a widely used production tool like Photoshop, it may be the first time the approach moves out of the research lab and into everyday use.

Adobe unveiled Project Gingerbread at Config 2023, a conference organized by Figma, the design company Adobe had agreed to acquire. It is not yet known when, or in what form, Project Gingerbread will be released; one possibility is as an extension of Firefly.

Adobe has integrated Firefly into various programs in its Creative Cloud suite, including Photoshop, Premiere Pro, and Illustrator, in ways that go beyond simple text-to-image prompts.

More news about Gingerbread could come at Adobe MAX in October 2023. Adobe typically saves big announcements for its in-house conference. But the AI industry moves so quickly that progress is measured in days rather than weeks, which is probably why Adobe decided to show Gingerbread early.