Captive to industry, robots now dream of work, with no electric sheep in sight

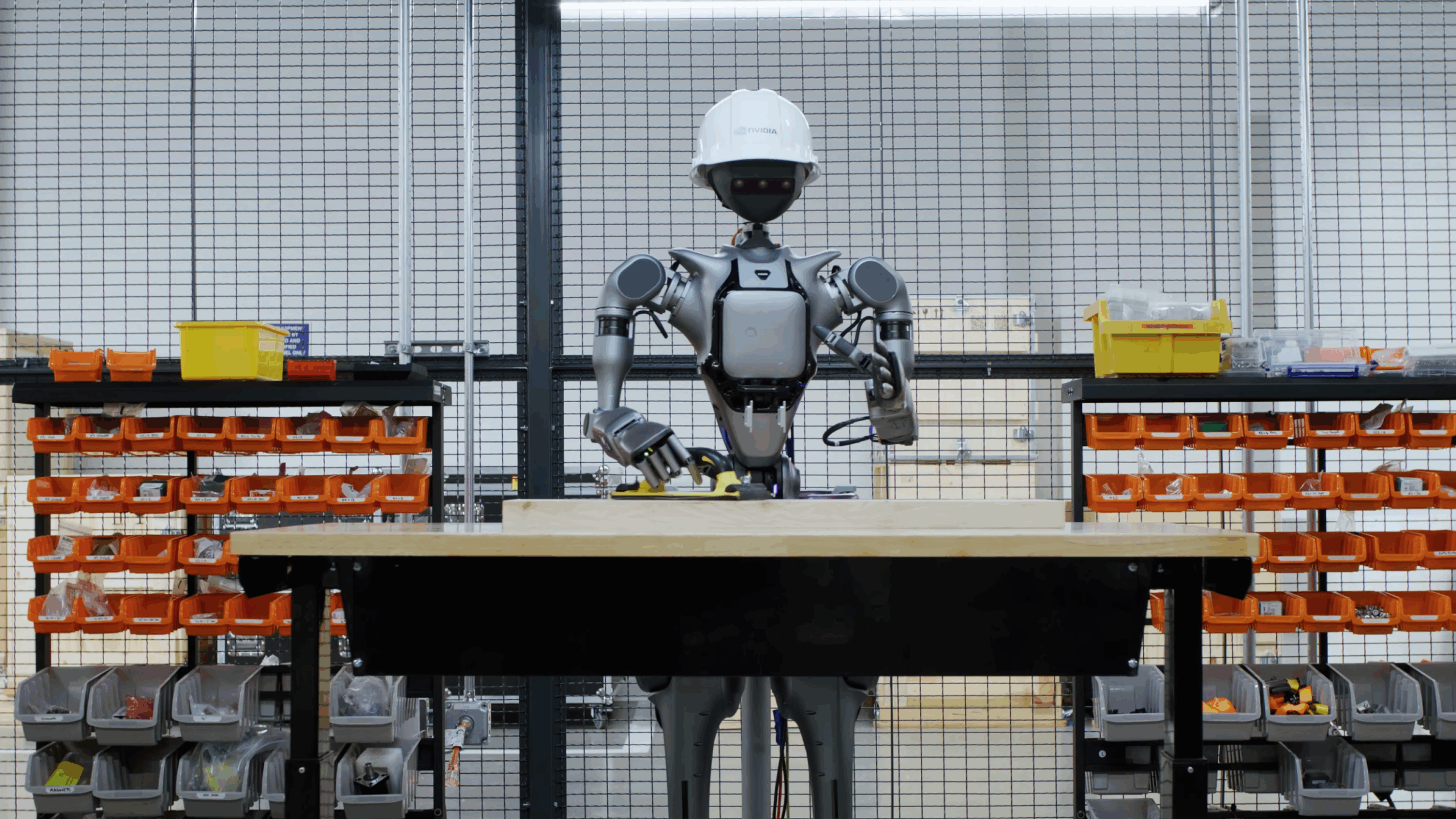

At Computex 2025, Nvidia unveiled an updated version of its foundation model for humanoid robots, GR00T.

The latest release, GR00T N1.5, builds on the original GR00T N1 announced in March. The new model is designed to better handle unfamiliar environments and can recognize and perform a wide range of material handling tasks.

GR00T N1.5 is built around a dual-system architecture. System 2 handles cognitive tasks like planning, while System 1 controls real-time motor execution. The goal is to give humanoid robots general-purpose reasoning and action skills, much as large language models have done for language tasks.
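To make the split between the two systems concrete, here is a minimal toy sketch in Python. It is not Nvidia's implementation: the function names (`system2_plan`, `system1_act`, `run`), the one-dimensional "robot", and the replanning interval are all assumptions made for illustration. The only idea taken from the article is that a slower planning component sets goals while a faster component executes motion at every step.

```python
from dataclasses import dataclass


@dataclass
class Plan:
    target: float  # desired 1-D position; a toy stand-in for a task plan


def system2_plan(instruction: str, position: float) -> Plan:
    """Slow 'cognitive' step (hypothetical): map an instruction to a target."""
    targets = {"go to shelf": 1.0, "go to bin": -1.0}
    # Unknown instructions keep the robot where it is.
    return Plan(target=targets.get(instruction, position))


def system1_act(plan: Plan, position: float, gain: float = 0.5) -> float:
    """Fast 'motor' step (hypothetical): proportional move toward the target."""
    return gain * (plan.target - position)


def run(instruction: str, steps: int = 20, replan_every: int = 10) -> float:
    position = 0.0
    plan = system2_plan(instruction, position)
    for t in range(steps):
        if t % replan_every == 0:
            # The planner runs at a lower rate than the controller.
            plan = system2_plan(instruction, position)
        # The controller runs on every tick.
        position += system1_act(plan, position)
    return position


final_position = run("go to shelf")
```

In this sketch the planner is consulted only every tenth tick, while the controller acts on every tick, which mirrors the division of labor described above in a deliberately simplified form.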

According to Nvidia, GR00T N1.5 can be trained in just 36 hours using synthetic data, a process that would normally take about three months with traditional methods.

Early adopters include AeiRobot, Foxlink, Lightwheel, and NEURA Robotics. AeiRobot is using the model to control industrial pick-and-place workflows through natural language. Foxlink aims to make its industrial robots more flexible, while Lightwheel is validating synthetic training data for humanoid robots in manufacturing.

GR00T-Dreams: Training data from AI-generated videos

Nvidia also introduced a new blueprint called GR00T-Dreams, which uses image-driven AI video models to generate synthetic motion data for robot training. The system was developed under the direction of Jim Fan, who leads Nvidia's research group for embodied generative AI.

Developers first train a world model using Cosmos Predict. Next, GR00T-Dreams takes a single image and generates a video showing a robot performing a new task in a new environment. The system then extracts so-called action tokens, compressed data fragments that are used to teach the robot new behaviors.

Real-world robots can only collect a limited amount of data each day, but with GR00T-Dreams, developers can generate as much training data as needed. The videos are produced by the Cosmos AI video generator, which has been fine-tuned with robot footage from Nvidia’s labs. These synthetic videos are then fed into the training pipeline.
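The flow described above (generate a video from a starting point, then compress the motion into action tokens) can be illustrated with a toy Python sketch. Everything here is an assumption for illustration: `generate_video` and `extract_action_tokens` are hypothetical names, the "video" is just a list of one-dimensional robot states, and the tokens are quantized frame-to-frame changes. The article does not describe how GR00T-Dreams or Cosmos actually compute action tokens.

```python
from typing import List


def generate_video(start_state: float, goal_state: float, frames: int = 5) -> List[float]:
    """Stand-in for a video world model: linearly interpolate a 1-D state."""
    step = (goal_state - start_state) / (frames - 1)
    return [start_state + i * step for i in range(frames)]


def extract_action_tokens(video: List[float], bin_size: float = 0.25) -> List[int]:
    """Quantize frame-to-frame changes into integer tokens (toy compression)."""
    return [round((b - a) / bin_size) for a, b in zip(video, video[1:])]


# One synthetic "video" yields one sequence of training tokens.
video = generate_video(0.0, 2.0, frames=5)
tokens = extract_action_tokens(video)
print(tokens)
```

Because the generator is a function rather than a physical robot, it can be called as often as needed with different start and goal states, which is the scaling argument the article makes for synthetic data.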

New simulation systems narrow the sim-to-real gap

To further accelerate training and testing, Nvidia announced updates to several open simulation and data frameworks, including Isaac Sim 5.0 and Isaac Lab 2.2, both available on GitHub. Isaac Lab now offers new test environments for GR00T N models. Nvidia is also releasing an open-source dataset containing 24,000 high-quality motion sequences for humanoid robots.

A new world model called Cosmos Reason is also available. It uses a "chain of thought" approach to curate high-quality synthetic training data. Cosmos Predict 2, which powers GR00T-Dreams, is set to launch soon on Hugging Face.

Rounding out the setup, the GR00T-Mimic blueprint, introduced in March, generates large volumes of synthetic motion data for manipulation tasks from just a handful of human demonstrations. Companies like Foxconn and Foxlink are already using it to speed up their training pipelines.
