CAT4D from Google Deepmind turns ordinary videos into dynamic 3D scenes

Key Points

- Researchers from Google Deepmind, Columbia University and UC San Diego have developed an AI system called CAT4D that can generate dynamic 3D scenes from ordinary videos.

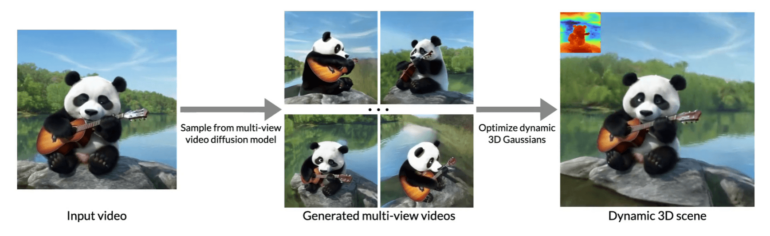

- CAT4D uses a novel multi-view video diffusion model, trained on a mixture of real and synthetic data, to generate views of a video from multiple angles and then compute a 3D reconstruction that changes over time.

- The technology could have applications in areas such as game development, film and augmented reality, although the system still struggles with temporal extrapolation beyond the input frames.

A new AI system from Google Deepmind can turn ordinary videos into dynamic 3D scenes. The team, which includes researchers from Columbia University and UC San Diego, calls its creation CAT4D.

The system uses a diffusion model to take a video shot from a single angle and generate views of it from multiple perspectives. It then combines these viewpoints into a dynamic 3D scene. The end result? A moving scene that you can look at from many angles.

Video: Google Deepmind

Until now, capturing something like this required elaborate setups with multiple cameras recording the same scene simultaneously. CAT4D simplifies the process by working with regular video footage.

Training challenges and solutions

The team faced a key problem: there was little existing data suitable for training such a system. To work around this, they got creative and mixed real-world footage with computer-generated content. The training data included multi-view images of static scenes, single-perspective videos, and synthetic 4D data.

The diffusion model learns to generate images from specific camera angles at specific moments in time. According to the researchers, CAT4D produces higher-quality results than comparable systems, though it still struggles to generate video that extends beyond the time span of the original footage.

Technology like CAT4D could find its way into several industries, the researchers say. Game developers might use it to create virtual environments, while filmmakers and AR developers could incorporate it into their workflows.

Anyone interested in seeing more examples can check out the project's GitHub page.
