Cerebras' WSE-3 enables AI models ten times larger than GPT-4 and Gemini

Cerebras Systems has unveiled its third wafer-scale AI chip, WSE-3, which is expected to double the performance of its predecessor and will power an 8 exaflops supercomputer in Dallas.

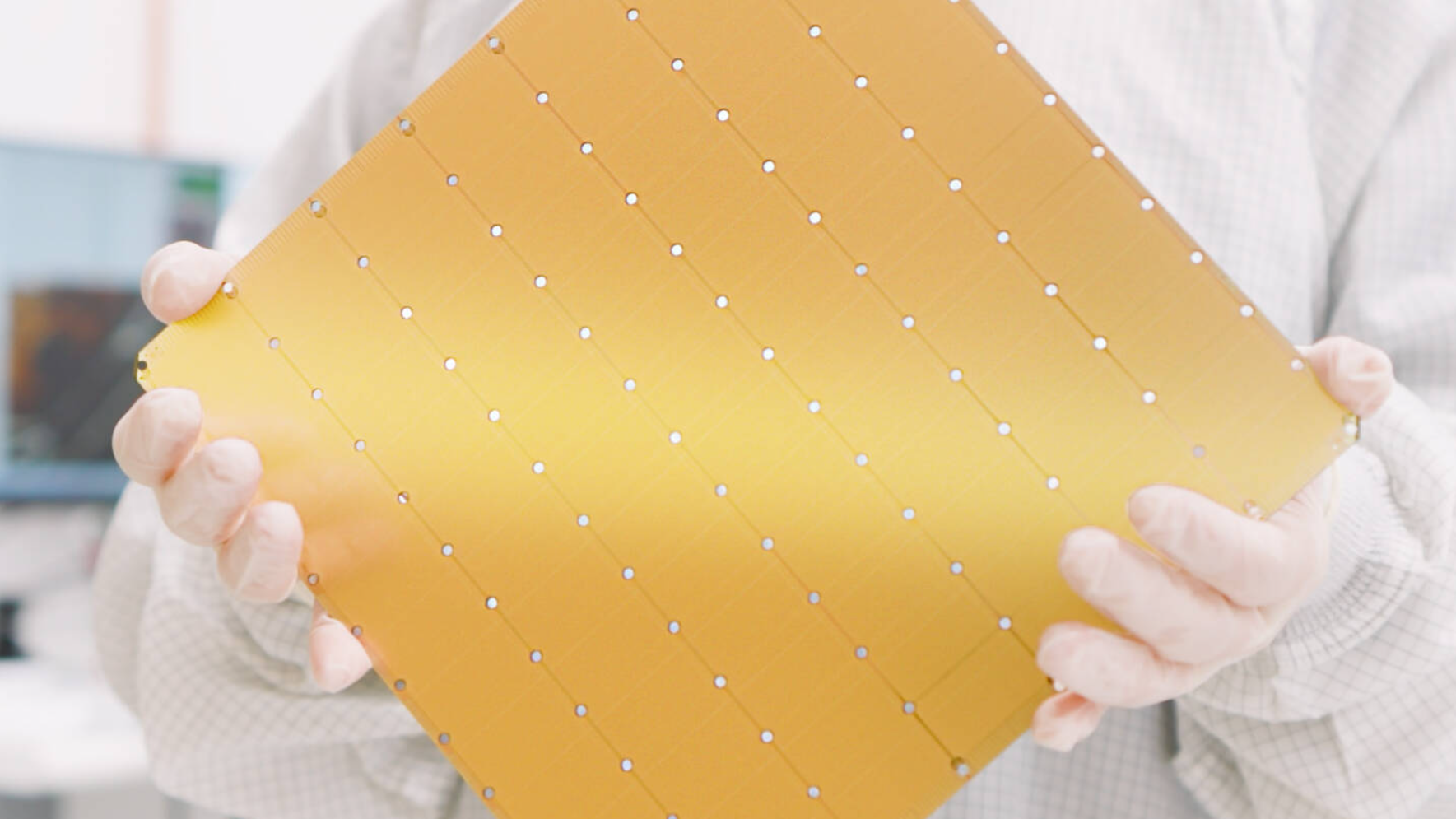

Cerebras Systems has unveiled the WSE-3, the third generation of its wafer-scale AI megachip. According to the company, the chip is twice as powerful as its predecessor while consuming the same amount of power. With 4 trillion transistors and a more than 50 percent increase in transistor density thanks to the latest chip manufacturing technology, Cerebras continues its tradition of producing the world's largest single chip. The square chip, with an edge length of 21.5 centimeters, uses nearly an entire 300-millimeter silicon wafer.
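The geometry behind the "nearly an entire wafer" figure can be checked with a few lines of arithmetic. The sketch below uses only the two dimensions quoted above (a 21.5-centimeter edge and a 300-millimeter wafer); the variable names are illustrative, and the comparison assumes an idealized, perfectly square chip on a perfectly circular wafer.

```python
import math

WAFER_DIAMETER_MM = 300.0  # wafer size quoted in the article
CHIP_EDGE_MM = 215.0       # 21.5 cm edge length quoted in the article

# Largest perfect square that fits inside a circle: edge = diameter / sqrt(2)
max_square_edge_mm = WAFER_DIAMETER_MM / math.sqrt(2)

# Share of the circular wafer's area covered by an idealized square chip
chip_area_mm2 = CHIP_EDGE_MM ** 2
wafer_area_mm2 = math.pi * (WAFER_DIAMETER_MM / 2) ** 2
area_fraction = chip_area_mm2 / wafer_area_mm2

print(f"Largest inscribed square edge: {max_square_edge_mm:.1f} mm")
print(f"Chip area: {chip_area_mm2:.0f} mm^2")
print(f"Share of wafer area: {area_fraction:.1%}")
```

Under these assumptions the quoted edge is slightly longer than the largest perfect square that fits on the wafer (about 212 millimeters), which suggests the corners are clipped or the figure is rounded. In other words, the chip is about as large as a square cut from a round 300-millimeter wafer can be, even though it covers roughly two thirds of the wafer's total area.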

Since the first megachip, the WSE-1, arrived in 2019, the number of transistors has more than tripled. The WSE-3 is built on TSMC's 5-nanometer process, whereas the 2021 WSE-2 used TSMC's 7-nanometer process.

WSE-3-based supercomputers to enable AI training on a new scale

The computer built around the new AI chip, the CS-3, is said to be capable of training new generations of huge language models, ten times larger than OpenAI's GPT-4 and Google's Gemini. Cerebras claims that the CS-3 can train neural network models with up to 24 trillion parameters without the need for software tricks that other computers require.

Up to 2,048 systems can be combined, a configuration that could train a language model such as Llama 70B in just one day. The first CS-3-based supercomputer, Condor Galaxy 3 in Dallas, will consist of 64 CS-3s and is expected to achieve 8 exaflops of performance. Like its CS-2-based sister systems, it will be owned by Abu Dhabi's G42.
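The figures in this paragraph imply a per-system compute number that the article does not state directly. The following sketch derives it from the 8 exaflops and 64 systems quoted above. The function name is hypothetical, the even split across systems is an assumption, and the extrapolation to 2,048 systems is a naive linear estimate that ignores interconnect overhead. The article also does not say at which numeric precision the exaflops figure is measured.

```python
PETAFLOPS_PER_EXAFLOP = 1000


def petaflops_per_system(total_exaflops: float, systems: int) -> float:
    """Average compute per system, assuming the total is split evenly."""
    return total_exaflops * PETAFLOPS_PER_EXAFLOP / systems


# Condor Galaxy 3: 8 exaflops across 64 CS-3 systems (figures from the article)
cg3_per_system = petaflops_per_system(8, 64)

# Naive linear extrapolation to the maximum cluster size of 2,048 systems.
# This is an assumption for illustration, not a figure from the article.
max_cluster_exaflops = cg3_per_system * 2048 / PETAFLOPS_PER_EXAFLOP

print(f"Per CS-3: {cg3_per_system:.0f} petaflops")
print(f"2,048 systems (linear estimate): {max_cluster_exaflops:.0f} exaflops")
```

On these numbers, each CS-3 contributes about 125 petaflops, and a full 2,048-system cluster would be 32 times the size of Condor Galaxy 3.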

Cerebras has also entered into a partnership with Qualcomm to reduce the price of AI inference by a factor of ten. To do this, the team plans to train AI models on CS-3 systems and then make them more efficient using methods such as pruning. The networks trained by Cerebras will then run on Qualcomm's new inference chip, the AI 100 Ultra.
