Chatbots can improve online political debate, study finds

In a field experiment, researchers used real-time phrasing assistance from a language model to improve the quality of discussion in a chat about gun control in the United States. The results support their thesis that chatbots could have a positive impact on the culture of debate.

In total, the researchers recruited 1,574 people with different views on US gun control. The participants discussed the issue in an online chat room.

The perfect setting for chaos, anger, and contention? Apparently not when a language model focused on kindness and appreciation supports the discussion.

Two-thirds of AI suggestions accepted

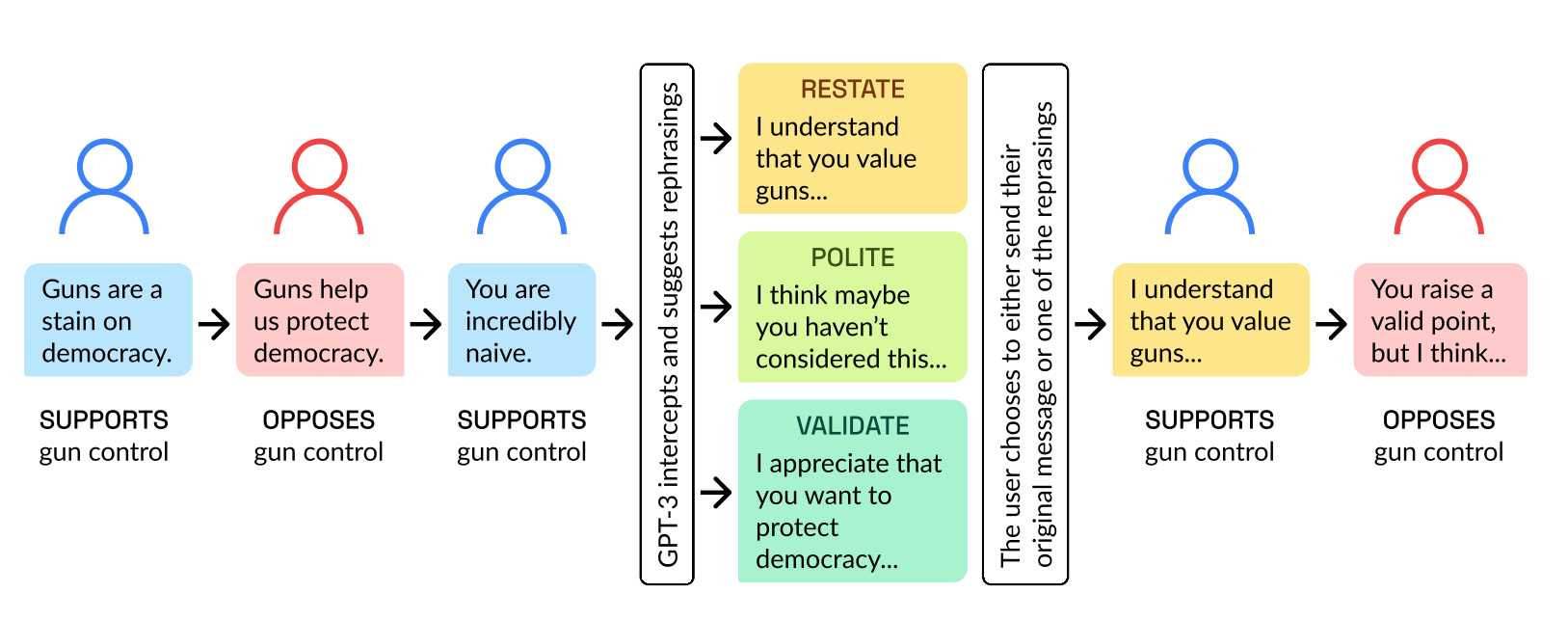

A large language model (GPT-3) reads conversations and suggests alternative wording before the message is sent. The suggestion is intended to make the message more reassuring, validating, or polite.

Subjects can choose to use the suggested wording, change it, or keep their original message. In total, GPT-3 made 2,742 suggestions for improvement, of which 1,798, or about two-thirds, were accepted. Conversations averaged twelve messages, and participants were paid for taking part.
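The three options open to a participant can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the researchers' implementation: `placeholder_suggest` is a hypothetical stand-in for the language model, and the names `Choice`, `Outcome`, `resolve_message`, and `acceptance_rate` are invented for this example.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Optional


class Choice(Enum):
    ACCEPT = "accept"  # send the suggested wording
    EDIT = "edit"      # send the participant's own revision
    KEEP = "keep"      # send the original message unchanged


@dataclass
class Outcome:
    sent: str
    choice: Choice


def placeholder_suggest(message: str) -> str:
    # Hypothetical stand-in for the model call: it only prepends a
    # validating phrase and does not reflect how GPT-3 was prompted.
    return "I see where you're coming from. " + message


def resolve_message(
    original: str,
    suggest: Callable[[str], str],
    choice: Choice,
    edited: Optional[str] = None,
) -> Outcome:
    """Return the text that is actually sent, given the participant's choice."""
    suggestion = suggest(original)
    if choice is Choice.ACCEPT:
        return Outcome(suggestion, choice)
    if choice is Choice.EDIT:
        if edited is None:
            raise ValueError("an edited message is required for Choice.EDIT")
        return Outcome(edited, choice)
    return Outcome(original, choice)


def acceptance_rate(accepted: int, total: int) -> float:
    """Share of suggestions that were accepted."""
    return accepted / total


if __name__ == "__main__":
    result = resolve_message(
        "Guns are the problem.", placeholder_suggest, Choice.ACCEPT
    )
    print(result.sent)
    # Figures reported in the article: 1,798 of 2,742 suggestions accepted.
    print(f"{acceptance_rate(1798, 2742):.1%}")
```

With the figures reported above, the acceptance rate works out to roughly 65.6 percent, which is the "two-thirds" in the text.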

According to the team, the experiment was prompted in particular by how quickly debates escalate on digital channels and by the question of whether AI can help improve the culture of discussion there.

"Such toxicity increases polarization and corrodes the capacity of diverse societies to cooperate in solving social problems," the paper says.

Large language models should promote understanding and respect

The results of the experiment support the team's hypothesis that a language model can improve online discussion culture: According to participating researcher Chris Rytting, a Ph.D. candidate at Brigham Young University, the AI suggestions had "significant effects in terms of decreasing divisiveness and increasing conversation quality, while leaving policy views unchanged."

The researchers measured these improvements using text analysis of conversations before and after an AI suggestion, as well as a pre- and post-chat questionnaire. A follow-up survey nearly three months after the experiment checked whether the chatbot interventions had a lasting effect on the subjects, and the team found no anomalies.

Political opinions were not affected by the language model, the researchers said. Even if disagreements persist, high-quality political discourse is good for a society's social cohesion and democracy, they said.

"Such dialogue is a necessary, even if insufficient, condition for increasing mutual understanding, compromise, and coalition building."

The next step could be to confirm and deepen these findings in further studies under real-life conditions, such as unpaid and longer conversations among family or friends.
