ChatGPT trumps human authenticity, relevance, coherence in political debate study

A University of Passau study found that British citizens view AI-generated responses in political debates as more authentic and relevant than actual responses from public figures.

The study surveyed 948 British citizens, who rated questions and answers from the BBC's "Question Time" program alongside responses generated by GPT-4 Turbo. Every response was attributed to a public figure, and participants were not told which ones were AI-generated.

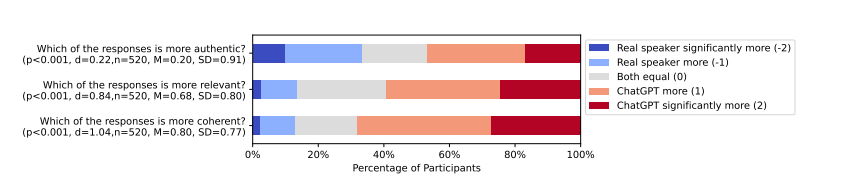

"Our results show clearly that LLM-generated, impersonated content is judged as more authentic, coherent, and relevant than the actual debate responses," the study authors said.

Perception vs. reality

The study found that AI-generated responses often differed from actual responses. About half were rated as dissimilar, while only a third were rated as similar. Worryingly, even when the content differed from the public figures' actual positions, participants still rated the AI responses as authentic.

Linguistic analysis showed differences in language use. AI answers contained more nominalizations, greater lexical variety, and more overlap with words from the questions. Real answers contained more phrases like "I think."

The comparison has limitations, however, because it sets AI-generated text against answers given in a live setting. Politicians on air are under time pressure and can't put the same resources into their answers as an AI system.

Potential for misuse

The researchers caution: "Threat actors can easily use LLMs to pollute public information spheres with fake but authentic political statements, e.g., to sow confusion about what the actual remarks were and to invent talking points."

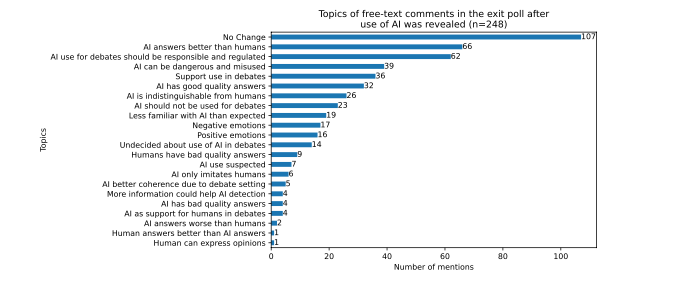

Over 85% of study participants felt AI use in public debates should be transparent, and information about AI development should be shared.

The researchers stress the need to educate the public about modern AI capabilities. Many participants initially underestimated the technology's potential. After learning about AI use, they showed greater appreciation for AI capabilities but also increased desire for regulation.

The study backs up a warning from OpenAI CEO Sam Altman, who said that AI will eventually achieve superhuman powers of persuasion, and this "may lead to some very strange outcomes."

Another study, from EPFL and Italy's Fondazione Bruno Kessler, found that GPT-4, with access to personal information, was able to increase participants' agreement with their opponents' arguments by a remarkable 81.7 percent compared to debates between humans.
