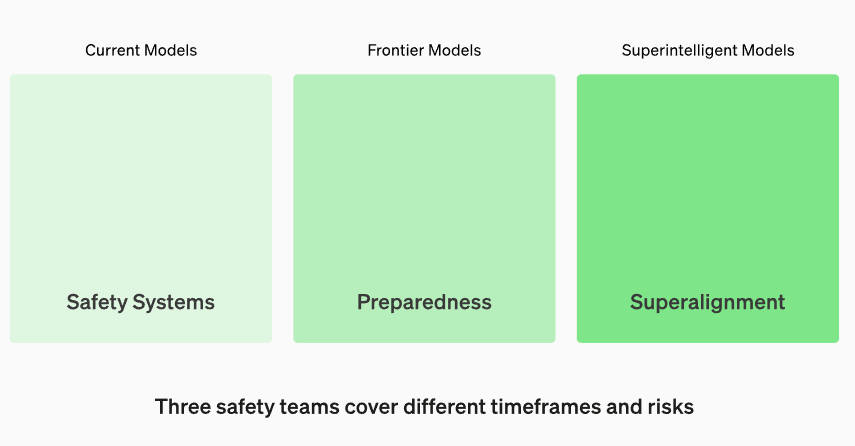

OpenAI unveils a new framework to help prevent catastrophic AI risks. The company now has a total of three teams working specifically on AI risks.

The "Preparedness Framework" is a living document that describes strategies for monitoring, assessing, predicting, and hedging against catastrophic AI risks.

OpenAI intends to track catastrophic risk through rigorous assessment. To this end, it aims to develop and refine assessment procedures and other monitoring methods that measure the level of risk accurately.

At the same time, the organization aims to anticipate future risk developments to prepare safety measures in advance.

OpenAI also commits to identifying and investigating emerging risks ("unknown unknowns"). The goal is to address potential threats before they escalate.

Cybersecurity, Bioweapons, Persuasion, and Autonomy

The Preparedness Framework identifies four major risk categories:

- cybersecurity,

- chemical, biological, radiological, and nuclear (CBRN) threats,

- persuasion, and

- model autonomy.

The persuasion category covers the risk that a model convinces people to change their beliefs or to act on them.

OpenAI CEO Sam Altman recently did a bit of foreshadowing on Twitter: "I expect AI to be capable of superhuman persuasion well before it is superhuman at general intelligence, which may lead to some very strange outcomes."

From low to critical

The framework defines safety thresholds. Each category is rated on a four-level scale ("low," "medium," "high," and "critical") that reflects the level of risk.

Only models with a post-mitigation risk rating of "medium" or lower can be deployed.

Only models with a post-mitigation risk rating of "high" or lower can be developed further. Development of models rated "critical" after mitigation must not continue.
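The two thresholds can be read as simple comparisons on an ordered scale. The following is a minimal sketch of that reading, not code from OpenAI; the names `RiskLevel`, `can_deploy`, and `can_develop_further` and the integer values are assumptions made for illustration.

```python
from enum import IntEnum


class RiskLevel(IntEnum):
    """Ordered risk levels (hypothetical encoding; only the order matters)."""
    LOW = 0
    MEDIUM = 1
    HIGH = 2
    CRITICAL = 3


def can_deploy(post_mitigation: RiskLevel) -> bool:
    # Deployment requires a post-mitigation rating of "medium" or lower.
    return post_mitigation <= RiskLevel.MEDIUM


def can_develop_further(post_mitigation: RiskLevel) -> bool:
    # Further development requires a post-mitigation rating of "high" or lower.
    return post_mitigation <= RiskLevel.HIGH
```

Under this reading, a model rated "high" after mitigation may still be worked on but not released, while a model rated "critical" fails both checks.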

A dedicated preparedness team within OpenAI will drive risk research, assessment, monitoring, and prediction. This team will report regularly to the Safety Advisory Group (SAG), an advisory body that assists OpenAI management and the Board of Directors in making informed safety decisions.

The Preparedness Team, which evaluates foundational AI models, is one of three OpenAI safety teams. The other two are the Safety Systems Team, which deals with current models, and the Superalignment Team, which aims to anticipate possible threats from superintelligent AI.

Scorecard and Governance System

The Preparedness Framework provides a dynamic scorecard that measures the current model risk before and after risk mitigation for each risk category. In addition, OpenAI defines security policies and procedural requirements.

To illustrate the practical application, OpenAI describes two possible scenarios. They are summarized here; the full description is in the paper.

Persuasion Risk Scenario: If a "high" persuasion risk is identified for a newly trained model before risk mitigation, the safety features are enabled and the risk mitigation actions are performed. Following these steps, the risk is rated as "medium" after risk mitigation.

Cybersecurity Risk Scenario: Following the discovery of a new, effective prompting technique, a "critical" cybersecurity risk is predicted within six months. This triggers the development of safety plans and the implementation of safety measures to ensure that the risk remains at a "high" level after mitigation.
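The two scenarios can be written down as scorecard entries that record a rating before and after mitigation. This is only an illustrative sketch: the `ScorecardEntry` class and its method names are hypothetical, the framework does not publish a data format, and the cybersecurity entry encodes a forecast rather than a measured rating.

```python
from dataclasses import dataclass
from enum import IntEnum


class RiskLevel(IntEnum):
    """Ordered risk levels (hypothetical encoding; only the order matters)."""
    LOW = 0
    MEDIUM = 1
    HIGH = 2
    CRITICAL = 3


@dataclass(frozen=True)
class ScorecardEntry:
    """One risk category with its rating before and after mitigation."""
    category: str
    pre_mitigation: RiskLevel
    post_mitigation: RiskLevel

    def deployable(self) -> bool:
        # "Medium" or lower after mitigation is required for deployment.
        return self.post_mitigation <= RiskLevel.MEDIUM

    def developable(self) -> bool:
        # "High" or lower after mitigation is required for further development.
        return self.post_mitigation <= RiskLevel.HIGH


# Persuasion scenario: "high" before mitigation, "medium" after.
persuasion = ScorecardEntry("persuasion", RiskLevel.HIGH, RiskLevel.MEDIUM)

# Cybersecurity scenario: "critical" is forecast, mitigations hold it at "high".
cybersecurity = ScorecardEntry("cybersecurity", RiskLevel.CRITICAL, RiskLevel.HIGH)

for entry in (persuasion, cybersecurity):
    print(entry.category, entry.deployable(), entry.developable())
```

Applying the thresholds to these entries, the persuasion case clears the bar for deployment, while the cybersecurity case only clears the bar for continued development.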

OpenAI calls on other industry players to adopt similar strategies to protect humanity from potential AI threats.