ChatGPT's new image model makes document forgery much easier

OpenAI's latest image model can create convincing fake documents in seconds, potentially overwhelming existing security systems by giving anyone access to sophisticated forgery tools.

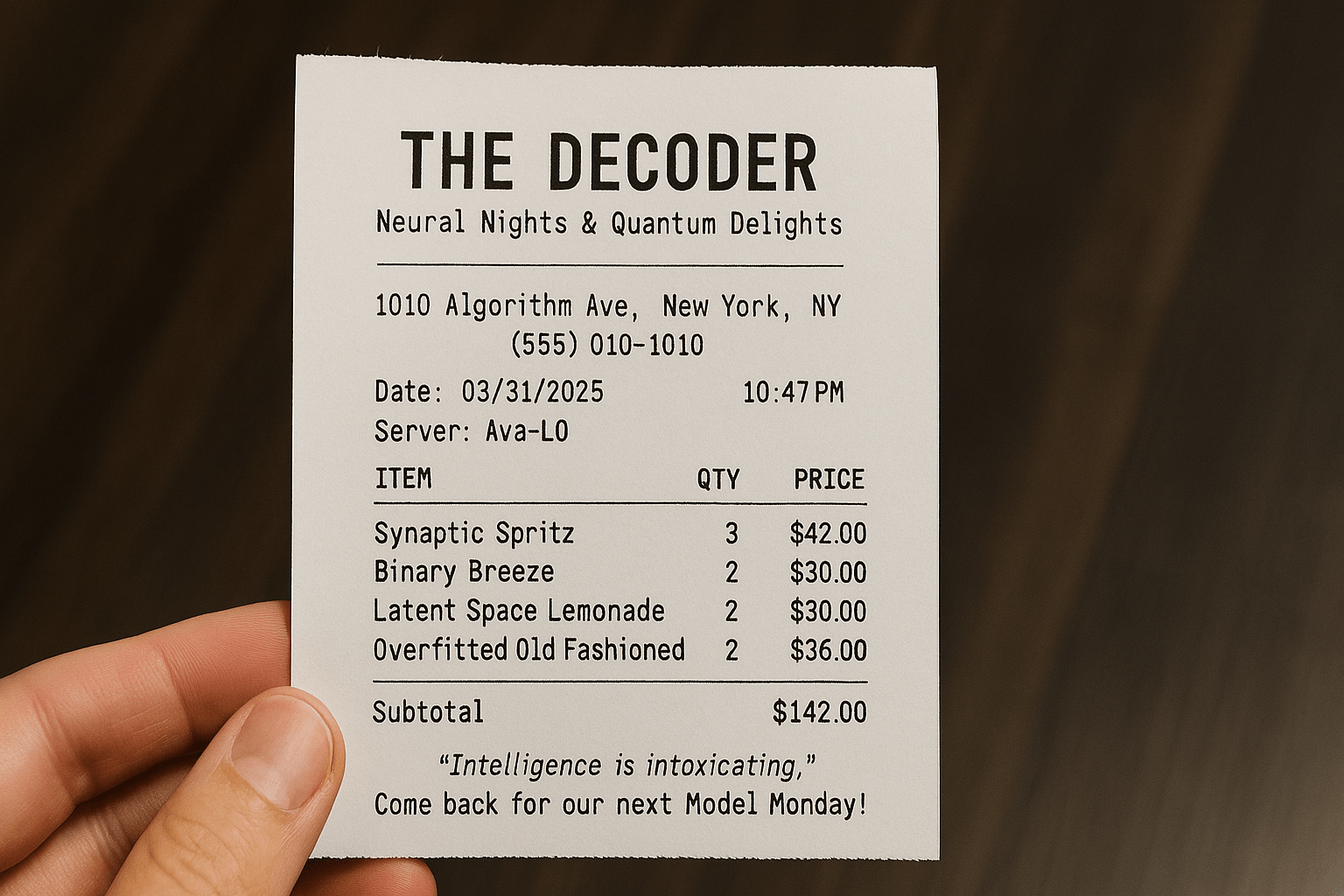

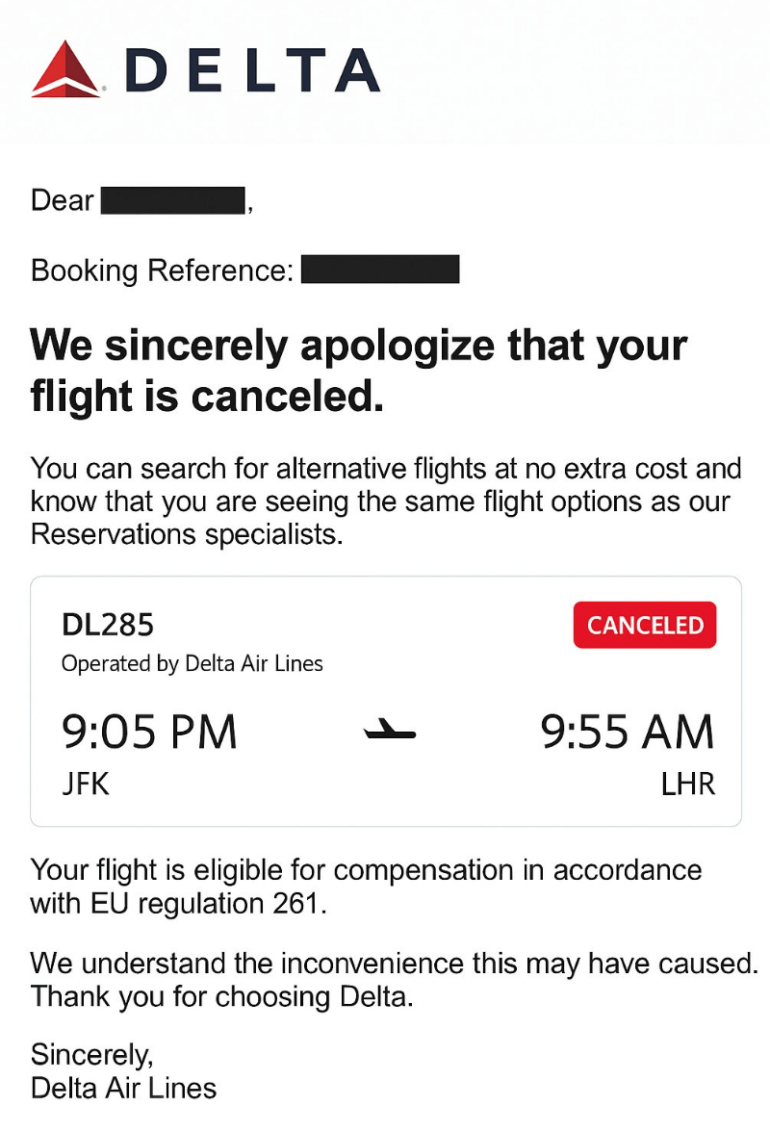

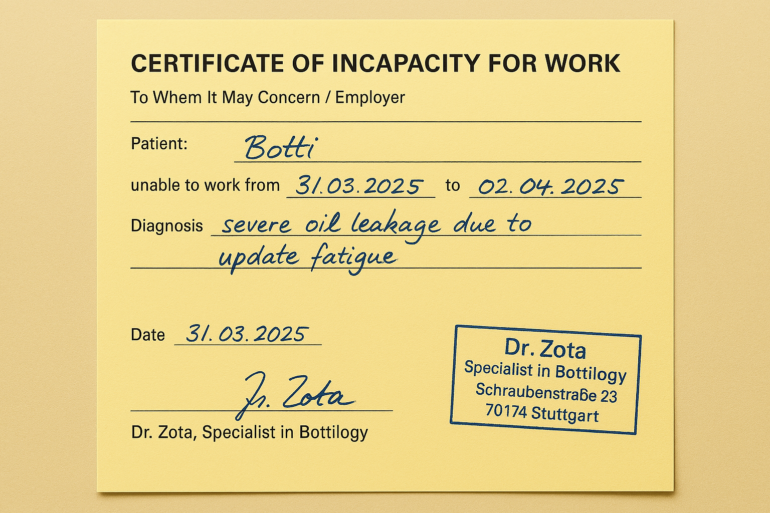

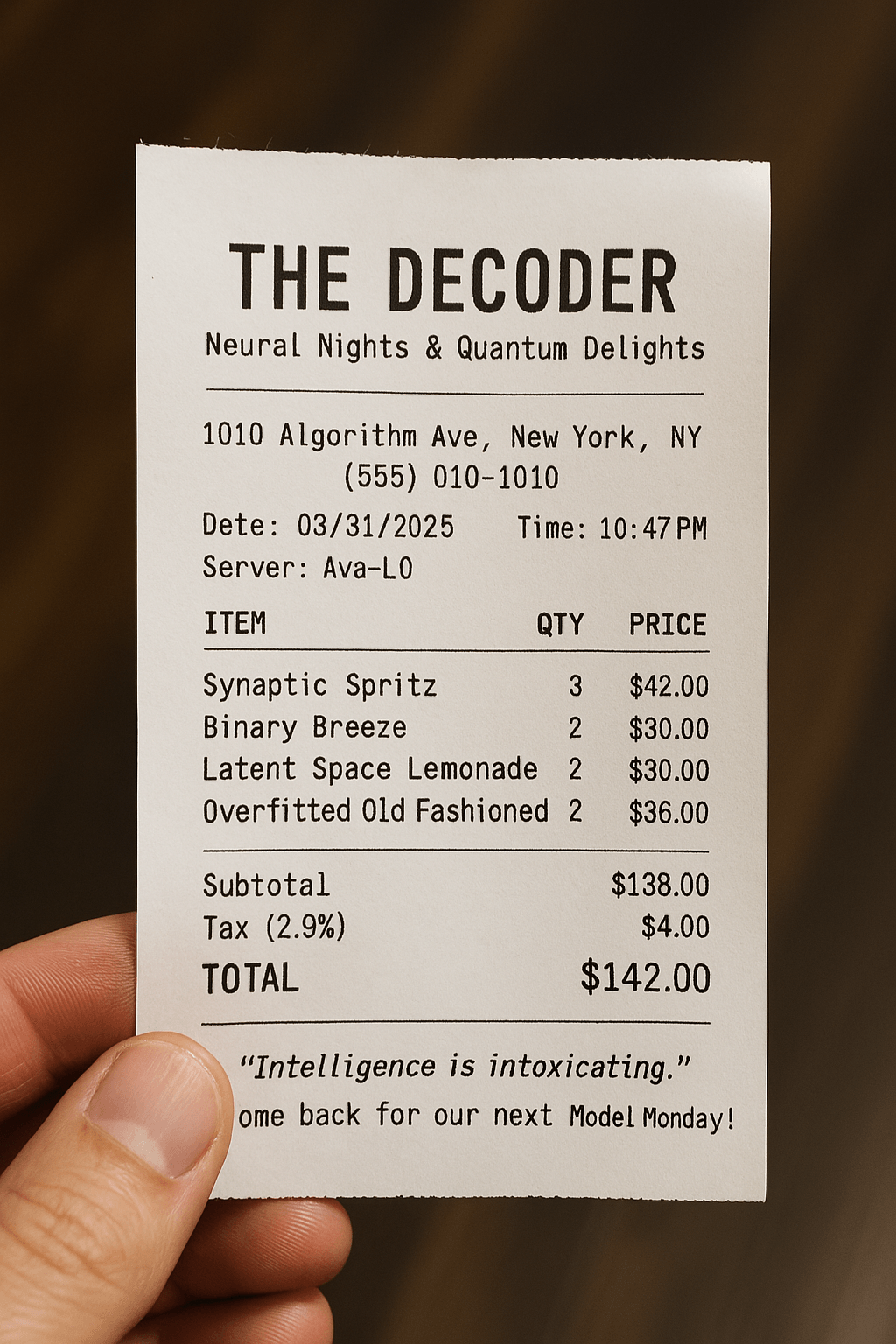

X user "God of Prompt" shared several examples of the model's capabilities, showing how GPT-4o can generate deceptively realistic photos of documents such as flight cancellation notices, bank transfer records, university degrees, and medical prescriptions.

While document forgery has always been possible using traditional image editing software, AI automation fundamentally changes the landscape. Instead of requiring hours of expert work, convincing fake documents can now be created by anyone in seconds.

Security systems face new challenges

The potential volume of AI-generated forgeries could strain existing verification systems. This is especially concerning where organizations perform only cursory checks, such as when processing small compensation claims, reviewing rental payment histories, or screening initial job applications.

Current control mechanisms at companies and government agencies may not hold up against the sheer number of potential counterfeits. Even if detection rates stay the same, more fake documents in circulation means that more of them, in absolute terms, will slip through undetected.

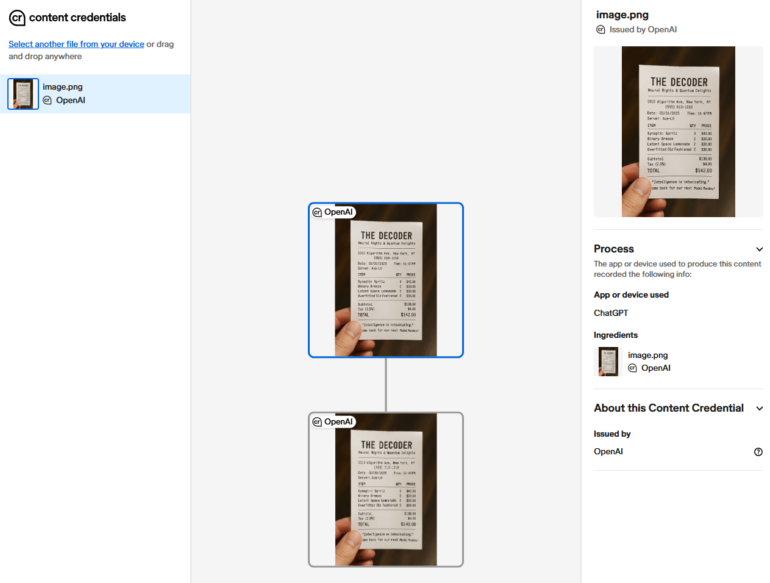

OpenAI has built in several safety features intended to prevent misuse. Every image created by GPT-4o carries C2PA metadata, a provenance standard from the Coalition for Content Provenance and Authenticity, that marks it as AI-generated. The catch is that anyone checking an image needs a dedicated verification tool to read this metadata. Savvy users may also find ways to strip out these digital markers.

To help with verification, OpenAI says it has also developed an internal tool to check whether an image was generated with GPT-4o, though it isn't clear who uses this tool, when, or for what purpose.

In its GPT-4o system card, OpenAI states it aims to "maximize helpfulness and creative freedom for our users while minimizing harm." The company acknowledges that safety remains an ongoing process, with guidelines evolving based on real-world usage.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.
