China captured the global lead in open-weight AI development during 2025, Stanford analysis shows

At least a dozen Chinese institutions now produce state-of-the-art AI models, and in terms of global distribution, they've apparently already overtaken their US competitors, according to a new Stanford Human-Centered AI Institute analysis.

When Deepseek made waves with its R1 model in early 2025, all eyes were on a single Chinese startup. That picture is incomplete, the Stanford researchers argue. "Chinese-made open-weight models are unavoidable in the global competitive AI landscape," the authors write. The ecosystem runs far broader and deeper than most people realize.

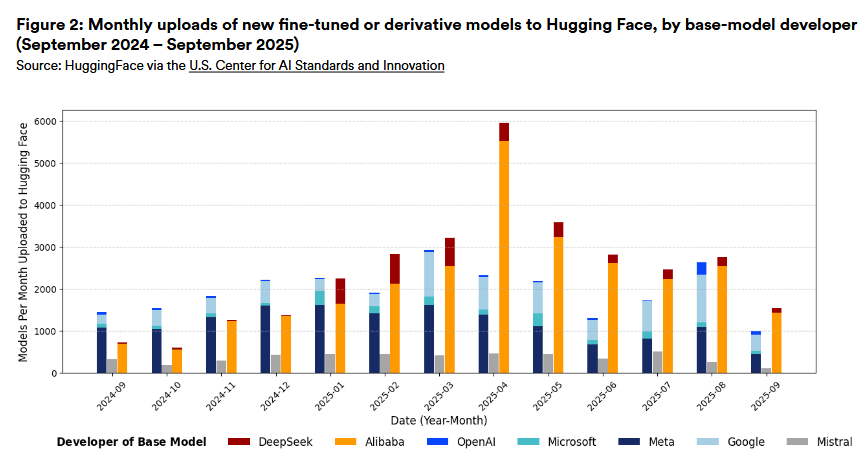

According to the study, Alibaba's Qwen model family replaced Meta's Llama as the most downloaded language model family on Hugging Face in September 2025. Between August 2024 and August 2025, Chinese developers accounted for 17.1 percent of all downloads, edging out US developers at 15.8 percent. The numbers are even more striking for derivative models: 63 percent of all new fine-tuned models on Hugging Face were based on Chinese base models in September 2025.

China's AI ecosystem extends far beyond Deepseek

The Stanford researchers push back against the idea that Deepseek is the only player that matters. Beyond the Hangzhou-based startup, they list more than a dozen Chinese organizations openly publishing high-performance models. These include the so-called "tiger" unicorns—Z.ai, Moonshot AI, MiniMax, Baichuan AI, StepFun, and 01.AI—along with tech giants Alibaba, Tencent, Baidu, Huawei, and ByteDance.

Baidu's shift is particularly telling. Its CEO had positioned himself as an advocate for closed models before the company released its Ernie 4.5 models in June 2025. Competitive pressure from successful open models with permissive licenses forced even staunch skeptics to reconsider.

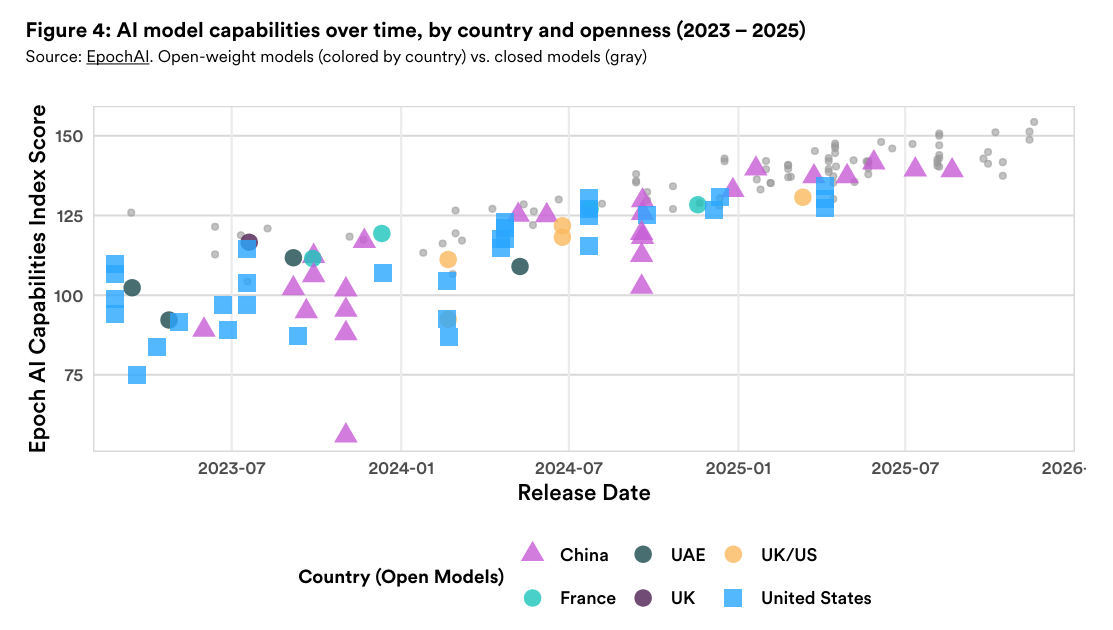

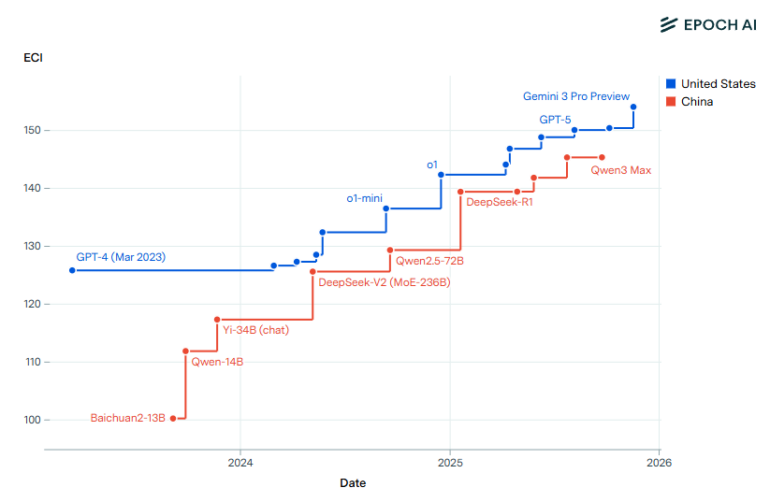

In terms of raw performance, the best Chinese open-weight models trail only slightly behind leading closed systems from Google, xAI, and OpenAI, according to the analysis. On the Chatbot Arena leaderboard, 22 releases from five Chinese labs outperformed the top-ranked open US model. Only one non-Chinese open model, developed by French company Mistral, made the top 25.

Chip embargo pushed Chinese developers toward efficiency

The researchers identify a technical focus running through the entire Chinese ecosystem. "Chinese AI developers are prioritizing the development of computationally efficient open-weight models," the report states.

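Mixture-of-experts architectures, which activate only a fraction of a model's parameters for each token, deliver better results with less computing power. This focus on efficiency is a direct response to US export controls that have restricted access to the most powerful AI chips since October 2022.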
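For readers curious why mixture-of-experts saves compute, here is a minimal sketch of top-k expert routing in plain Python. It is an illustration only, not any lab's actual implementation: the function names (`moe_forward`, `make_expert`), the toy "experts" that merely scale their input, and the sizes (8 experts, 2 active) are all assumptions made for this example.

```python
import math
import random


def softmax(scores):
    """Turn raw router scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, router_weights, experts, top_k=2):
    """Route one input vector through only the top_k highest-scoring experts.

    Returns the combined output and the indices of the experts that ran.
    """
    # Router: one dot product per expert gives a relevance score.
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in router_weights]
    probs = softmax(scores)

    # Keep the top_k experts; all others are skipped entirely.
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)

    output = [0.0] * len(x)
    for i in chosen:
        gate = probs[i] / norm  # renormalise gates over the chosen experts
        expert_out = experts[i](x)
        output = [o + gate * e for o, e in zip(output, expert_out)]
    return output, chosen


def make_expert(scale):
    """Hypothetical stand-in for an expert network: it only scales its input."""
    return lambda x: [scale * xi for xi in x]


random.seed(0)
DIM, NUM_EXPERTS, TOP_K = 4, 8, 2  # assumed toy sizes
experts = [make_expert(float(i + 1)) for i in range(NUM_EXPERTS)]
router_weights = [[random.uniform(-1, 1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]

x = [0.5, -1.0, 2.0, 0.1]
y, used = moe_forward(x, router_weights, experts, top_k=TOP_K)
print(f"experts run: {len(used)} of {NUM_EXPERTS}")
```

In this toy setup, only two of the eight experts do any work for a given input. That is the source of the savings: the model can hold many parameters while computing with only a small share of them per token.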

At the same time, licensing terms have become increasingly generous. While earlier versions contained restrictions on commercial use, current flagship models ship under Apache 2.0 or MIT licenses, with virtually no limits on use, modification, or redistribution. The researchers see this as a deliberate strategy shift to accelerate global adoption.

Chinese AI models trail their US counterparts by an average of seven months, according to a recent Epoch AI analysis. Since 2023, every model at the top of the performance rankings, measured by the Epoch Capabilities Index, has come from the United States. The gap has fluctuated between four and 14 months. The researchers see a connection to the split between proprietary and open-weight models: nearly all leading Chinese models are open-weight, while US frontier models remain closed.

Global adoption grows, but security concerns remain

Global adoption of Chinese models is picking up. Singapore's national AI program is building its flagship model on Alibaba's Qwen. Huawei is marketing Deepseek integration in cloud services for African markets. US companies are also turning to Chinese open-weight models, according to the analysis. Meta recently acquired agent startup Manus, which runs on Chinese open-weight LLMs.

The existence of "good enough" Chinese open-weight models could reduce global dependence on US companies offering models via APIs, the authors argue. For users with limited resources, especially in low- and middle-income countries, affordable access and reliable availability may matter more than marginal performance differences.

However, the researchers also flag security issues. Tests by the Center for AI Standards and Innovation (CAISI), a US government body, found that Deepseek models are on average twelve times more vulnerable to jailbreaking attacks than comparable US models. "There is some evidence that Chinese developers may be less focused than their U.S. counterparts on the various risks under the rubric of 'AI safety,'" the authors write.

Beijing's support for open AI development isn't guaranteed

The authors describe the Chinese government's role as mixed. Beijing has rhetorically supported open-source development since its 2017 AI development plan, and internationally China has positioned itself as an advocate for equal technological development, in deliberate contrast to US export controls and closed models.

Deepseek itself apparently achieved its breakthrough with little direct state support. Only after the success of the V3 model did the company receive political recognition, when Premier Li Qiang invited the founder to a government symposium.

At the same time, the researchers point to reports of travel restrictions for Deepseek executives. "It is by no means guaranteed that the Chinese government will continue to support open-weight AI model development," they warn. A serious AI incident or perceived threat to national security could prompt the government to quickly restrict open model development.

Deepseek's success forced a US policy shift

In the US, Deepseek's success triggered a change in direction. President Trump called the R1 model a "wake-up call." In July 2025, America's AI Action Plan elevated open models to a strategic asset. A month later, OpenAI published open-weight models for the first time in almost six years, a move the researchers see as a direct response to Chinese competition.

"The Deepseek moment—and subsequent open-weight models released by other Chinese AI labs—likely played a pivotal role in expanding the willingness to accept the risks related to open-weight model development to compete more directly with China," the authors write.

Still, the Stanford researchers urge caution. Benchmark results are often self-reported and should be viewed with skepticism. China's current lead in adoption is only a year old and could shift again. They recommend basing policy decisions on a precise understanding of actual deployment scenarios. And they advocate maintaining dialogue with Chinese researchers and developers: "Selective engagement with Chinese labs, academics, and policymakers should not be avoided or unnecessarily constrained."

An earlier analysis of Hugging Face data showed that Chinese developers had overtaken their US competitors in download numbers for open AI models. At the same time, concerns about political influence through Chinese AI models are growing. An investigation by US media watchdog NewsGuard found that leading Chinese systems repeat or fail to correct pro-Chinese false claims 60 percent of the time. As Western companies, startups in particular, increasingly adopt these models, not least because of their low cost, the risk grows that state-controlled narratives will gradually spread.
