CLIPN teaches CLIP the "semantics of negation", which should help computer vision models recognize classes that were not part of their training data.

Computer vision models recognize the kinds of objects they were trained on. In real-world applications, however, these models often encounter unknown objects outside their training data, which leads to poor results. AI researchers have proposed several techniques that let models recognize when an input is "out-of-distribution" (OOD), that is, from an unknown class not seen during training. However, existing methods struggle to identify OOD examples that resemble known classes.

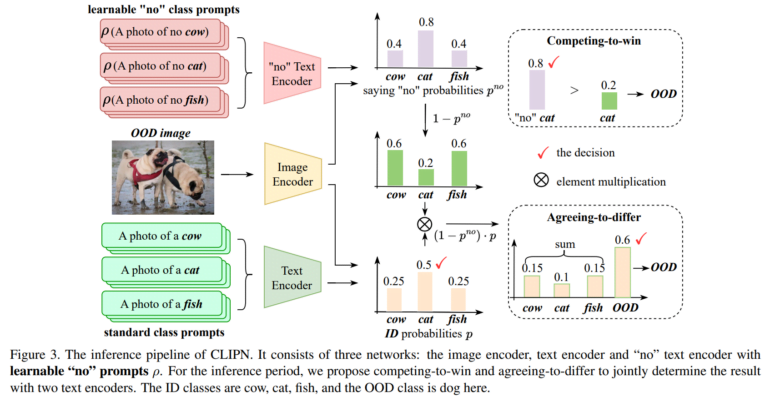

Researchers at the Hong Kong University of Science and Technology have now developed a new technique called CLIPN that aims to improve OOD detection by teaching the well-known CLIP model to reject unknown inputs. The basic idea is to use both positive and negative text prompts, along with custom training techniques, to give CLIP a sense of when an input is OOD.

The challenge: hard-to-distinguish unknowns

Suppose a model has been trained on images of cats and dogs. If it is asked to process an image of a squirrel, the squirrel is an out-of-distribution class because it does not belong to the known classes of cats and dogs.

Many OOD detection methods score how well an input matches the known classes. However, these methods may misclassify the squirrel image as a cat or a dog because the squirrel shares some visual features with them.
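To make that failure mode concrete, here is a minimal sketch of a matching-based score in the spirit of maximum-softmax-probability baselines applied to CLIP-style similarities. It is not any specific published method: the 3-D vectors stand in for image and text embeddings and are made up, and the temperature and the 0.9 threshold are illustrative assumptions.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def softmax(scores, temperature=1.0):
    """Numerically stable softmax over a list of scores."""
    scaled = [s / temperature for s in scores]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def classify_with_confidence(image_emb, class_embs, temperature=0.01):
    """Return the best-matching known class and its softmax probability.

    A low probability is read as "probably OOD"; a high one as "known class".
    """
    labels = list(class_embs)
    sims = [cosine(image_emb, class_embs[k]) for k in labels]
    probs = softmax(sims, temperature)
    best = max(range(len(labels)), key=lambda i: probs[i])
    return labels[best], probs[best]


# Hypothetical toy "text embeddings" for the two known classes.
class_embs = {"cat": [1.0, 0.1, 0.0], "dog": [0.1, 1.0, 0.0]}
# Hypothetical squirrel image embedding that shares features with "cat".
squirrel = [0.8, 0.3, 0.5]

THRESHOLD = 0.9  # hypothetical OOD cutoff
label, score = classify_with_confidence(squirrel, class_embs)
print(label, f"{score:.3f}", "OOD" if score < THRESHOLD else "in-distribution")
# prints: cat 1.000 in-distribution
```

Because the score only asks "which known class fits best?", a squirrel that resembles a cat gets labeled "cat" with near-certain confidence and slips past the threshold.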

CLIPN therefore extends CLIP with new learnable "no" prompts and a "no" text encoder to capture the semantics of negation. In this way, CLIP learns when and how to say "no", which lets it recognize when an image falls outside its known classes. In the squirrel example, CLIPN teaches the model to say "No, that's not a cat/dog", flagging the image as OOD.
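The "yes/no" idea can be illustrated with a simplified sketch. It is loosely modeled on the description above and is not CLIPN's actual architecture, training losses, or inference rule. The sketch assumes each known class has a "yes" embedding (from a prompt like "a photo of a cat") and a "no" embedding (standing in for what CLIPN's learned "no" prompts and "no" text encoder would produce). All vectors, the temperature, and the 0.5 decision rule are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def softmax(scores, temperature=1.0):
    """Numerically stable softmax over a list of scores."""
    scaled = [s / temperature for s in scores]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def yes_no_decision(image_emb, yes_embs, no_embs, temperature=0.01):
    """Return (label, p_no); label is "OOD" if the "no" branch rejects the best class.

    yes_embs: class -> embedding of a positive prompt ("a photo of a cat")
    no_embs:  class -> hypothetical embedding of a negated prompt ("a photo of no cat")
    """
    labels = list(yes_embs)
    # Step 1: ordinary CLIP-style matching picks the most similar known class.
    probs = softmax([cosine(image_emb, yes_embs[k]) for k in labels], temperature)
    best = labels[max(range(len(labels)), key=lambda i: probs[i])]
    # Step 2: binary "yes" vs. "no" comparison for that one class.
    s_yes = cosine(image_emb, yes_embs[best])
    s_no = cosine(image_emb, no_embs[best])
    p_no = softmax([s_yes, s_no], temperature)[1]
    # Step 3: if "no, that's not a <class>" is more likely, flag the input as OOD.
    return ("OOD" if p_no > 0.5 else best), p_no


# Hypothetical toy embeddings (not real CLIP or CLIPN outputs).
yes_embs = {"cat": [1.0, 0.1, 0.0], "dog": [0.1, 1.0, 0.0]}
no_embs = {"cat": [0.6, 0.2, 0.6], "dog": [0.2, 0.6, 0.6]}

print(yes_no_decision([0.95, 0.15, 0.05], yes_embs, no_embs)[0])  # prints: cat
print(yes_no_decision([0.8, 0.3, 0.5], yes_embs, no_embs)[0])     # prints: OOD
```

The squirrel vector still matches "cat" best in step 1, but it is even closer to the "no cat" embedding, so step 2 overrides the match. A plain matching score cannot express that kind of rejection.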

In experiments, the team shows that CLIPN identifies OOD examples that standard CLIP misses. According to the researchers, CLIPN improves OOD detection on nine benchmark datasets by up to nearly 12 percent compared to existing methods.

However, the researchers note that it remains unclear whether the method works on specialized datasets, such as medical or satellite images, and whether it transfers to other tasks, such as image segmentation.