Cosine trained GPT-4o on high-quality data to "codify human reasoning" for its Genie assistant

Cosine, a San Francisco-based AI startup, has unveiled Genie, a new AI model designed to assist software developers. The company claims Genie significantly outperforms competitors on benchmarks.

Working with OpenAI, Cosine trained a GPT-4o variant on high-quality data and reports impressive benchmark scores. The company says the key to Genie's performance is its ability to "codify human reasoning," which could have applications beyond software development.

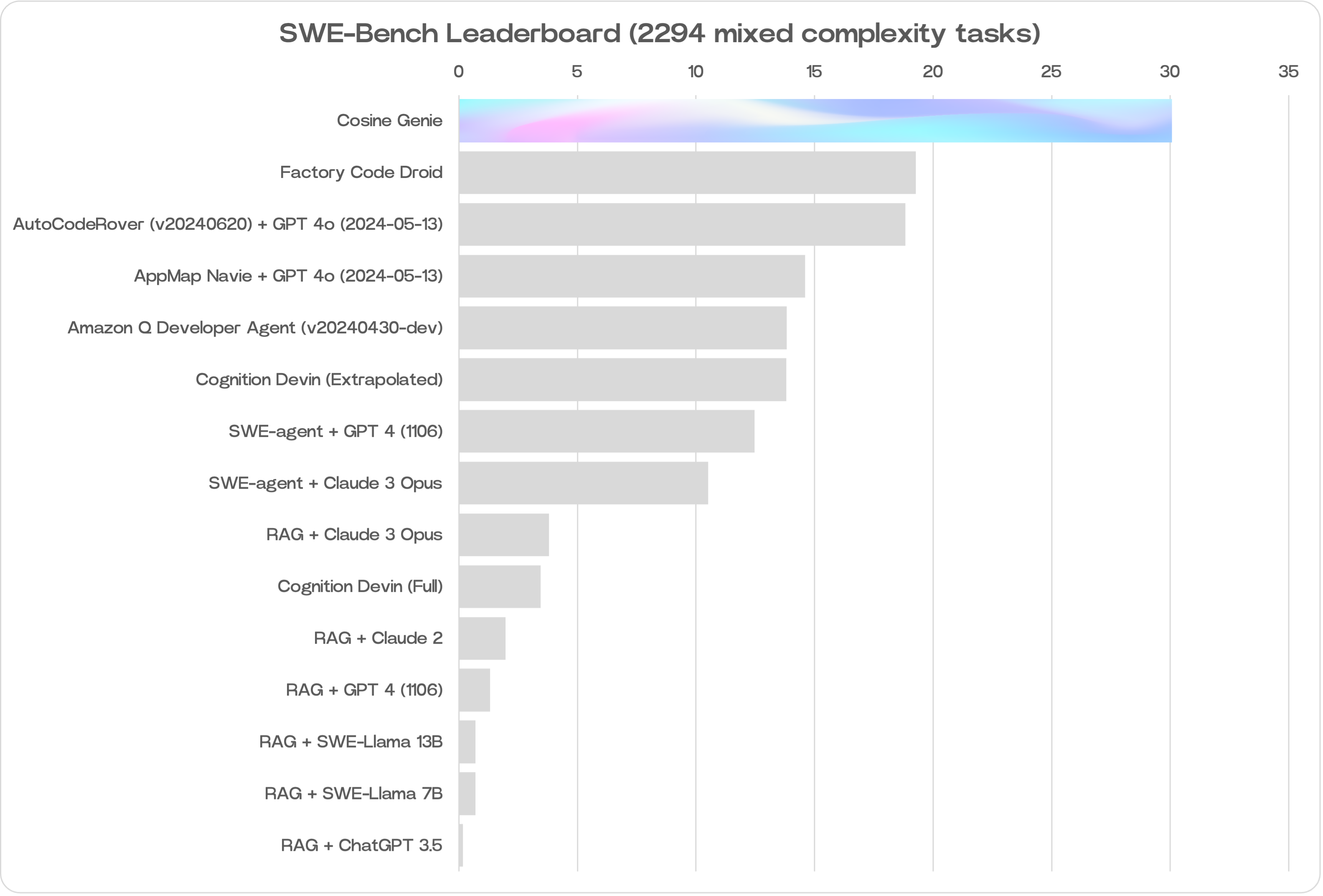

Genie takes the SWE-lead

Cosine co-founder and CEO Alistair Pullen reports that Genie achieved a 30 percent score on the SWE-Bench test, the highest ever for an AI model in this area. This surpasses other coding-focused language models, including those from Amazon (19 percent) and Cognition's Devin (13.8 percent on a portion of SWE-Bench).

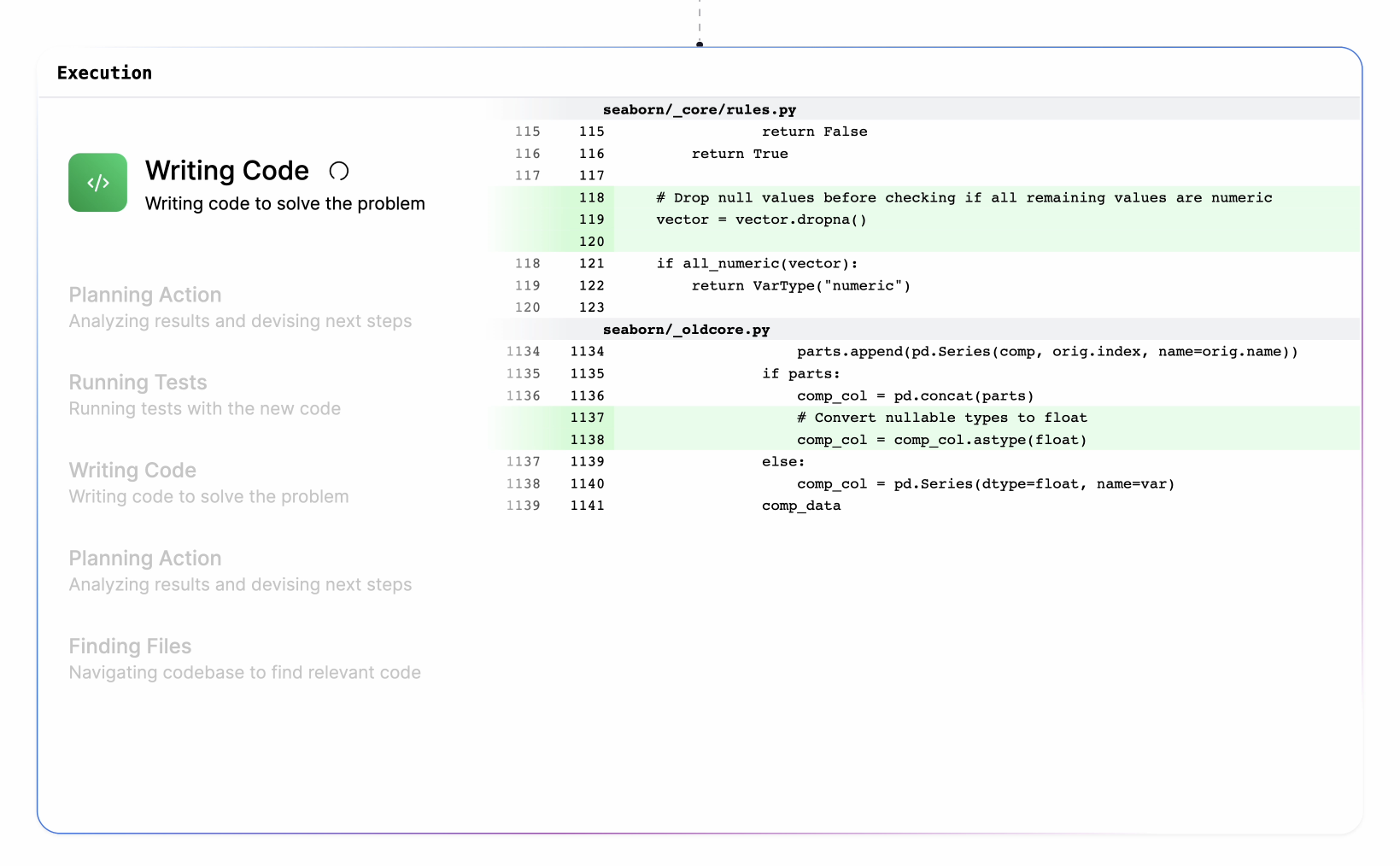

Genie's architecture aims to replicate the cognitive processes of human developers. It can autonomously and collaboratively fix bugs, develop new software features, restructure code, and perform various programming tasks.

Self-improvement through synthetic data

The model was developed using a proprietary process: Cosine trained and fine-tuned a non-public GPT-4o variant on billions of tokens of high-quality data. The company spent nearly a year curating this data with help from experienced developers. The dataset comprises 21 percent each of JavaScript and Python, 14 percent each of TypeScript and TSX, and 3 percent each of other languages ranging from Java to C++ to Ruby.

According to Cosine, a key factor in Genie's performance was its self-improving training. Initially, the model learned mostly from perfect, working code and therefore struggled to handle its own mistakes.

Cosine addressed this by using synthetic data: if Genie's first proposed solution was incorrect, the model was shown how to improve using the correct result. With each iteration, Genie's solutions improved, requiring fewer corrections.
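Cosine has not published its pipeline, but the general shape of such a loop can be illustrated with a toy Python sketch. Everything here is hypothetical: `collect_corrections`, `ToyModel`, and `self_improve` are illustrative names, and the "model" simply memorizes a limited number of corrections per round instead of being fine-tuned. The sketch only shows the idea: run the model, keep its wrong answers paired with the correct result, train on those pairs, and repeat.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class CorrectionExample:
    task: str
    wrong_attempt: str  # the model's own incorrect first proposal
    correct: str        # the known correct result it should learn instead


def collect_corrections(
    tasks: dict[str, str], propose: Callable[[str], str]
) -> list[CorrectionExample]:
    """Run the model on every task and keep only failures, paired with the fix."""
    examples = []
    for task, correct in tasks.items():
        attempt = propose(task)
        if attempt != correct:
            examples.append(CorrectionExample(task, attempt, correct))
    return examples


class ToyModel:
    """Hypothetical stand-in for a fine-tunable model: it 'learns' by
    memorizing at most `capacity` corrections per training round."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.memory: dict[str, str] = {}

    def propose(self, task: str) -> str:
        # Unlearned tasks get a wrong placeholder answer.
        return self.memory.get(task, "incorrect draft")

    def fine_tune(self, examples: list[CorrectionExample]) -> None:
        for ex in examples[: self.capacity]:
            self.memory[ex.task] = ex.correct


def self_improve(tasks: dict[str, str], model: ToyModel, rounds: int) -> list[int]:
    """Return how many corrections were needed in each round."""
    history = []
    for _ in range(rounds):
        corrections = collect_corrections(tasks, model.propose)
        history.append(len(corrections))
        if not corrections:
            break  # no mistakes left to learn from
        model.fine_tune(corrections)
    return history


tasks = {f"bug-{i}": f"patch-{i}" for i in range(5)}
print(self_improve(tasks, ToyModel(capacity=2), rounds=5))
```

With five tasks and a capacity of two corrections per round, the sketch prints `[5, 3, 1, 0]`: each round needs fewer corrections, mirroring the trend Cosine describes. A real pipeline would check attempts with tests rather than exact string comparison.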

Overcoming technical limitations

Pullen recognized the potential of large language models to support human software developers in early 2022. However, the technology wasn't yet advanced enough to realize Genie's vision at that time.

At the time, the context window, often limited to 4,000 tokens, was a major bottleneck. Today, models like Gemini 1.5 Pro can process up to two million tokens in a single prompt. Cosine hasn't disclosed the size of Genie's context window.

Cosine believes it has an advantage over coding assistants that simply wrap general models like GPT-4 as separate products. "Everyone working on this problem is butting up against the same limit of model intelligence, this is why we chose to train rather than prompt," Pullen explains.

Video: Alistair Pullen/X

Beyond software development

Cosine plans to expand its portfolio with both smaller, specialized models and larger, more general ones. The company will also increase its involvement in open-source communities and regularly enhance Genie's capabilities based on customer feedback. Given the size of its dataset, Cosine does not rule out full model training in the future.

Pullen believes the concept has applications beyond programming: "We truly believe that we’re able to codify human reasoning for any job and industry. Software engineering is just the most intuitive starting point and we can’t wait to show you everything else we’re working on."

Cosine will offer Genie in two pricing tiers: a roughly $20 option with some feature and usage limitations, and an enterprise-level offering with advanced features and virtually unlimited usage. Currently, interested parties can only join a waiting list.

The Y Combinator-backed startup recently secured $2.5 million in seed funding from several venture capital firms to support Genie's development.

Cosine appears to have combined key insights from recent AI developments: mimicking how humans work can lead to better outcomes, and training data quality is crucial. AI models like Genie could significantly affect the software development industry by increasing developer productivity. Note, however, that the benchmark results so far are self-reported by the company.
