OpenAI has unveiled GPT-4o, its latest large multimodal model that sets new standards in performance and efficiency by combining text, image, and audio processing in a single neural network.

The "o" in GPT-4o stands for "omni," reflecting the model's ability to process multiple input and output types through the same neural network.

One of GPT-4o's key features is its audio capability. The model can respond to audio input in as little as 232 milliseconds, with an average of 320 milliseconds, which is comparable to human response times in conversation. By comparison, ChatGPT's previous voice mode took an average of 2.8 seconds (GPT-3.5) or 5.4 seconds (GPT-4) to respond.

GPT-4o can also distinguish between calm and excited breaths, express various emotions in synthetic speech, and even change its voice to a robotic sound or sing on request, OpenAI demonstrated.


In terms of text performance, GPT-4o matches GPT-4 Turbo in English and significantly outperforms it in non-English languages, according to OpenAI. The model's vision capabilities allow it to analyze video or graphics in real time, recognize and describe emotions in faces, and react accordingly.

GPT-4o is more efficient and cheaper than GPT-4 Turbo

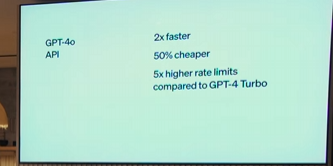

Efficiency was a key focus in the development of GPT-4o, with OpenAI claiming that the new model is twice as fast and 50% cheaper than its predecessor, GPT-4 Turbo.

OpenAI is expanding access to cutting-edge AI models by making GPT-4o available for free in ChatGPT, although free users get lower rate limits than paying customers and API users. Paid ChatGPT users get a rate limit that is five times higher.

Still, this is the first time that a GPT-4-level model, the best in the world according to OpenAI, is available to the public for free.

Developers can use GPT-4o in the API as a text and "vision" model. OpenAI plans to make the audio and video capabilities available to a select group of trusted partners in the coming weeks.
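As a rough illustration of what "text and vision" access looks like for developers, the sketch below builds (but does not send) a request body that combines a text prompt with an image. The model identifier `gpt-4o` and the message layout follow OpenAI's Chat Completions API as commonly documented; neither is taken from the announcement text above. The helper name `build_vision_request` and the example URL are hypothetical.

```python
import json


def build_vision_request(prompt: str, image_url: str) -> dict:
    """Build a Chat Completions request body with one text part and one image part.

    Hypothetical helper for illustration only; it does not contact any server.
    """
    return {
        "model": "gpt-4o",  # assumed API model identifier
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
    }


# Placeholder prompt and URL, not real data.
payload = build_vision_request(
    "Describe this chart.", "https://example.com/chart.png"
)
print(json.dumps(payload, indent=2))
```

To actually run a request, a body like this would be sent with an API key to the chat completions endpoint, for example through OpenAI's official client library.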

"Our initial conception when we started OpenAI was that we’d create AI and use it to create all sorts of benefits for the world. Instead, it now looks like we’ll create AI and then other people will use it to create all sorts of amazing things that we all benefit from," writes OpenAI CEO Sam Altman.

GPT-4o outperforms GPT-4 Turbo

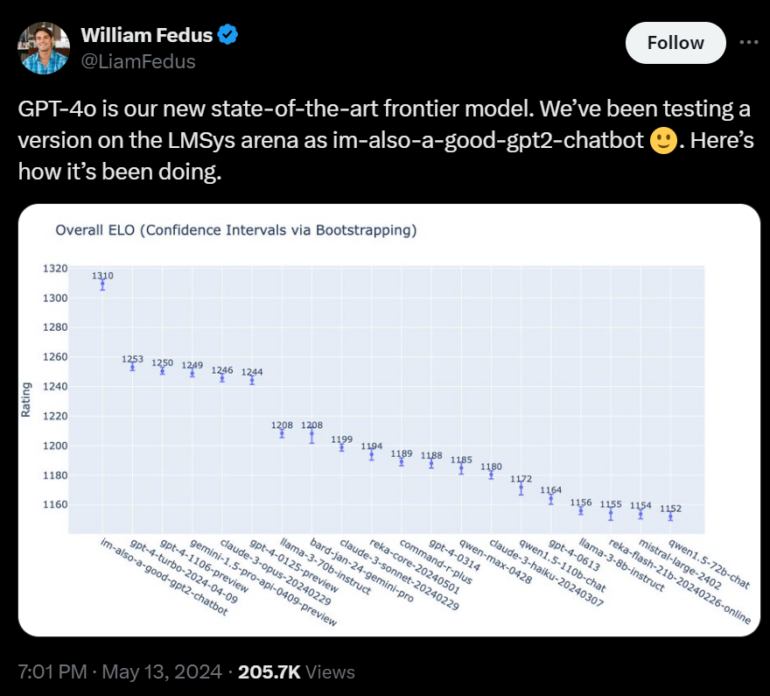

To demonstrate GPT-4o's performance, OpenAI tested the model in the LMSYS Chatbot Arena, a benchmark for language models. According to OpenAI researcher William Fedus, it outperformed its predecessor, GPT-4 Turbo, by about 60 Elo points.

The Elo score is currently considered one of the most important indicators of a model's performance because it comes from blind tests in which humans judge the models' actual output. Elo is a rating system originally used in chess to measure relative playing strength. The higher the Elo rating, the better a player, or in this case an AI model, performs in comparison. The data comes from the Chatbot Arena, where OpenAI recently let the model compete under a pseudonym.

The advantage is even more significant in challenging tasks, especially in programming, where GPT-4o achieves an Elo score 100 points higher than its predecessor, Fedus said.
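To give a feel for what these rating gaps mean, the following sketch converts an Elo difference into an expected score (roughly, the share of head-to-head comparisons the higher-rated model would be expected to win). It assumes the standard chess Elo formula with a 400-point scale; the Chatbot Arena may compute its ratings differently, so the results are only an approximation. The function name is hypothetical.

```python
def elo_expected_score(rating_gap: float) -> float:
    """Expected score of the higher-rated side under the standard Elo formula.

    A draw counts as half a win. Assumes the usual 400-point logistic scale.
    """
    return 1.0 / (1.0 + 10.0 ** (-rating_gap / 400.0))


# Rating gaps quoted in the article: overall (60) and programming tasks (100).
for gap in (60, 100):
    print(f"{gap:>3} Elo points -> expected score {elo_expected_score(gap):.1%}")
```

Under these assumptions, a 60-point lead corresponds to an expected score of about 58.5 percent, and a 100-point lead to about 64 percent.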

According to OpenAI, GPT-4o matches the performance of GPT-4 Turbo on traditional text, reasoning, and programming benchmarks, but sets new benchmarks for multilingual, audio, and visual comprehension.

For example, GPT-4o scored a new high of 87.2 percent on the general knowledge benchmark MMLU (5-shot). It also significantly outperforms GPT-4 and other models in speech recognition and translation, and in tasks involving diagrams (M3Exam). The model also leads in visual perception tests, OpenAI says.

GPT-4o's new tokenizer breaks text down into tokens more efficiently, which speeds up processing and reduces memory requirements, especially for non-Latin scripts. For example, the sentence "Hello, my name is GPT-4o" requires 3.5 times fewer tokens in Telugu and 1.2 times fewer in German than before.
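The "times fewer" figures can be turned into percentage savings with simple arithmetic, as in this minimal sketch. It only uses the two ratios quoted above; the function name is hypothetical, and no actual token counts are assumed.

```python
def token_savings(times_fewer: float) -> float:
    """Fraction of tokens saved when a text needs `times_fewer` times fewer tokens."""
    if times_fewer <= 0:
        raise ValueError("times_fewer must be positive")
    return 1.0 - 1.0 / times_fewer


# Ratios quoted in the article for the example sentence.
for language, factor in {"Telugu": 3.5, "German": 1.2}.items():
    print(f"{language}: {factor}x fewer tokens = {token_savings(factor):.0%} saved")
```

By this calculation, the Telugu sentence needs about 71 percent fewer tokens and the German sentence about 17 percent fewer.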

OpenAI focused its announcement on GPT-4o. Information about the next major model will follow soon, OpenAI CTO Mira Murati said on stage.

ChatGPT gets a desktop app

OpenAI has also introduced a new desktop app for ChatGPT on macOS, with a Windows version to follow, and an updated web design to make the experience more user-friendly and conversational.


The app allows quick access to ChatGPT via keyboard shortcuts, and users can discuss screenshots directly within the app. A voice mode allows for conversations with ChatGPT, and integration of GPT-4o's audio and video capabilities is planned for the future.

Safety, availability, and limits

According to OpenAI, safety is built into GPT-4o by design across all input types, for example through filtering of training data and refinement of the model's behavior after training. New safety systems have also been created for audio output.

OpenAI has evaluated GPT-4o against its own Preparedness Framework and has so far worked with more than 70 external experts to identify and mitigate the risks posed by the new modalities. For now, only text and image input and text output will be released to the public.

The other input and output types will follow once the technical infrastructure, usability, and safety are in place. An alpha version of the new voice mode with GPT-4o will be released in ChatGPT Plus in the coming weeks.

Despite the progress, GPT-4o still has limitations, for example with complex multi-step tasks and with understanding context and nuance, the company says. OpenAI is seeking feedback to further improve the model.