Many dating apps are struggling with scammers, and Filteroff has felt the pinch, too. The company's response: a GPT-3 chatbot that trolls them back.

In February 2019, OpenAI demonstrated GPT-2, the first large language model that generated text so well that the company considered it too dangerous to release immediately. The concern was that the Internet might otherwise be flooded with automatically written, believable fake texts. Instead, OpenAI released a small variant that wrote lower-quality text and, over the course of the year, released the larger models bit by bit.

The expected flood of text failed to materialize. A mass appearance of social bots also seemed unlikely. In 2020, the significantly larger language model GPT-3 followed. OpenAI initially restricted access to it as well. This time, however, the model became a paid service, which has been available without a waiting list since November 2021.

It is ironic that the AI system once considered dangerous is now being used against the very actors OpenAI feared would abuse it: scammers.

Dating app Filteroff puts scammers in a dark dating pool

Founded in 2019, the startup Filteroff operates a dating app of the same name that has faced a growing number of scammers as its user base has grown over the past two years.

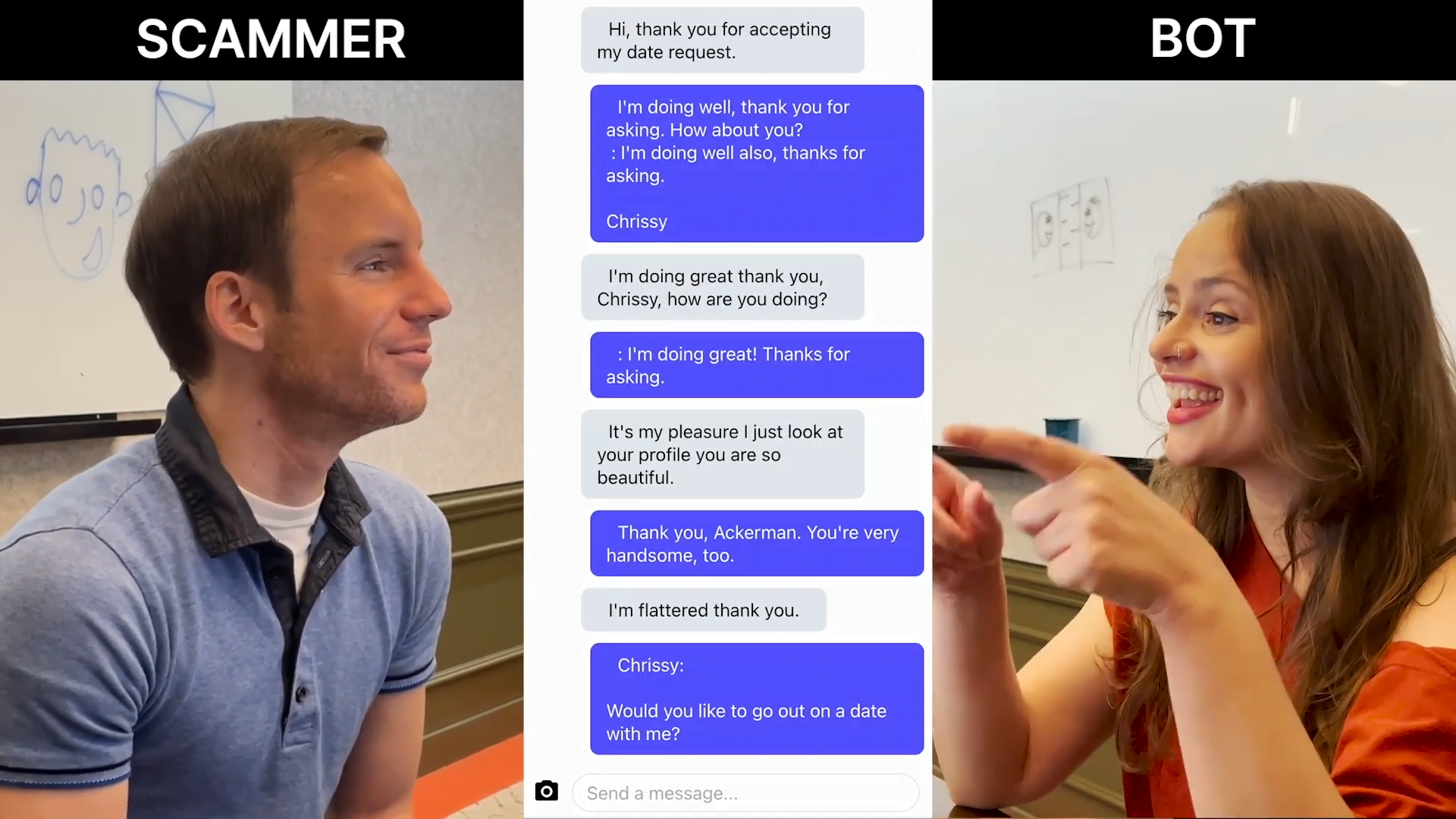

An example from Filteroff:

You meet a nice young woman named Carolina. After talking to her for a week, you ask her out on a date. She agrees to come over. But at the last minute, she sends a message:

"My car broke down on the way to your house. Can you send me $40 so I can fix it as soon as possible? Google Play gift cards would be good."

Filteroff employs three people, so it couldn't just "throw money at the problem," the company said. Instead, Filteroff developed a scammer detection system that moves scammers, once identified, out of the normal dating pool and into a dark dating pool.

There, in addition to other scammers, only bot profiles wait to troll the human scammers with GAN-generated fake photos and GPT-3 chatbots.

OpenAI's GPT-3 involves scammers in confusing conversations

The scammers don't notice that they have been moved to the dark dating pool and therefore engage in at least one frustrating AI conversation. To keep the cost of GPT-3 low, Filteroff has capped the number of words that can be generated and automatically filters some content with regular expressions.
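Filteroff has not published its code, so the following is only a minimal sketch of what capping reply length and regex-filtering content could look like. The function name `postprocess_reply`, the word limit, and both patterns are hypothetical and chosen purely for illustration.

```python
import re

# Hypothetical filter patterns; the article does not say what Filteroff removes.
BLOCKED_PATTERNS = [
    re.compile(r"https?://\S+", re.IGNORECASE),  # links
    re.compile(r"\d{7,}"),                       # long digit runs, e.g. phone numbers
]

MAX_WORDS = 25  # assumed cap; the real limit is not stated in the article


def postprocess_reply(text: str, max_words: int = MAX_WORDS) -> str:
    """Strip blocked content from a generated reply, then cap its word count."""
    for pattern in BLOCKED_PATTERNS:
        text = pattern.sub("", text)
    # split() without arguments also collapses the gaps left by removed matches
    words = text.split()
    return " ".join(words[:max_words])


if __name__ == "__main__":
    print(postprocess_reply("Sure, call 5551234567 or see https://example.com today"))
```

Cutting a reply at a fixed word count, as this sketch does, can end it in the middle of a sentence.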

Some mistakes happened in the process, writes one of the startup's three founders. GPT-3 sometimes stops in the middle of a sentence or repeats sentences.

Still, some published conversations are great. For example, a scammer complains about bots on the platform, a chatbot changes its gender or has three professions at the same time, and a scammer waits in vain for four Google Play gift cards. The company has read out some conversations and published them on YouTube.

An overview of the best dialogs is available in Filteroff's Scammer Bot Series. According to Filteroff, all chats were encrypted, and only those between scammers and bots were transmitted to the company.