Researchers at Google DeepMind and the University of Southern California have unveiled Self-Discover, a framework that enables language models to find logical reasoning prompts for complex tasks on their own.

Despite recent progress, logical reasoning remains one of the biggest challenges for large language models. Self-Discover tackles this by letting a language model compose its own reasoning structure for a complex problem instead of relying on a single fixed prompting technique.

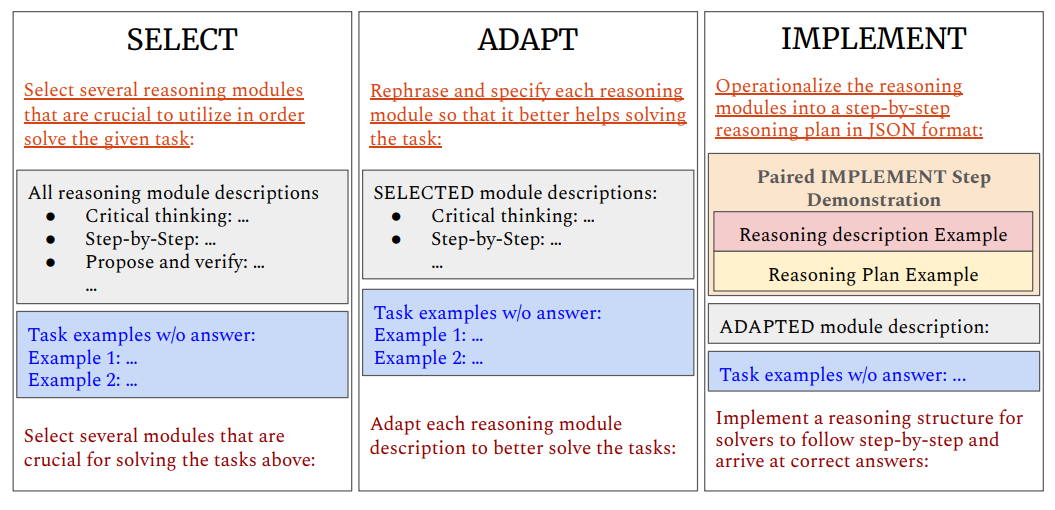

Select, adapt, implement

The framework provides the language model with a set of reasoning prompts, such as "step-by-step," "critically examine," or "break down into sub-problems."

In the first phase, the model selects the reasoning prompts that fit a given task, adapts them to that specific task, and finally combines them into an actionable plan.

In the second phase, the model attempts to solve the task simply by following the plan it has developed.
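The two phases can be sketched in a few lines of Python. This is a minimal illustration, not the researchers' implementation: the `LLM` callable, the prompt wording, and the function names `discover_structure` and `solve` are assumptions made for this example, and the module list contains only the three prompts quoted above.

```python
from typing import Callable, List

# Assumed interface: any function that maps a prompt string to a completion string.
LLM = Callable[[str], str]

# Illustrative seed prompts taken from the examples above; not the full set.
REASONING_MODULES = [
    "Think step by step.",
    "Critically examine the problem.",
    "Break the problem down into sub-problems.",
]


def discover_structure(llm: LLM, task_examples: List[str],
                       modules: List[str] = REASONING_MODULES) -> str:
    """Phase 1: select, adapt, and implement; three model calls per task."""
    examples = "\n".join(task_examples)
    module_list = "\n".join(f"- {m}" for m in modules)

    selected = llm(
        "Select the reasoning modules that would help solve tasks like these.\n"
        f"Modules:\n{module_list}\nTask examples:\n{examples}"
    )
    adapted = llm(
        "Rephrase the selected modules so they are specific to the task.\n"
        f"Selected modules:\n{selected}\nTask examples:\n{examples}"
    )
    structure = llm(
        "Turn the adapted modules into a step-by-step plan to follow.\n"
        f"Adapted modules:\n{adapted}\nTask examples:\n{examples}"
    )
    return structure


def solve(llm: LLM, structure: str, instance: str) -> str:
    """Phase 2: one model call per instance, following the discovered plan."""
    return llm(
        "Follow the plan below to solve the task.\n"
        f"Plan:\n{structure}\nTask:\n{instance}"
    )


if __name__ == "__main__":
    calls = []

    def stub_llm(prompt: str) -> str:
        # Stand-in for a real model call; it only records the prompt.
        calls.append(prompt)
        return f"<response {len(calls)}>"

    plan = discover_structure(stub_llm, ["Which shape does this SVG path draw?"])
    answer = solve(stub_llm, plan, "<path d='M 0,0 L 1,0 L 1,1 Z'/>")
    print(len(calls), answer)
```

Replacing `stub_llm` with a real model client would be the only change needed to try the idea; the plan returned by `discover_structure` can be reused for every instance of the same task.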

According to the researchers, this approach mimics the human problem-solving process. It promises better results and is also computationally efficient, since the reasoning structure only needs to be generated once per task at the meta-level and can then be reused for every instance of that task.

One possible application for Self-Discover is solving a complex mathematical equation. Instead of trying to solve the equation directly, Self-Discover could first create a logical structure consisting of several steps, such as simplifying the equation, isolating variables, and finally solving the equation. The LLM then follows this structure to solve the problem step by step.
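To make the equation example concrete, the sketch below treats the reasoning structure as an ordered plan with empty slots that are filled in one step at a time. The plan keys, the function `solve_linear`, and the restriction to linear equations of the form a·x + b = c·x + d are assumptions chosen for illustration; in Self-Discover, a language model would produce and fill in the plan itself.

```python
from fractions import Fraction

# Hypothetical reasoning structure: an ordered plan whose slots start empty.
PLAN = ["simplify", "isolate_variable", "solve"]


def solve_linear(a: int, b: int, c: int, d: int) -> dict:
    """Solve a*x + b = c*x + d by filling in each step of PLAN in order."""
    if a == c:
        raise ValueError("no unique solution when both x coefficients are equal")

    filled = dict.fromkeys(PLAN)

    # Step 1: collect x terms on the left and constants on the right.
    coeff, const = a - c, d - b
    filled["simplify"] = f"{coeff}x = {const}"

    # Step 2: isolate x by dividing both sides by its coefficient.
    filled["isolate_variable"] = f"x = {const}/{coeff}"

    # Step 3: evaluate the exact result.
    filled["solve"] = Fraction(const, coeff)
    return filled


if __name__ == "__main__":
    # 5x + 3 = 2x + 18
    for step, value in solve_linear(5, 3, 2, 18).items():
        print(f"{step}: {value}")
```

Each intermediate step stays visible in the result, so a wrong answer can be traced back to the step that produced it.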

Self-Discover delivers significant improvements over Chain of Thought

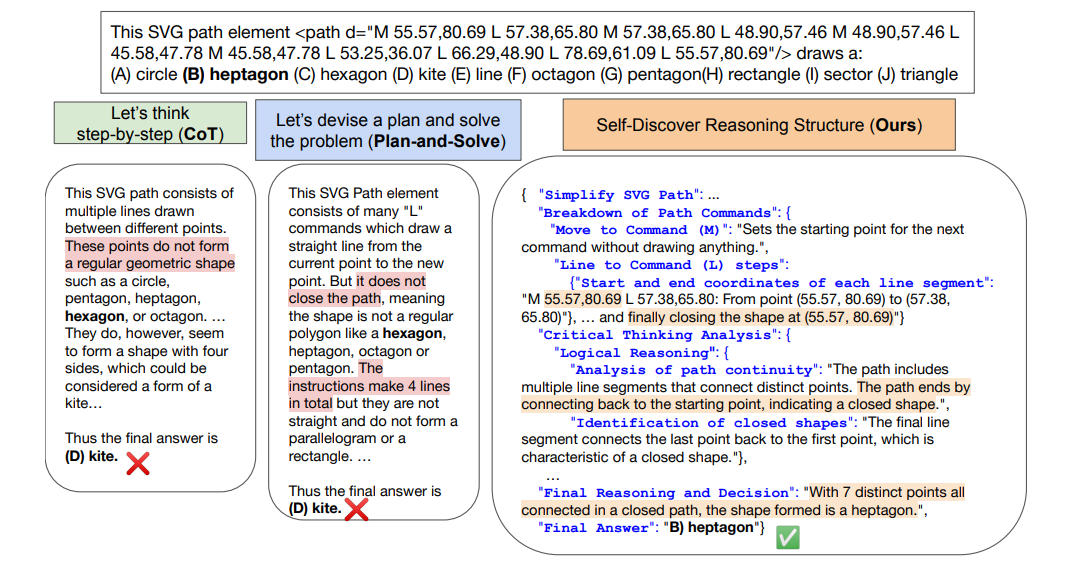

An example from the researchers compares Self-Discover with Chain of Thought (CoT) and Plan-and-Solve, where the language model is asked to infer the correct geometric shape from the SVG path of a vector file.

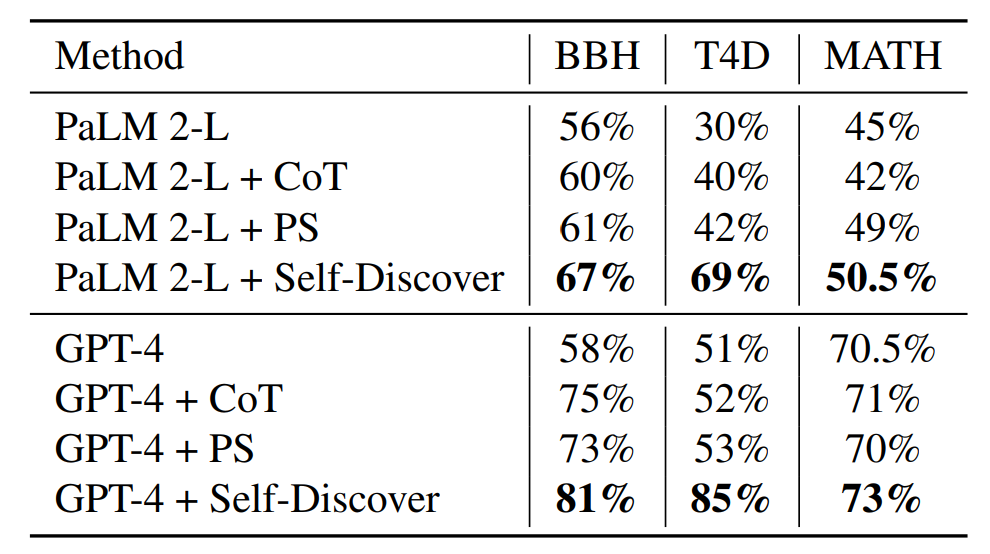

The researchers tested Self-Discover with OpenAI's GPT-4 Turbo and GPT-3.5 Turbo, Meta's Llama-2-70B, and Google's PaLM 2. In 21 out of 25 tasks, Self-Discover outperformed the established CoT method, by up to 42 percent. The overhead is small: Self-Discover required only three additional inference steps at the task level, not for every individual instance.

Across the three reasoning benchmarks used (BIG-Bench Hard, Thinking for Doing, and MATH), the framework improved LLM performance, in some cases significantly, regardless of the language model. On each benchmark, Self-Discover outperformed the widely used Chain-of-Thought (CoT) and Plan-and-Solve (PS) prompts. This suggests that the Self-Discover technique is broadly applicable across models and task types.

Self-Discover is said to outperform inference-intensive methods such as CoT Self-Consistency or Majority Voting by more than 20 percent, with 10 to 40 times less computation required for inference.
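A rough count of model calls shows where the savings come from. The sketch below assumes that Self-Discover spends three calls per task on discovering the structure plus one call per instance, and that a sampling-based method such as self-consistency spends a fixed number of calls on every instance. The instance count and sample counts are illustrative assumptions, not the settings used in the paper, and call counts ignore differences in prompt and output length.

```python
def self_discover_calls(n_instances: int, discovery_calls: int = 3) -> int:
    """Structure discovery once per task, then one call per instance."""
    return discovery_calls + n_instances


def self_consistency_calls(n_instances: int, samples_per_instance: int) -> int:
    """Several sampled reasoning paths per instance, followed by a majority vote."""
    return n_instances * samples_per_instance


def call_ratio(n_instances: int, samples_per_instance: int) -> float:
    """How many times more calls the sampling-based method needs."""
    return (self_consistency_calls(n_instances, samples_per_instance)
            / self_discover_calls(n_instances))


if __name__ == "__main__":
    # Hypothetical task with 100 instances and 10 or 40 samples per instance.
    for samples in (10, 40):
        print(samples, round(call_ratio(100, samples), 1))
```

Because the three discovery calls are amortized over all instances of a task, the ratio approaches the number of samples per instance as the task grows.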

Researchers from Stanford University and OpenAI recently introduced meta-prompting, another prompting technique for improving the reasoning performance of large language models.

Similar to Self-Discover, meta-prompting breaks a complex task into smaller, more manageable parts. These parts are handled by specialized "expert" instances of the same language model, whose answers are then combined into a final solution. While this can improve performance, switching back and forth between multiple experts increases the computational cost compared to traditional prompting.
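The extra cost of the expert approach is easy to see in a simplified sketch. The code below is a single-round approximation under stated assumptions: the `LLM` callable, the prompt wording, and the function `meta_prompt` are hypothetical, and the published method may involve several rounds of back-and-forth rather than one pass.

```python
from typing import Callable

# Assumed interface: any function that maps a prompt string to a completion string.
LLM = Callable[[str], str]


def meta_prompt(llm: LLM, task: str) -> str:
    """One decomposition call, one call per expert, and one call to combine."""
    # Coordinator call: ask for one sub-task per line.
    reply = llm(f"Split this task into sub-tasks, one per line:\n{task}")
    subtasks = [line.strip() for line in reply.splitlines() if line.strip()]

    # Each sub-task goes to a fresh "expert" instance of the same model.
    expert_answers = [
        llm(f"You are an expert. Solve only this sub-task:\n{sub}")
        for sub in subtasks
    ]

    # Final call: combine the expert answers into one solution.
    combined = "\n".join(expert_answers)
    return llm(
        f"Combine the expert answers into a final solution for: {task}\n{combined}"
    )
```

With n sub-tasks, this sketch makes n + 2 model calls for every single instance, whereas a discovered plan in Self-Discover is reused across all instances of a task.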