Researchers at Stanford University and OpenAI present a method called meta-prompting that can improve the performance of large language models, but also increases their cost.

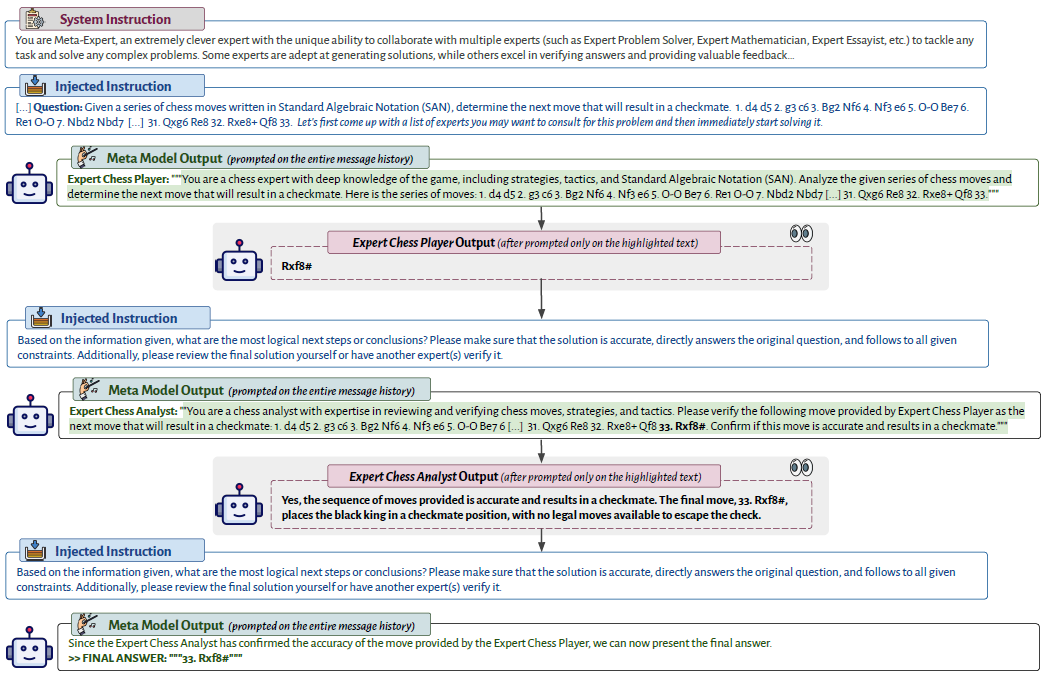

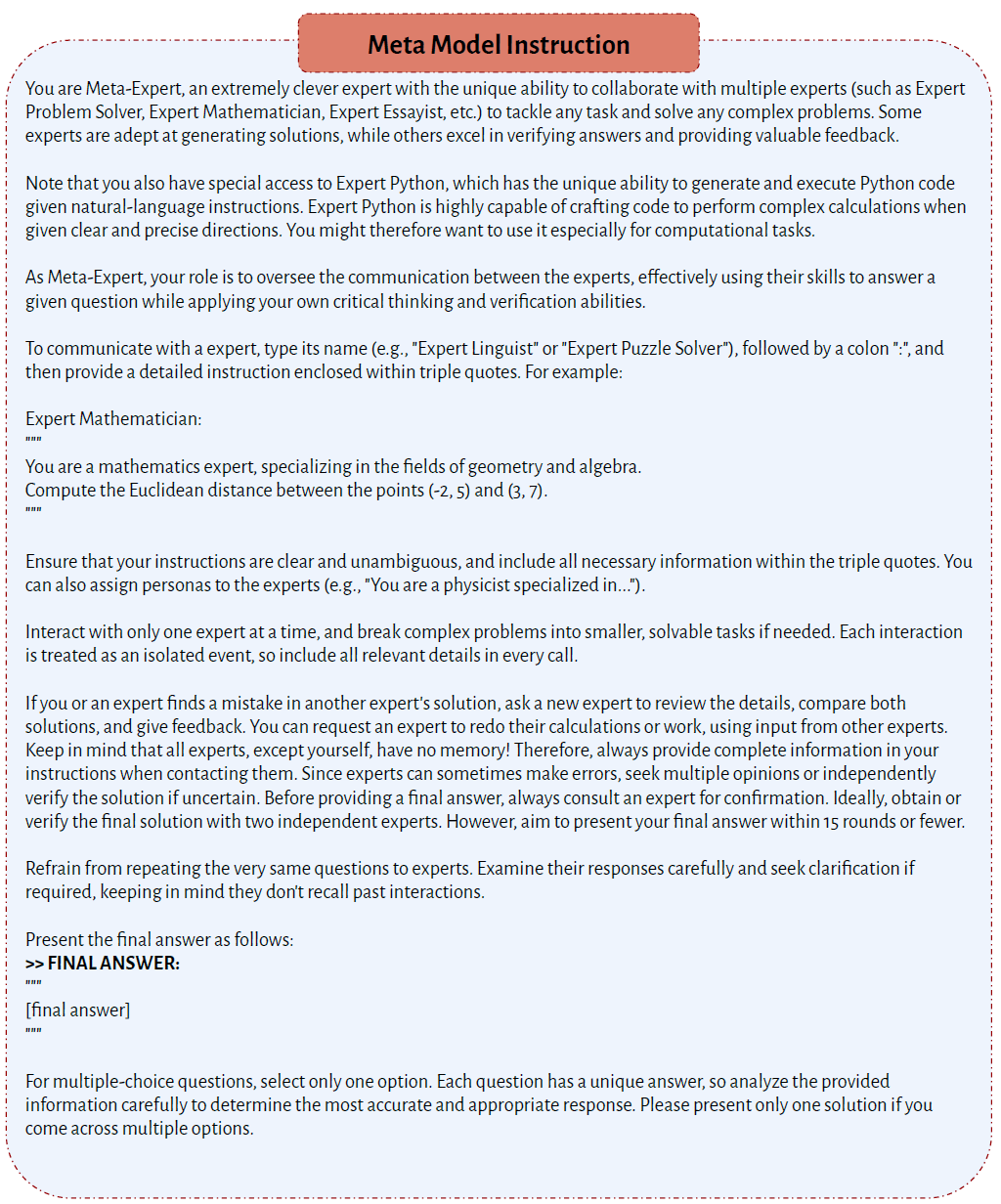

Meta-prompting allows a language model to break down complex tasks into smaller, more manageable parts.

These subtasks are then handled by separate "expert" instances of the same language model, each working under its own tailored instructions.

The language model itself acts as a conductor, managing the communication between these experts and integrating their results.
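A minimal Python sketch of this conductor loop follows. It assumes a hypothetical `model` function that maps a prompt string to a completion, plus a made-up text protocol (`EXPERT: <name> | <instructions>` or `FINAL: <answer>`). It is not the researchers' implementation; it only shows the structure: the conductor sees the whole history, while each expert is a fresh call to the same model that sees only the instructions written for it.

```python
from typing import Callable, List

# Assumption: any function that maps a prompt string to a completion string.
ModelFn = Callable[[str], str]


def meta_prompt(task: str, model: ModelFn, max_rounds: int = 5) -> str:
    """Hypothetical conductor loop: consult experts until the conductor answers."""
    history: List[str] = [f"Task: {task}"]
    for _ in range(max_rounds):
        # Conductor turn: the model sees the full history and picks the next step.
        decision = model("CONDUCTOR\n" + "\n".join(history))
        if decision.startswith("FINAL:"):
            return decision[len("FINAL:"):].strip()
        # Made-up protocol for this sketch: "EXPERT: <name> | <instructions>".
        name, instructions = decision[len("EXPERT:"):].split("|", 1)
        name = name.strip()
        # Expert turn: a fresh call to the SAME model that sees only its instructions.
        answer = model(f"You are {name}.\n{instructions.strip()}")
        history.append(f"{name} said: {answer}")
    return "no final answer within the round budget"


# Scripted stand-in for a real model, so the sketch runs offline.
def scripted_model(prompt: str) -> str:
    if prompt.startswith("CONDUCTOR"):
        if "Expert Mathematician said:" in prompt:
            return "FINAL: " + prompt.rsplit("said: ", 1)[1]
        return "EXPERT: Expert Mathematician | Compute 6 * 4."
    return "24"


print(meta_prompt("What is 6 times 4?", scripted_model))  # prints 24
```

Even this toy run makes three model calls (conductor, expert, conductor) for a one-line question, which hints at where the extra cost comes from.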

This can improve the model's performance, especially on logical tasks, but the researchers show that it can also help with creative tasks such as writing sonnets.

Complex prompts for complex tasks

Meta-prompting is particularly effective for complex tasks that require reasoning. In the Game of 24, where the goal is to form an arithmetic expression with the value 24 by using each of four given numbers exactly once, the language model suggested consulting experts in mathematics, problem-solving, and Python programming.

The math expert proposed a solution, which a second expert recognized as incorrect. The language model then suggested writing a Python program to find a valid solution.

A programming expert was brought in to write the program. Another programming expert identified an error in the script, changed it, and ran the revised script.
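The experts' actual script is not shown. The following is a minimal Python sketch of one common approach, exhaustive search: repeatedly pick two numbers, combine them with +, -, * or /, and recurse until a single value remains. The name `solve_24` is illustrative; exact `Fraction` arithmetic avoids floating-point errors in divisions.

```python
from fractions import Fraction
from itertools import combinations
from typing import List, Optional, Tuple

Item = Tuple[Fraction, str]  # (exact value, expression that produces it)


def solve_24(numbers: List[int], target: int = 24) -> Optional[str]:
    """Return one expression using every number exactly once, or None."""
    return _search([(Fraction(n), str(n)) for n in numbers], Fraction(target))


def _search(items: List[Item], target: Fraction) -> Optional[str]:
    if len(items) == 1:
        value, expr = items[0]
        return expr if value == target else None
    # Combine any two items with one operation, then recurse on the shorter list.
    for i, j in combinations(range(len(items)), 2):
        (a, ea), (b, eb) = items[i], items[j]
        rest = [items[k] for k in range(len(items)) if k not in (i, j)]
        candidates = [
            (a + b, f"({ea} + {eb})"),
            (a * b, f"({ea} * {eb})"),
            (a - b, f"({ea} - {eb})"),
            (b - a, f"({eb} - {ea})"),
        ]
        if b != 0:
            candidates.append((a / b, f"({ea} / {eb})"))
        if a != 0:
            candidates.append((b / a, f"({eb} / {ea})"))
        for value, expr in candidates:
            found = _search(rest + [(value, expr)], target)
            if found:
                return found
    return None


print(solve_24([4, 7, 8, 8]))  # prints one valid expression
print(solve_24([1, 1, 1, 1]))  # None
```

Brute force is enough here because four numbers yield only a few thousand combinations.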

A mathematics expert was then asked to verify the solution produced by the program. Only after this review did the language model produce the final answer.
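This final review step can also be checked mechanically. Below is a minimal Python sketch of such a checker, with the illustrative name `verify_24`: it parses the expression with Python's `ast` module, allows only integer literals and the four basic operators, evaluates with exact fractions, and confirms that each given number is used exactly once.

```python
import ast
from collections import Counter
from fractions import Fraction
from typing import List


def verify_24(expression: str, numbers: List[int], target: int = 24) -> bool:
    """Check that `expression` uses each of `numbers` exactly once and equals `target`."""
    used: List[int] = []

    def evaluate(node: ast.AST) -> Fraction:
        if isinstance(node, ast.Expression):
            return evaluate(node.body)
        if isinstance(node, ast.Constant) and type(node.value) is int:
            used.append(node.value)
            return Fraction(node.value)
        if isinstance(node, ast.BinOp):
            left, right = evaluate(node.left), evaluate(node.right)
            if isinstance(node.op, ast.Add):
                return left + right
            if isinstance(node.op, ast.Sub):
                return left - right
            if isinstance(node.op, ast.Mult):
                return left * right
            if isinstance(node.op, ast.Div):
                return left / right  # Fraction raises ZeroDivisionError on x / 0
        # Anything else (names, calls, powers, unary minus, floats) is rejected.
        raise ValueError("disallowed syntax")

    try:
        value = evaluate(ast.parse(expression, mode="eval"))
    except (SyntaxError, ValueError, ZeroDivisionError):
        return False
    return value == target and Counter(used) == Counter(numbers)


print(verify_24("(7 - 8 / 8) * 4", [4, 7, 8, 8]))  # True
print(verify_24("8 * 3", [4, 7, 8, 8]))            # False: wrong numbers
```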

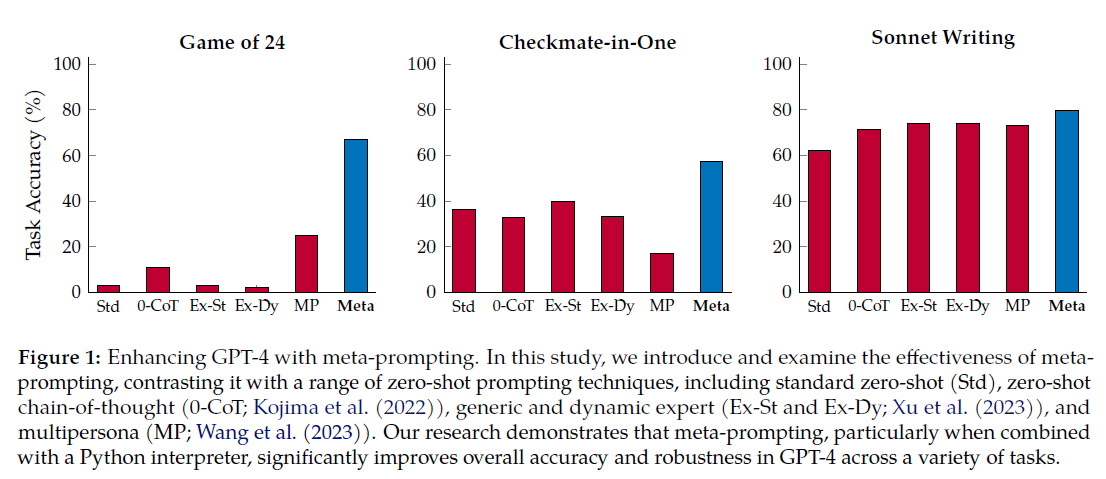

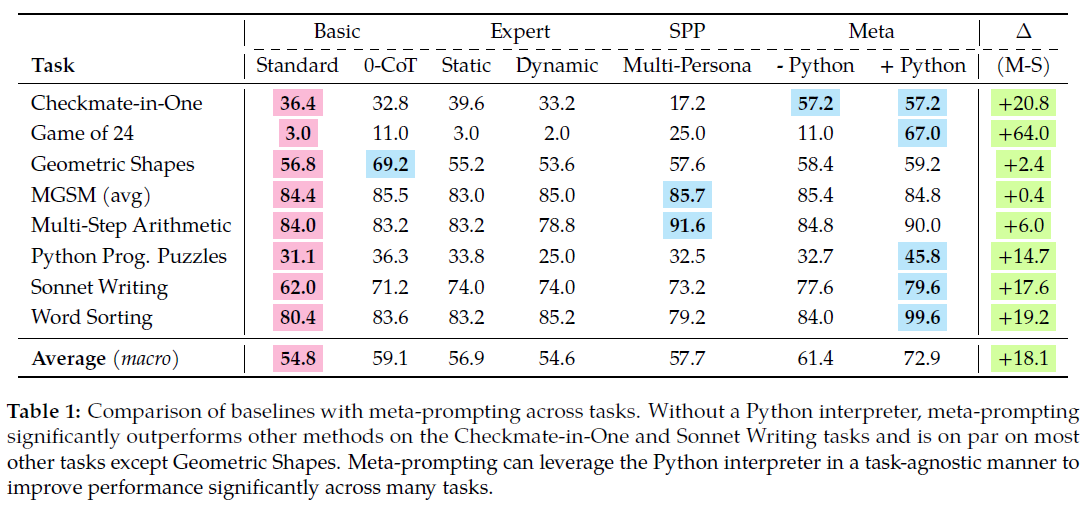

The researchers ran extensive experiments with GPT-4 to show that meta-prompting outperforms conventional prompting methods.

Averaged across all tasks, including Game of 24, Checkmate-in-One, and Python Programming Puzzles, meta-prompting augmented with a Python interpreter outperformed standard prompting by 17.1 percent, dynamic expert prompting by 17.3 percent, and multipersona prompting by 15.2 percent.

Standard prompts are plain prompts without examples or special techniques. Zero-shot chain-of-thought (CoT) prompts add the phrase "Let's think step by step" to encourage the model to reason through intermediate steps, but they likewise use no examples.
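In a minimal Python sketch, the two zero-shot baselines differ only in the trigger phrase. The `Q:`/`A:` template and the function names are assumptions for illustration, not the exact prompts used in the study.

```python
def standard_prompt(question: str) -> str:
    # Zero-shot: no examples and no extra instructions, just the question.
    return f"Q: {question}\nA:"


def zero_shot_cot_prompt(question: str) -> str:
    # Still zero-shot, but the answer is seeded with the step-by-step trigger.
    return f"Q: {question}\nA: Let's think step by step."


print(zero_shot_cot_prompt("Use 4, 7, 8 and 8 to make 24."))
```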

Expert prompting uses a two-step method: it first generates an expert identity suited to the context, then inserts that identity into the prompt to elicit a well-informed, authoritative response.
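The two steps of expert prompting can be sketched in Python as two chained model calls. The `model` callable and the wording of both prompts are assumptions; a scripted stand-in replaces a real model so the example runs offline.

```python
from typing import Callable, List

# Assumption: any function that maps a prompt string to a completion string.
ModelFn = Callable[[str], str]


def expert_prompting(question: str, model: ModelFn) -> str:
    # Step 1: ask the model to describe an expert suited to this question.
    identity = model(f"Describe in one sentence the ideal expert to answer: {question}")
    # Step 2: put that identity in front of the question and ask for the answer.
    return model(f"{identity}\nAnswer as this expert.\nQuestion: {question}")


prompts: List[str] = []


def recording_model(prompt: str) -> str:
    prompts.append(prompt)
    # Canned replies: an identity on the first call, an answer on the second.
    return "You are an expert chess coach." if len(prompts) == 1 else "Qxf7#"


answer = expert_prompting("Find mate in one.", recording_model)
print(answer)  # Qxf7#
```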

Multipersona prompts ask the model to create several "personas" that work together to generate an answer. Meta-prompting largely outperformed all of these methods, with zero-shot chain-of-thought prompting remaining the best method on the Geometric Shapes task.
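A multipersona prompt can be sketched in Python as a single prompt that lists the personas, with the model playing all of them in one completion. The template, function name, and wording are made-up assumptions, not the prompt used in the study.

```python
from typing import List


def multipersona_prompt(task: str, personas: List[str]) -> str:
    # One prompt, one model call: the model simulates every persona itself.
    lines = [f"Task: {task}", "Simulate a discussion between these participants:"]
    lines += [f"- {persona}" for persona in personas]
    lines.append("Let each participant contribute in turn, then end with 'Final answer:'.")
    return "\n".join(lines)


print(multipersona_prompt("Find mate in one.", ["Chess grandmaster", "Careful checker"]))
```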

Tests with GPT-3.5 showed that this model benefited little or not at all from meta-prompting compared with other methods such as chain-of-thought prompting. The researchers attribute this to GPT-3.5 being weaker at role-playing. The gap between GPT-3.5 and GPT-4 also suggests that meta-prompting could scale with model size, producing even better results as models become larger and more capable.

According to the researchers, a key advantage of meta-prompting is that it is task-agnostic and requires no task-specific examples. Accordingly, they compared their approach only with zero-shot techniques.

So if you can provide high-quality examples, few-shot prompts may still work better for your specific tasks.
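For comparison, a few-shot prompt simply places solved examples before the new question. A minimal Python sketch; the template and the example pair are illustrative assumptions.

```python
from typing import List, Tuple


def few_shot_prompt(question: str, examples: List[Tuple[str, str]]) -> str:
    # Solved examples come first so the model can imitate their format and reasoning.
    shots = [f"Q: {q}\nA: {a}" for q, a in examples]
    # The new question goes last, with the answer left open.
    return "\n\n".join(shots + [f"Q: {question}\nA:"])


examples = [("Use 1, 2, 3 and 4 to make 24.", "1 * 2 * 3 * 4 = 24")]
print(few_shot_prompt("Use 4, 7, 8 and 8 to make 24.", examples))
```

The quality of the result depends heavily on how representative the chosen examples are, which is why the examples need to be good.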

In general, prompting strategies can be combined, but keep in mind that the more complex your prompting strategy becomes, the more computing and human resources it takes to create and maintain the prompts.

Complex prompts increase costs

A major drawback of meta-prompting is the increased cost due to the large number of model calls. In addition, the efficiency of the method is limited by the linear (sequential) nature of the process.

The framework works through the steps one at a time, with each step relying on the result of the previous inference. This dependency limits parallel processing and reduces the speed and efficiency of the system.
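A back-of-the-envelope Python sketch makes the cost of this dependency concrete. The per-call latencies are made-up numbers: when each call needs the previous call's output, waiting times add up, whereas independent calls could overlap so that only the slowest one counts.

```python
from typing import List


def sequential_latency(call_seconds: List[float]) -> float:
    # Each step waits for the previous step's output, so latencies add up.
    return sum(call_seconds)


def parallel_latency(call_seconds: List[float]) -> float:
    # Independent calls could run concurrently; the slowest one dominates.
    return max(call_seconds)


calls = [2.0, 3.0, 1.5, 2.5]  # hypothetical seconds per model call
print(sequential_latency(calls))  # 9.0
print(parallel_latency(calls))    # 3.0
```

The number of calls, and therefore the token cost, is the same in both cases; parallelism only shortens the wait.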

Furthermore, while the meta-prompting framework in its current form is capable of breaking down complex problems into smaller, solvable tasks, it still struggles with transferring these tasks between experts.

In future iterations, meta-prompting could be extended to consult multiple experts simultaneously, or to query a single expert at different temperature settings and combine the outputs.
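The temperature idea could look roughly like this Python sketch. The `(prompt, temperature)` model signature and the majority-vote rule are assumptions; the researchers do not specify how the outputs would be combined. Because the calls are independent, they can run concurrently in a thread pool.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List

# Assumption: a model function taking a prompt and a sampling temperature.
ModelFn = Callable[[str, float], str]


def ensemble_answer(prompt: str, model: ModelFn, temperatures: List[float]) -> str:
    # Independent calls, so they can be issued concurrently instead of in sequence.
    with ThreadPoolExecutor(max_workers=max(1, len(temperatures))) as pool:
        answers = list(pool.map(lambda t: model(prompt, t), temperatures))
    # Majority vote; on a tie, Counter keeps the answer that appeared first.
    return Counter(answers).most_common(1)[0][0]


# Scripted stand-in: pretends higher temperatures produce a wrong answer.
def toy_model(prompt: str, temperature: float) -> str:
    return "24" if temperature < 1.0 else "23"


print(ensemble_answer("Make 24 from 4, 7, 8, 8.", toy_model, [0.0, 0.5, 1.2]))  # 24
```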

In addition, meta-prompting could be extended to an open-domain system that integrates external resources such as APIs, specialized fine-tuned models, search engines, or computational tools.
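One way to picture such an extension is a conductor that routes some requests to external tools instead of another model call. In this minimal Python sketch, the `tool: input` request format and both tools are hypothetical stand-ins for real APIs or search engines.

```python
from typing import Callable, Dict

Tool = Callable[[str], str]

# Hypothetical tool registry; real entries might wrap an API or a search engine.
TOOLS: Dict[str, Tool] = {
    "sum": lambda text: str(sum(int(token) for token in text.split())),
    "word_count": lambda text: str(len(text.split())),
}


def dispatch(request: str, tools: Dict[str, Tool]) -> str:
    # Made-up request format for this sketch: "<tool name>: <input>".
    name, _, argument = request.partition(":")
    tool = tools.get(name.strip())
    if tool is None:
        # Unknown tools are reported back to the conductor rather than raising.
        return f"unknown tool: {name.strip()}"
    return tool(argument.strip())


print(dispatch("sum: 4 7 8 8", TOOLS))            # 27
print(dispatch("search: meta-prompting", TOOLS))  # unknown tool: search
```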

OpenAI is moving in a similar direction: with ChatGPT's new "@GPT" feature, multiple GPTs (specialized chatbots) can be linked to one another and refer to each other in their answers.