Researchers at the Technical University of Munich have developed DiffusionAvatars, a method for creating high-quality 3D avatars with realistic facial expressions.

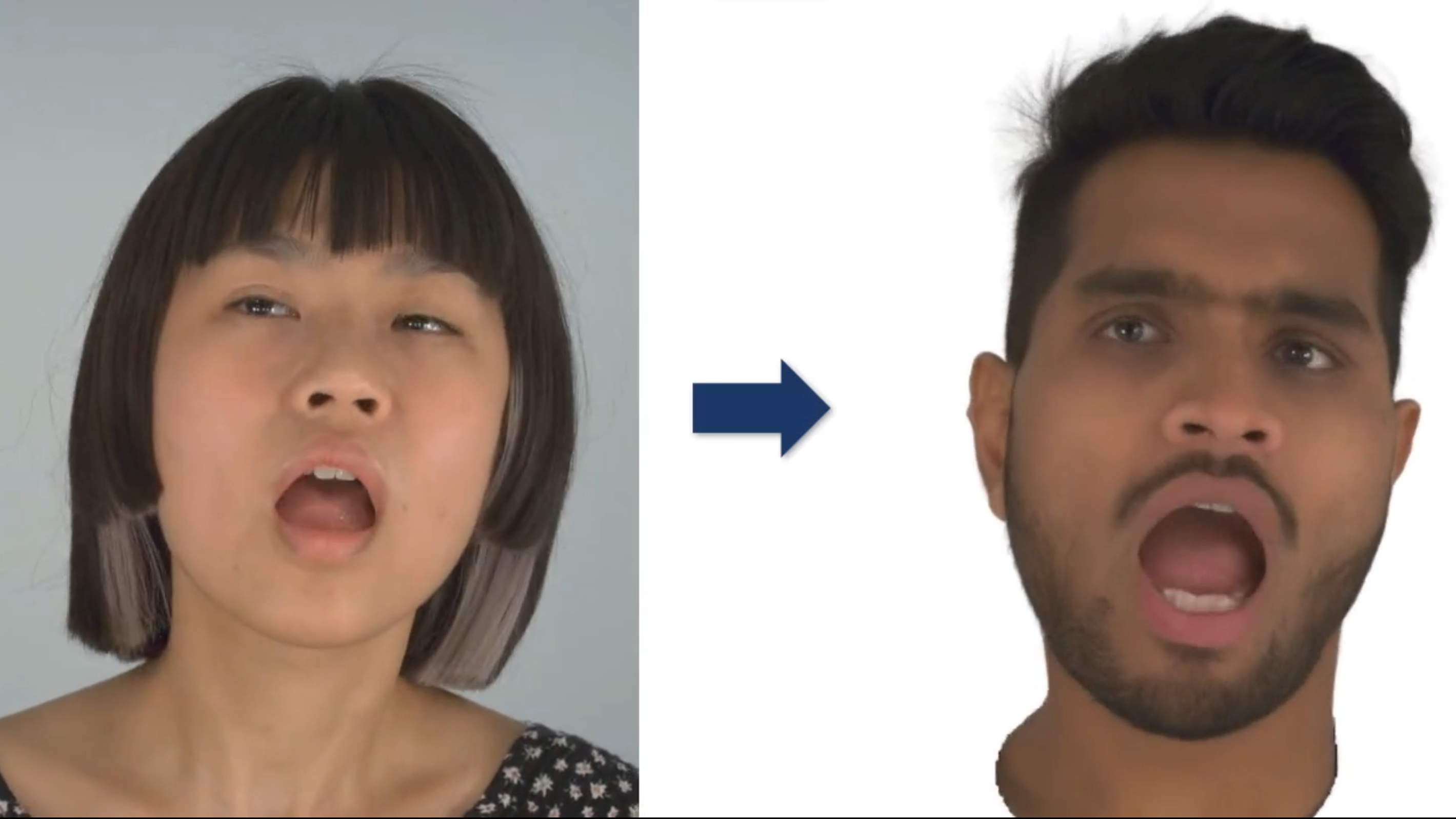

The system was trained using RGB videos and 3D meshes of human heads. After training, it can animate avatars either by transferring the animations from input videos or by generating facial expressions through simple controls.

DiffusionAvatars combines the image synthesis capabilities of 2D diffusion models with the 3D consistency of neural head models. For the latter, it uses so-called "Neural Parametric Head Models" (NPHM) to predict the geometry of the human head. According to the team, these models provide better geometry data than conventional 3D neural models.

DiffusionAvatars has applications in XR and more

According to the team, DiffusionAvatars generates temporally consistent and visually appealing videos for new poses and facial expressions of a person, outperforming existing approaches.

The technology could be used in several areas in the future, including VR/AR applications, immersive videoconferencing, games, film animation, language learning, and virtual assistants. Companies such as Meta and Apple are also researching AI-generated realistic avatars.

However, the technology has its limits: DiffusionAvatars currently bakes lighting into the generated images and offers no control over illumination. This is a problem when avatars are placed in realistic environments, where their lighting should match the scene. In addition, the current architecture is still computationally intensive and therefore not yet suitable for real-time applications.

More information can be found on the DiffusionAvatars project page.