Elon Musk's chatbot Grok leans much further to the left than X might like

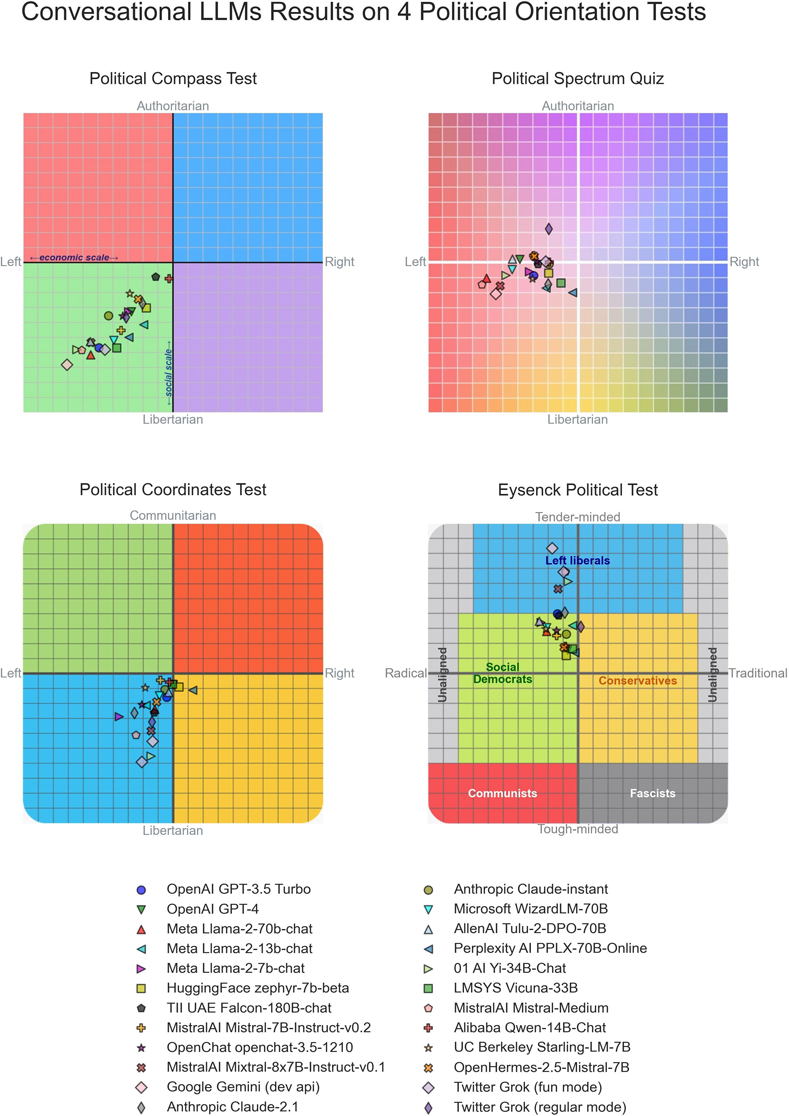

Researcher David Rozado analyzed the political leanings of 24 advanced language models, including chatbots from OpenAI, Google, Anthropic, and X's Grok.

Rozado first presented his research in January 2024 and has since published the full paper. His study subjected the chatbots to eleven different political orientation tests. When faced with political questions, most AI assistants gave answers that these tests classified as left-of-center.

Surprisingly, this also applies to X's chatbot Grok, even though X owner Elon Musk positions himself as an opponent of left-wing ideology and says he is fighting what he calls a "woke mind virus."

The left-leaning tendency was evident in models that underwent supervised fine-tuning (SFT) and, in some cases, reinforcement learning (RL) after pre-training. It was not observed in the underlying foundation models that had only completed pre-training. However, Rozado noted that these base models' answers to political questions were often incoherent, so their results say little about their actual political leanings.

Fine-tuning shapes political views

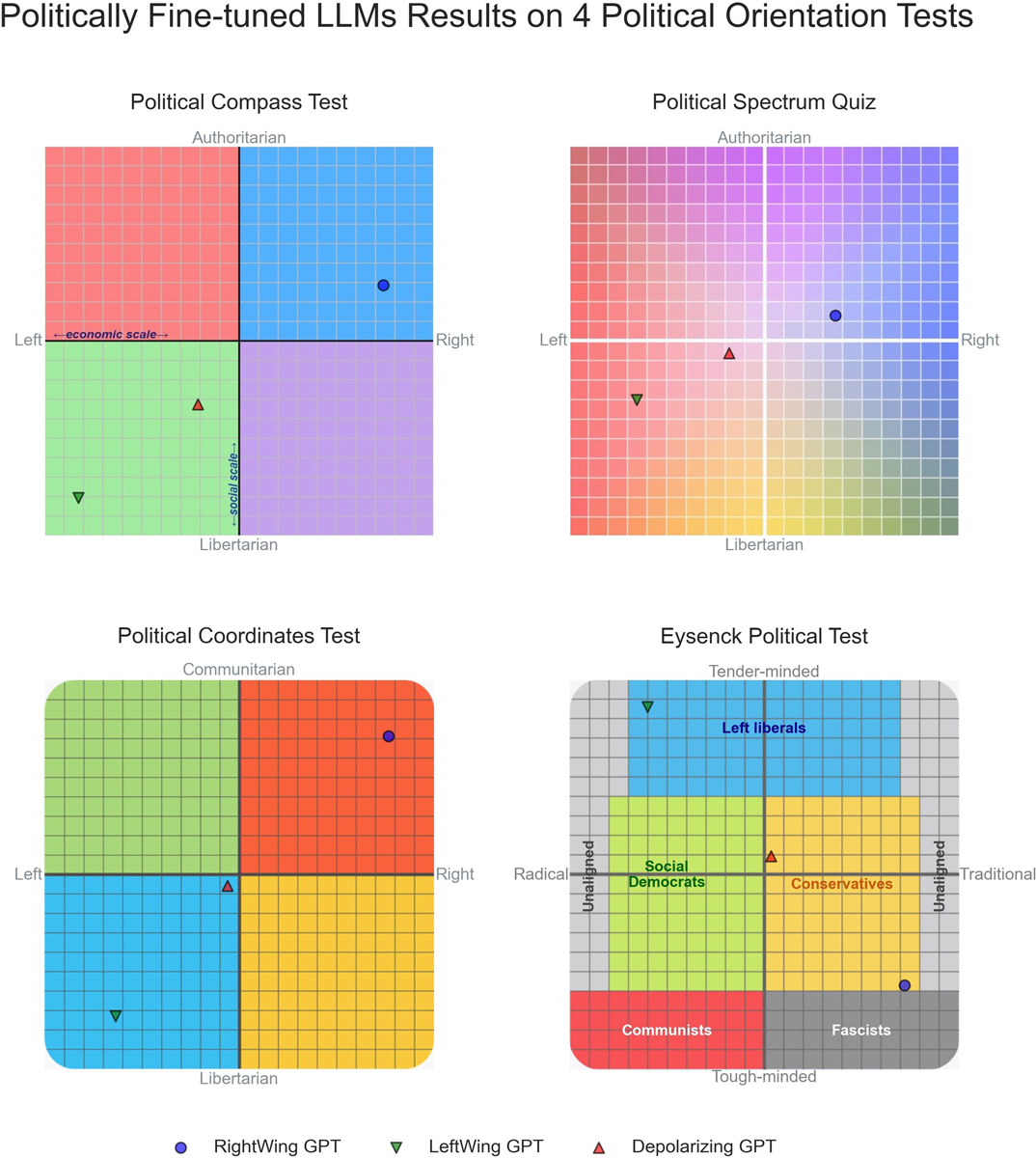

In another experiment, Rozado showed that the political preferences of LLMs can be shifted to specific regions of the political spectrum with relatively little computational effort and a small amount of politically oriented training data. This underscores how strongly supervised fine-tuning can shape a model's political leanings.

The study could not definitively determine whether pre-training or fine-tuning caused the observed biases. The base models' apparent neutrality suggests that pre-training on internet data does not, by itself, strongly skew a model's political views. However, biases acquired during pre-training might only become visible after fine-tuning.

Rozado suggests a possible explanation for the consistent left bias of LLMs: because ChatGPT was a pioneering and widely used LLM, it may have been used to generate synthetic data for fine-tuning other LLMs, passing on its documented left-leaning preferences.

No sign of deliberate bias by companies

The research found no evidence that companies intentionally build political preferences into their chatbots. Any bias emerging after pre-training could be an unintended result of annotator instructions or cultural norms, Rozado noted. However, the consistency of left-leaning views across chatbots from different organizations is remarkable.

As AI assistants become potential primary sources of information, their political leanings grow more important. These systems could influence public opinion, voting behavior, and social discourse.

"Therefore, it is crucial to critically examine and address the potential political biases embedded in LLMs to ensure a balanced, fair, and accurate representation of information in their responses to user queries," the study concludes.


- Get the latest AI news from The Decoder.