Ex-OpenAI CTO Mira Murati introduces Tinker, an API for fine-tuning open-weight LLMs

Thinking Machines, the startup founded by former OpenAI CTO Mira Murati, has introduced its first product: Tinker, an API for training language models.

Tinker is meant to make it easier for teams to build on open-weight models, even if they lack access to large-scale compute. The service targets researchers and developers who want to train or fine-tune open-weight models without managing complex infrastructure themselves.

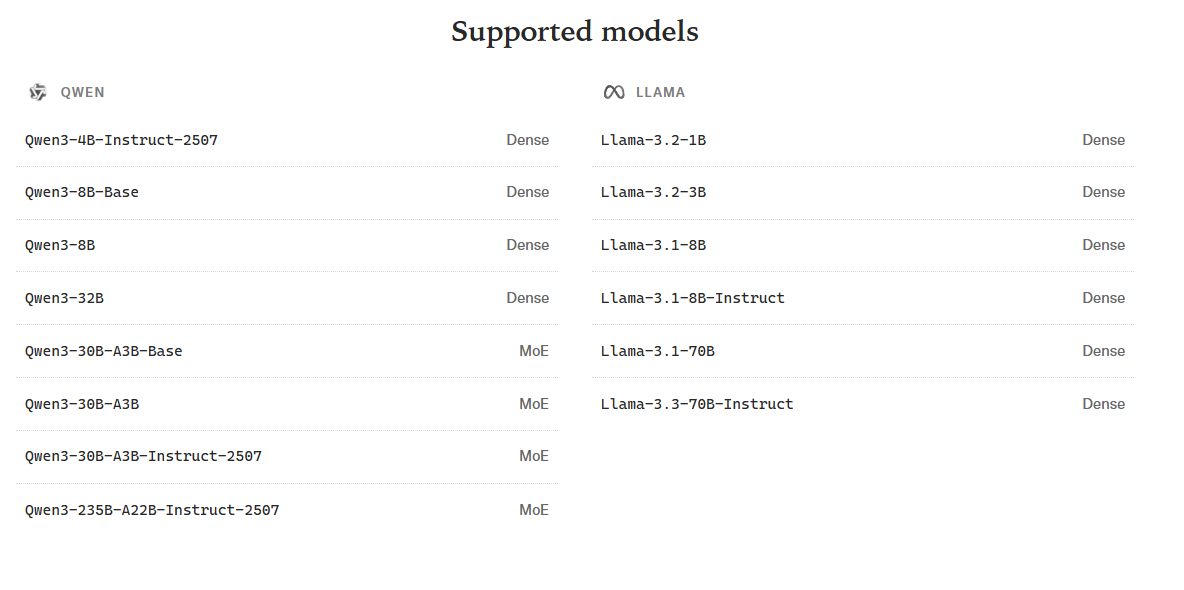

According to the company, the goal is to enable "more people to do research on cutting-edge models and customize them to their needs." At launch, Tinker supports several open-weight models from Meta (Llama) and Alibaba (Qwen), including large models such as Qwen-235B-A22B. Switching between models takes only a change to a single string in the code.

Tinker runs as a managed service on the company’s own compute clusters. Training jobs can start immediately, while the platform handles scheduling, resource management, and fault tolerance. Tinker uses LoRA (Low-Rank Adaptation), which lets multiple fine-tuning runs execute in parallel on shared hardware. Pooling compute across training jobs in this way should help keep costs down, the company says.
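The article does not say how Tinker implements LoRA internally, and the snippet below is not Tinker's API. It is a minimal, dependency-free sketch of the general LoRA idea, assuming a single linear layer: one frozen base weight matrix W is shared by every job, and each job trains only a small pair of matrices A (r x d_in) and B (d_out x r), so its output is W x + (alpha / r) · B (A x). The names SharedLinear, LoRAAdapter, and the job IDs are hypothetical, chosen for illustration.

```python
# Minimal, hypothetical sketch of LoRA-style multi-tenant fine-tuning.
# Not Tinker's API: it only illustrates how many jobs can share one frozen base layer.
import random

def matvec(M, x):
    """Multiply matrix M (list of rows) by vector x."""
    return [sum(m * v for m, v in zip(row, x)) for row in M]

def zeros(rows, cols):
    return [[0.0] * cols for _ in range(rows)]

def rand_matrix(rows, cols, rng, scale=0.02):
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]

class LoRAAdapter:
    """Per-job trainable update delta_W = (alpha / r) * B @ A."""
    def __init__(self, d_out, d_in, r, alpha, rng):
        self.A = rand_matrix(r, d_in, rng)  # r x d_in, small random init
        self.B = zeros(d_out, r)            # d_out x r, zero init: delta_W starts at 0
        self.scale = alpha / r

    def num_params(self):
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])

class SharedLinear:
    """One frozen weight matrix W reused by every fine-tuning job."""
    def __init__(self, W):
        self.W = W
        self.adapters = {}  # job_id -> LoRAAdapter

    def add_job(self, job_id, r, alpha, rng):
        self.adapters[job_id] = LoRAAdapter(len(self.W), len(self.W[0]), r, alpha, rng)

    def forward(self, job_id, x):
        base = matvec(self.W, x)                  # shared, frozen computation
        ad = self.adapters[job_id]
        low_rank = matvec(ad.B, matvec(ad.A, x))  # B (A x); full delta_W is never built
        return [b + ad.scale * lr for b, lr in zip(base, low_rank)]

rng = random.Random(0)
d = 256
W = rand_matrix(d, d, rng)
layer = SharedLinear(W)
layer.add_job("job-a", r=8, alpha=16, rng=rng)
layer.add_job("job-b", r=8, alpha=16, rng=rng)
x = [1.0] * d
print(d * d, layer.adapters["job-a"].num_params())  # → 65536 4096
```

With d = 256 and rank r = 8, each adapter holds 4,096 trainable values versus 65,536 in the full matrix, so many jobs can keep their own adapters while the expensive base weights are stored once. B starts at zero, as in the original LoRA paper, so a fresh adapter leaves the base model's output unchanged.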

Beta and Pricing

Tinker is currently in closed beta, and interested users can join a waitlist. The service is free during the beta; usage-based pricing is set to follow in the coming weeks.

Developers can also use the Tinker Cookbook, an open library of standard post-training methods that run on the Tinker API. The cookbook is intended to help avoid common fine-tuning errors.

Murati left OpenAI in fall 2024 after reports of internal tensions. By launching a fine-tuning API, she signals that she doesn't see OpenAI's models as unbeatable or expect superintelligence anytime soon.

Along with other former OpenAI colleagues like co-founder John Schulman and researchers Barret Zoph and Luke Metz, Murati appears to believe that fine-tuned open-weight models offer more flexibility and economic value right now than closed models like GPT-5. Otherwise, it would be hard to explain the focus on building specialized fine-tuning tools.

