Foundation Model Transparency Index shows lack of transparency among AI companies

The Center for Research on Foundation Models (CRFM) at Stanford HAI has developed the Foundation Model Transparency Index (FMTI), a rating system for corporate transparency in the area of AI foundation models.

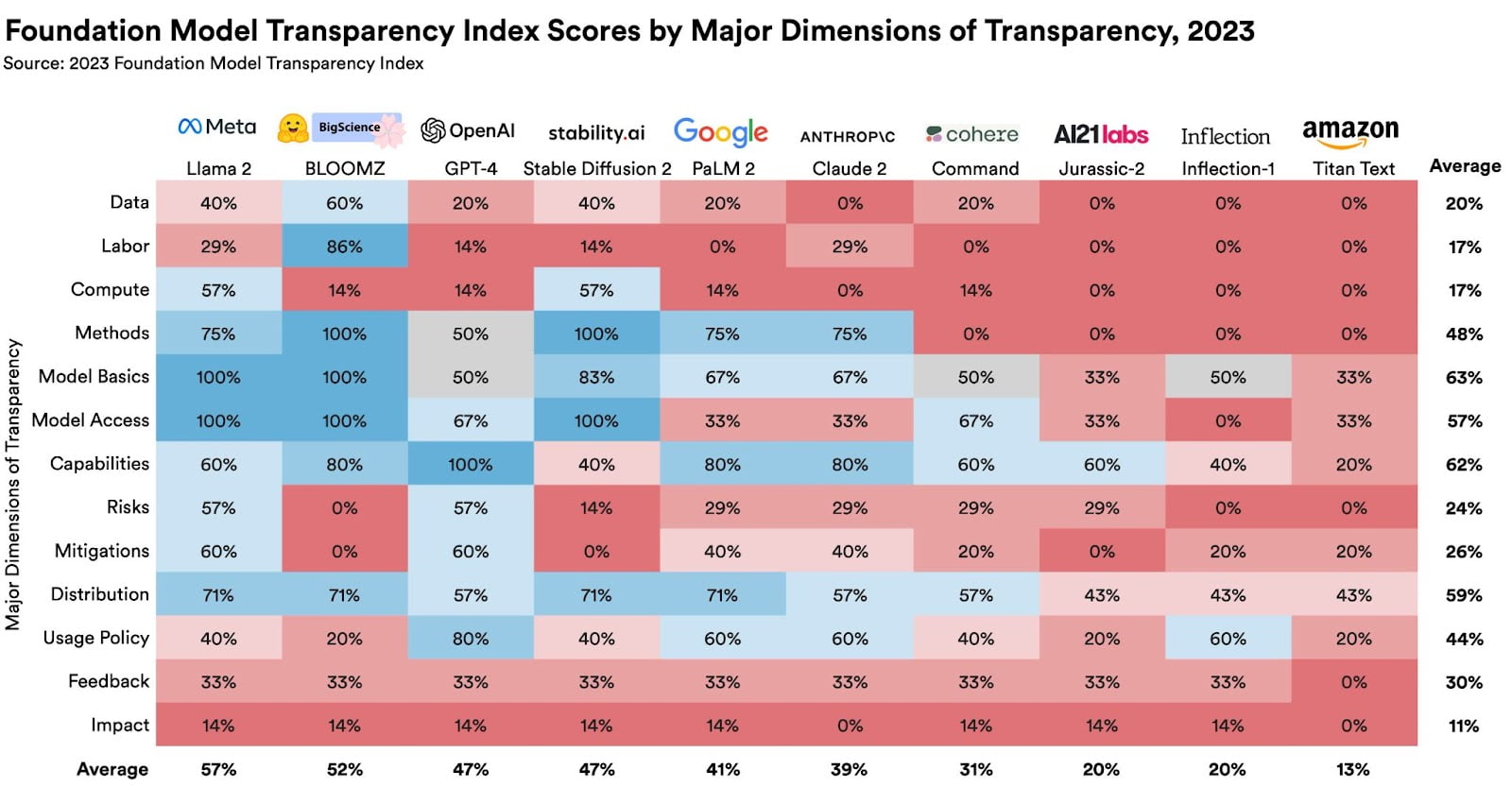

The index examines 100 different aspects of transparency, including how a company creates a foundation model, how it works, and how it is used in downstream applications.

According to the FMTI, most companies, including OpenAI, are becoming less transparent. This makes it harder for businesses, researchers, policymakers, and consumers to understand these models and use them safely.

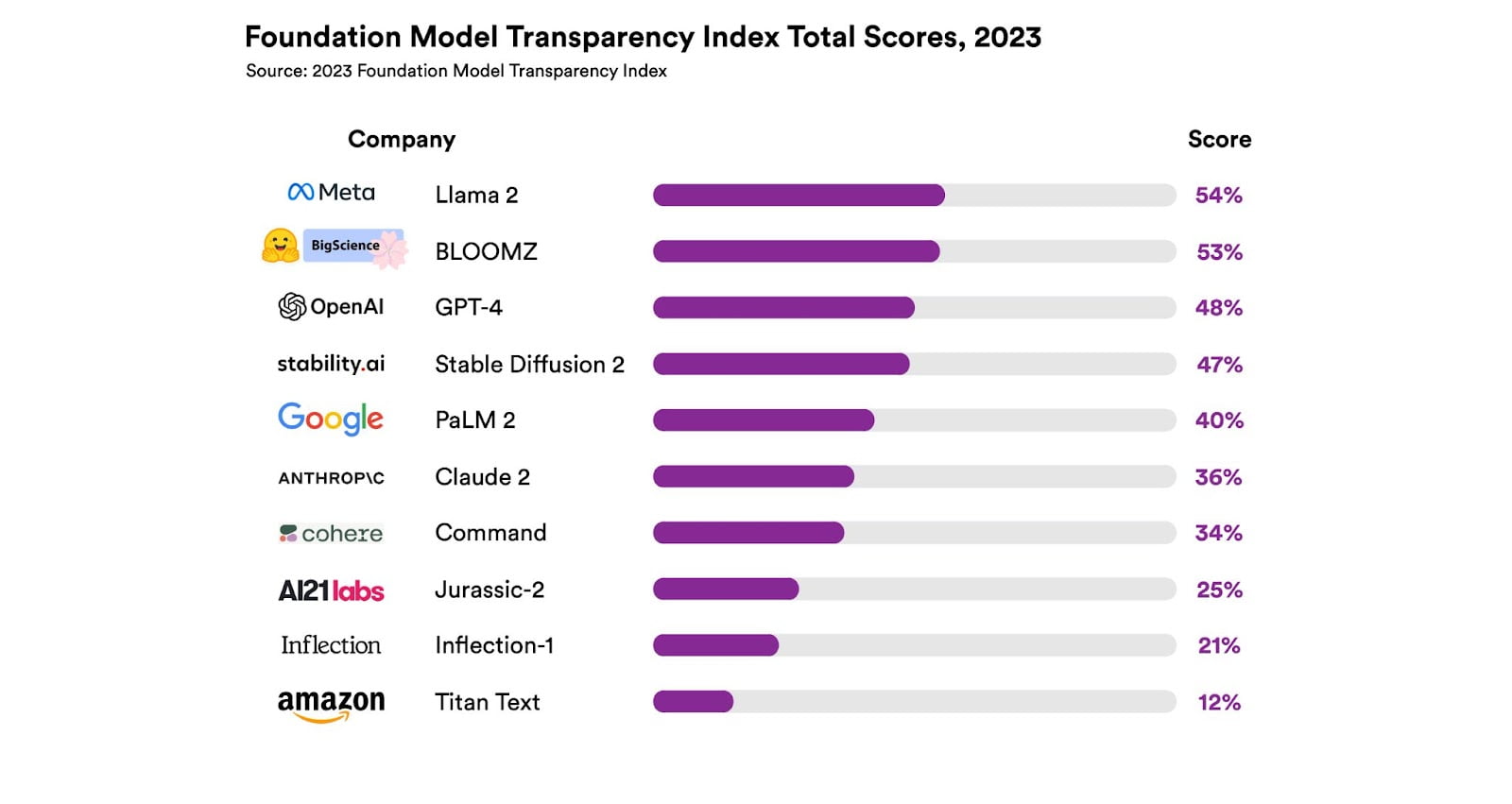

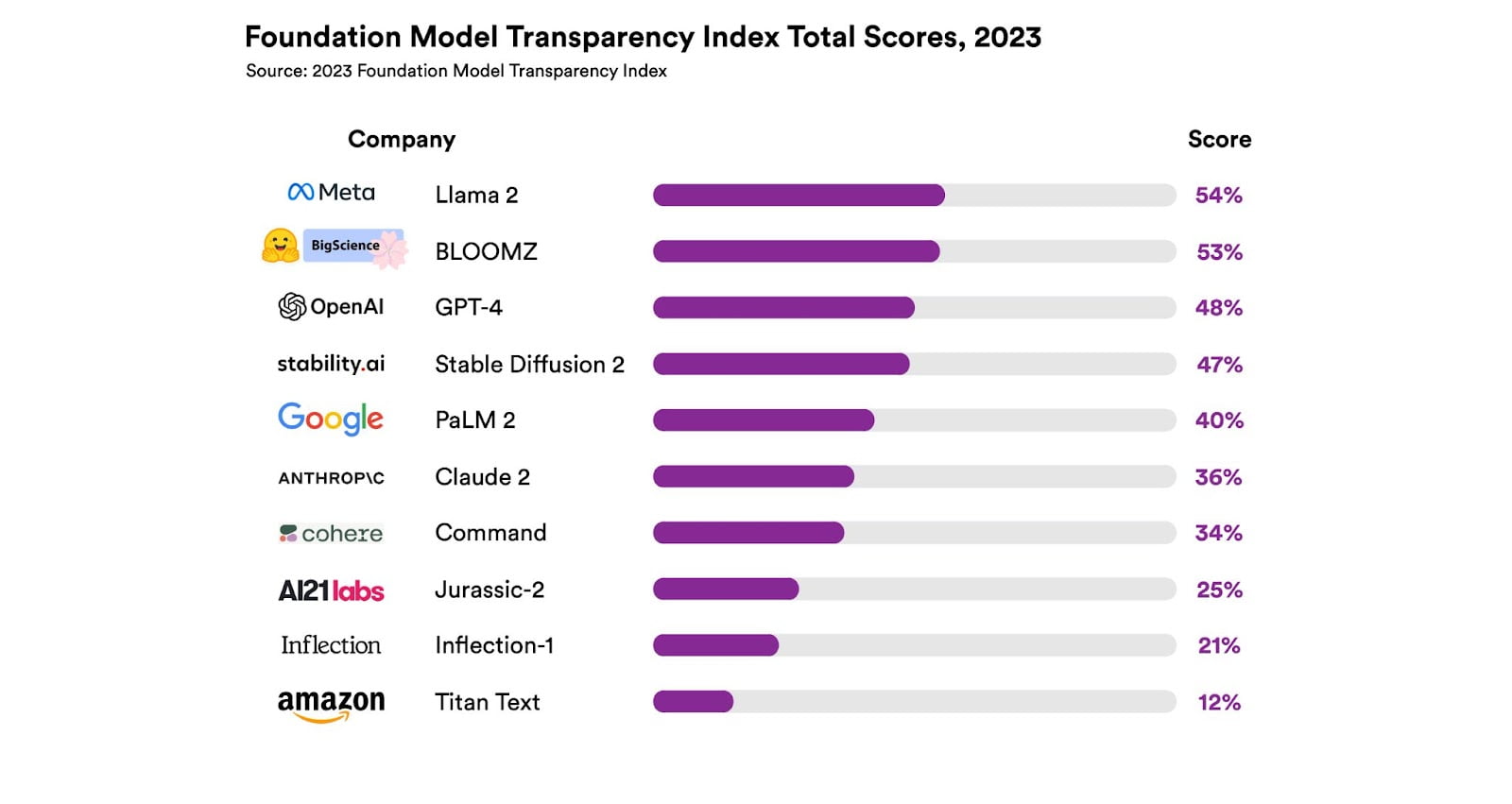

The highest scores ranged from 47 to 54 out of a possible 100 points, which the research team says indicates a significant need for improvement.

The research team used a structured research protocol to gather publicly available information on each company's leading models.

Open source is fuzzy among the opaque

Meta's Llama 2, an open-source model, tops the list but only achieves a transparency score of 54 percent. OpenAI, scorned by the open-source community as "closed" AI, is just behind Llama 2 with its top model GPT-4 at 48 percent. In arguably the most important category, the data used to train the AI, nearly all model providers fail with scores well below 50 percent.

"We shouldn’t think of Meta as the goalpost with everyone trying to get to where Meta is," says Rishi Bommasani, society lead at the Center for Research on Foundation Models (CRFM). "We should think of everyone trying to get to 80, 90, or possibly 100."

The FMTI is designed to help policymakers effectively regulate foundation models and motivate companies to improve their transparency.

"This is a pretty clear indication of how these companies compare to their competitors, and we hope will motivate them to improve their transparency," Bommasani says.

The full methodology and results are available in a 100-page companion document to the index.
