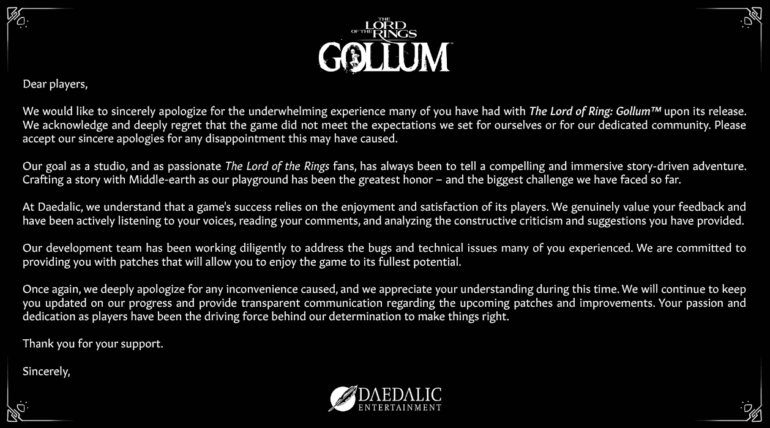

The video game Lord of the Rings: Gollum was so disastrous that the game studio and publisher had to apologize for it. The fact that this apology may have been written using ChatGPT is now an issue. Why is that?

The story is making headlines in both editorial and social media: First, game studio Daedalic Entertainment and publisher Nacon delivered a lousy video game about one of the most popular and well-known characters in the Lord of the Rings saga. Then, according to Game Two sources, Nacon wrote an apology using ChatGPT.

If you are inclined to believe this, you might find clues in a typo in the publisher's apology, which reads "The Lord of Ring" instead of "The Lord of the Rings," and in the fact that the letter sounds rather generic. It is also easy to generate similar-sounding generic apologies with ChatGPT.

However, according to Nacon, the letter was not written with ChatGPT. And that is plausible because the above symptoms are no proof of AI content. PR is often generic and vague to keep doors open and avoid legal problems. And the typo suggests human rather than machine intervention.

In fact, with a few details in the prompt, ChatGPT can write a much better apology than the one Nacon published for Daedalic Entertainment. It also spells "The Lord of the Rings: Gollum" correctly because the title is in the prompt. On its own, ChatGPT does not know the title because the game was released after the model was trained.

Dear Fans of Middle-earth,

I am writing this letter today not as a faceless development team but as fans, just like you, who are deeply passionate about the world J.R.R. Tolkien crafted. It is with a heavy heart that we address the widespread disappointment many of you have expressed regarding "The Lord of the Rings: Gollum."

We embarked on this journey with the vision of creating a unique narrative experience that would delve deeper into the life and struggles of one of Tolkien's most iconic characters. We hoped to provide fans with a new perspective, one that would enrich their understanding and appreciation of Middle-earth. However, based on your feedback, we fell short of the mark, and for that, we are truly sorry.

We acknowledge the shortcomings of the game, and we are taking your feedback seriously. Many have raised concerns about the game mechanics, story decisions, and overall quality, and we understand the pain these issues have caused, especially to a community as dedicated and passionate as this one. We never intended to tarnish the beloved legacy of "The Lord of the Rings."

Our commitment to you is twofold:

- We will immediately work on patches and updates to address some of the most pressing issues you've pointed out. While we cannot change every aspect of the game post-release, we promise to do our best to enhance your experience where we can.

- This experience has been a humbling lesson for us. Moving forward, we will be more cautious, more attentive, and more dedicated to preserving the integrity and spirit of Middle-earth in any future endeavors. We have learned the importance of not just understanding but truly respecting the source material and its fanbase.

We truly hope that in time, with your guidance and feedback, we can earn back your trust and deliver experiences that resonate with the depth, intricacy, and beauty of Tolkien's world.

From the deepest caverns of our hearts, we apologize. We are grateful for your passion and dedication, and we promise to strive for the standards that Middle-earth and its fans deserve.

Sincerely,

[Game Development Team Name]

ChatGPT is equal to zero effort

Much more interesting is the question: why does it matter whether the apology was written by ChatGPT? Surely all that should matter is whether the apology reflects what the apologizing entity wants to communicate.

No one would care if an apology was written by hand, on a laptop, or on a desktop computer. And it would be enough to be angry about the generic content and typos without mentioning ChatGPT.

So why does it matter that ChatGPT may have been used as a typing aid?

The answer comes from a recent study: AI-generated messages can damage relationships because recipients perceive them as a shortcut and as dishonest. The study focused on personal relationships, but its findings also seem to apply to communication between businesses and their customers.

The question remains whether this is a temporary problem, caused by the novelty of these text calculators that we now call AI, that will disappear as their use becomes commonplace.

But the study's lead author, communications professor Bingjie Liu of Ohio State University, says people may be developing an unconscious behavior of scanning messages for AI patterns and devaluing them when they detect such patterns, doing a "Turing test in their mind."