Germany's cybersecurity agency issues new guidelines to protect LLMs from persistent threats

The German Federal Office for Information Security (BSI) warns that even top AI providers are struggling to defend against so-called evasion attacks targeting language models.

In these attacks, malicious instructions are hidden inside otherwise benign content such as websites, emails, or code files. When the AI processes this content, it can be tricked into ignoring security safeguards, leaking data, or carrying out unintended actions.

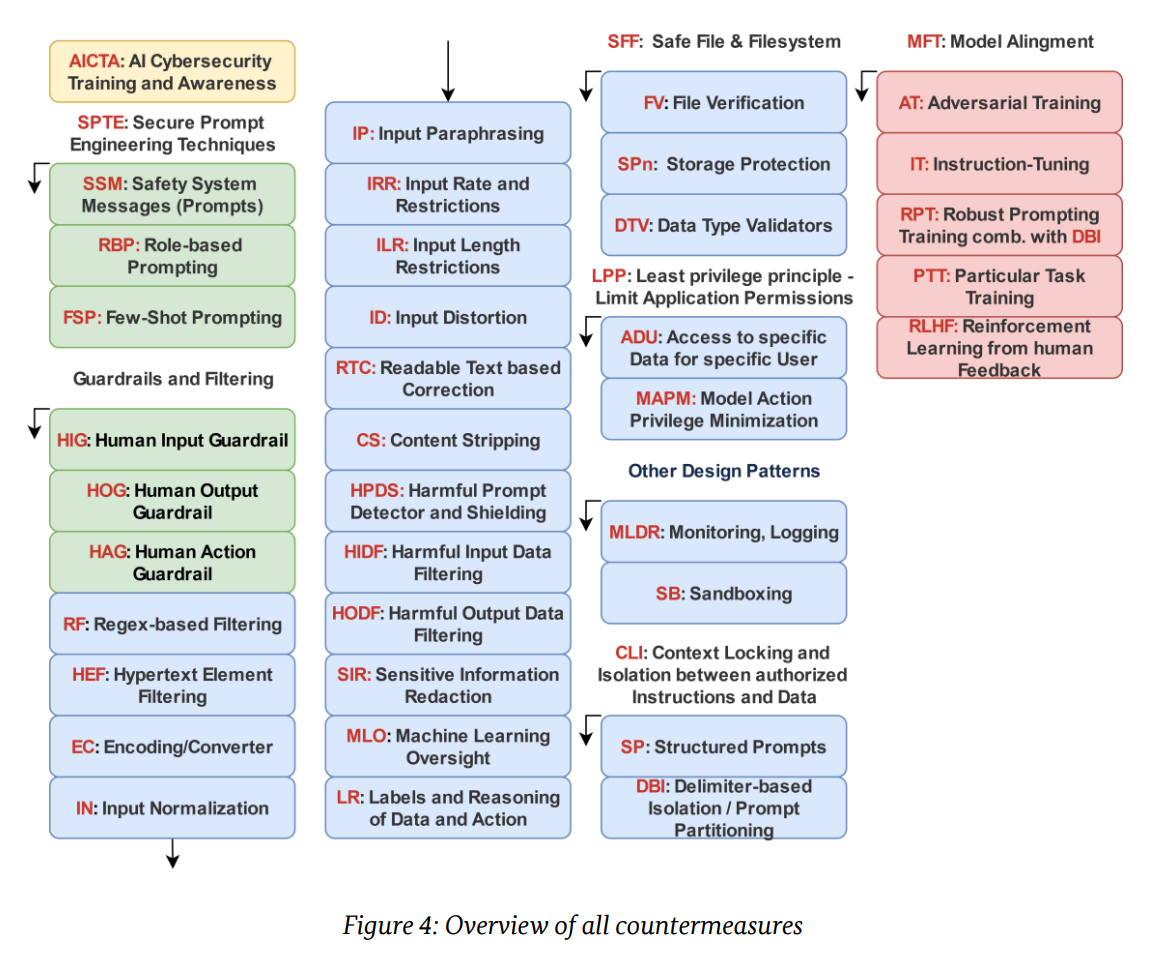

The BSI has released a new guide outlining countermeasures, offering technical filters, secure prompt design strategies, and organizational protections. Still, the agency makes it clear: "However, it must be kept in mind that currently there is no single bullet proof solution for mitigating evasion attacks," the BSI writes.

Agentic AI systems are especially at risk, according to recent studies. In one example, Google's Gemini leaked data after processing a manipulated calendar entry. In another, ChatGPT's Deep Research was compromised by hidden HTML instructions embedded in an email.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.

Subscribe nowAI news without the hype

Curated by humans.

- Over 20 percent launch discount.

- Read without distractions – no Google ads.

- Access to comments and community discussions.

- Weekly AI newsletter.

- 6 times a year: “AI Radar” – deep dives on key AI topics.

- Up to 25 % off on KI Pro online events.

- Access to our full ten-year archive.

- Get the latest AI news from The Decoder.