Google DeepMind presents three new advances in robotics research: AutoRT, SARA-RT and RT-Trajectory.

The new advances are designed to improve the data collection, speed, and generalization capabilities of robots in the real world. The goal is to create robots that can understand and perform complex tasks without having to be trained or built from scratch.

AutoRT: Robot training with large AI models

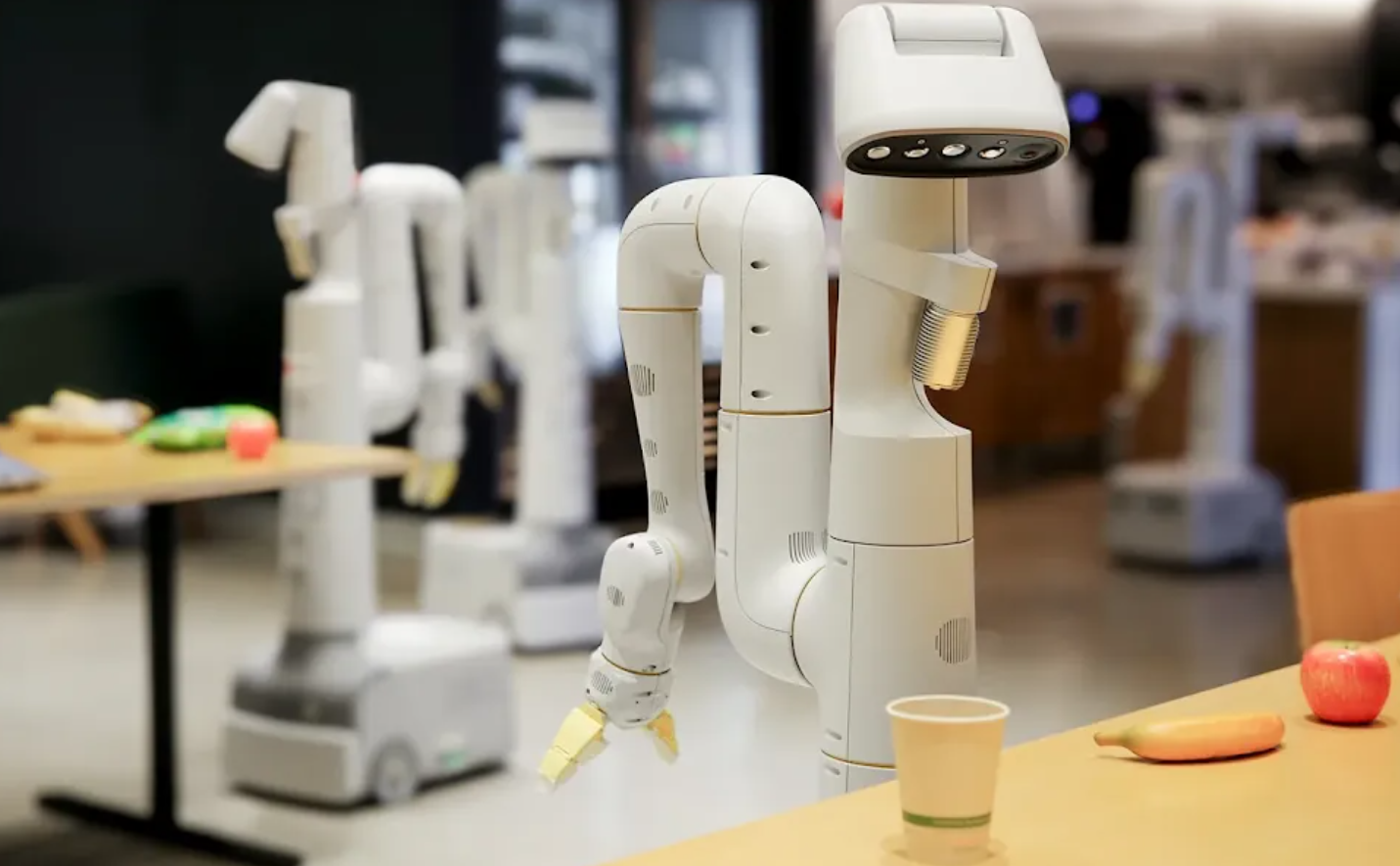

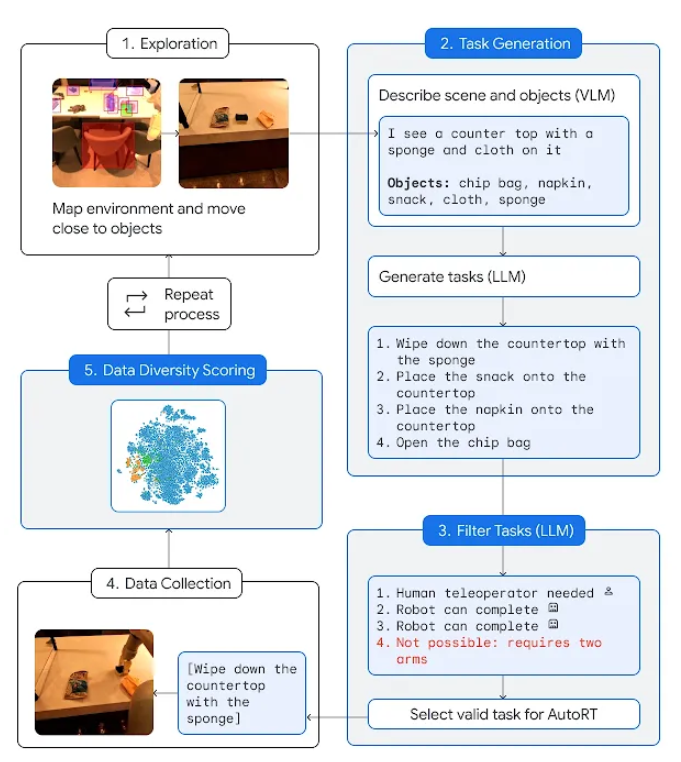

AutoRT uses large AI models such as Large Language Models (LLMs) and Visual Language Models (VLMs) in combination with specialized robot models to scale robot learning and train robots for real-world applications.

AutoRT can teach multiple robots simultaneously to perform different tasks in different environments. A VLM is used to understand the environment and the objects in view, and an LLM is used to suggest and select appropriate tasks for the robot to perform.
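The division of labor described above can be sketched in a few lines of Python. This is only an illustration under loud assumptions: `describe_scene`, `propose_tasks`, and `select_task` are hypothetical stand-ins for the VLM and LLM calls, the "camera image" is a plain string, and none of these names come from Google DeepMind's code.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Robot:
    name: str
    camera_image: str  # stand-in for real image data (assumption)


def describe_scene(image: str) -> List[str]:
    """Hypothetical VLM stub: returns the objects 'seen' in the image."""
    return [item for item in image.split(",") if item]


def propose_tasks(objects: List[str]) -> List[str]:
    """Hypothetical LLM stub: suggests one candidate task per object."""
    return [f"pick up the {obj}" for obj in objects]


def select_task(candidates: List[str]) -> str:
    """Hypothetical LLM stub: picks a task (here, simply the first one)."""
    return candidates[0]


def assign_tasks(robots: List[Robot]) -> Dict[str, str]:
    """Run the describe -> propose -> select loop once for every robot."""
    assignments = {}
    for robot in robots:
        objects = describe_scene(robot.camera_image)
        candidates = propose_tasks(objects)
        if candidates:  # skip robots with nothing useful in view
            assignments[robot.name] = select_task(candidates)
    return assignments


robots = [Robot("robot_a", "sponge,cup"), Robot("robot_b", "bag of chips")]
assignments = assign_tasks(robots)
```

In a real system each stub would be a model call, and the loop would run continuously across the robot fleet rather than once.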

During a seven-month evaluation period, the system safely trained up to 20 robots simultaneously and a total of 52 unique robots. This resulted in a rich dataset of 77,000 robot trials across 6,650 unique tasks.

AutoRT uses safety rules, including a robot constitution, to provide safety guidance to the LLM-based decision-maker when selecting tasks for robots.

The rules are inspired in part by Isaac Asimov's Three Laws of Robotics. Human safety comes first, and robots must not attempt tasks that involve humans, animals, sharp objects, or electrical devices.

In addition, AutoRT uses established safety measures from classical robotics. For example, the robots will stop if the force on the joints exceeds a certain limit.
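The two safety layers, rule-based task screening and a hard force limit, might look roughly like this. It is a deliberately crude sketch: the article describes the constitution as guidance given to the LLM, whereas the keyword filter below is a simple stand-in, and both the keyword list and the 50-newton threshold are invented for illustration.

```python
from typing import List

# Assumed keyword list, loosely mirroring the categories named in the article.
FORBIDDEN_KEYWORDS = ("human", "person", "animal", "knife", "scissors", "outlet")

# Assumed joint-force limit in newtons; the article gives no number.
MAX_JOINT_FORCE_N = 50.0


def is_task_allowed(task: str) -> bool:
    """Reject any task whose description mentions a forbidden keyword."""
    lowered = task.lower()
    return not any(keyword in lowered for keyword in FORBIDDEN_KEYWORDS)


def filter_tasks(tasks: List[str]) -> List[str]:
    """Keep only the candidate tasks that pass the rule check."""
    return [task for task in tasks if is_task_allowed(task)]


def should_stop(joint_forces: List[float], limit: float = MAX_JOINT_FORCE_N) -> bool:
    """Classical safeguard: stop if any joint force exceeds the limit."""
    return any(abs(force) > limit for force in joint_forces)


candidates = ["wipe the table", "hand the knife to the person", "pet the animal"]
safe_tasks = filter_tasks(candidates)
```

The point of keeping the two checks separate is that the force limit works regardless of what the language model decides.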

SARA-RT: Improving the efficiency of robotic transformers

SARA-RT (Self-Adaptive Robust Attention for Robotics Transformers) is a new method designed to make Robotics Transformer (RT) models more efficient.

Using a novel fine-tuning method that Google DeepMind calls "up-training", SARA-RT converts "quadratic complexity to mere linear complexity", reducing the computational cost and increasing the speed of the original model while maintaining the same quality.
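To see where the quadratic and linear costs come from, consider a generic kernelized ("linear") attention sketch. This is not SARA-RT's up-training procedure, whose details the article does not give. It uses the feature map elu(x) + 1, a common choice in the linear-attention literature, and all function names are illustrative. Both functions compute the same output, but the first builds an n × n score matrix while the second only ever builds a d × d summary, so its cost grows linearly with the sequence length n.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of two nested lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def feature_map(a: Matrix) -> Matrix:
    """Positive feature map phi(x) = elu(x) + 1, applied elementwise."""
    return [[x + 1.0 if x > 0 else math.exp(x) for x in row] for row in a]


def quadratic_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Builds the full n x n score matrix: cost grows with n^2."""
    scores = matmul(feature_map(q), transpose(feature_map(k)))  # n x n
    out = matmul(scores, v)
    return [[val / sum(s_row) for val in o_row] for s_row, o_row in zip(scores, out)]


def linear_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Same result, but reorders the products so no n x n matrix is built."""
    phi_q, phi_k = feature_map(q), feature_map(k)
    kv = matmul(transpose(phi_k), v)  # d x d summary of keys and values
    k_sum = [sum(col) for col in zip(*phi_k)]  # column sums of phi(K)
    out = matmul(phi_q, kv)
    norms = [sum(x * s for x, s in zip(row, k_sum)) for row in phi_q]
    return [[val / norm for val in row] for row, norm in zip(out, norms)]


q = [[0.5, -1.0], [1.5, 0.2], [-0.3, 0.8]]
k = [[0.1, 0.4], [-0.7, 1.2], [0.9, -0.5]]
v = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
slow, fast = quadratic_attention(q, k, v), linear_attention(q, k, v)
```

The trick is simply associativity: (φ(Q) φ(K)ᵀ) V equals φ(Q) (φ(K)ᵀ V), and the second grouping avoids the large intermediate matrix.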

"We believe this is the first scalable attention mechanism to provide computational improvements with no quality loss," writes Google DeepMind.

SARA-RT-2 model for manipulation tasks. The robot's actions are conditioned on images and text commands. | Text and Video: Google DeepMind

SARA-RT can be applied to various Transformer models, such as point cloud Transformers that process spatial data from robotic depth cameras. According to Google DeepMind, the method has the potential to massively expand the application of Transformer technology for robots.

RT-Trajectory: Improved robot generalization

RT-Trajectory is a model that automatically adds visual outlines describing robot motions to training videos, helping robots generalize and better understand how to perform tasks.

By overlaying 2D trajectory sketches of the robot arm in training videos, RT-Trajectory provides the model with convenient low-level visual cues as it learns robot control strategies.
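As a rough picture of what "overlaying a 2D trajectory sketch" means, the following Python draws a polyline through a few waypoints onto a tiny image. Everything here is an assumption made for illustration: the image is a grid of integers, the waypoints are (row, column) pixels, and the line is rasterized with Bresenham's algorithm. The article does not describe how RT-Trajectory actually encodes its sketches.

```python
from typing import List, Tuple

Point = Tuple[int, int]  # (row, col) pixel coordinates
Image = List[List[int]]


def line_pixels(start: Point, end: Point) -> List[Point]:
    """Bresenham's line algorithm: integer pixels from start to end, inclusive."""
    (r0, c0), (r1, c1) = start, end
    dr, dc = abs(r1 - r0), abs(c1 - c0)
    step_r = 1 if r1 >= r0 else -1
    step_c = 1 if c1 >= c0 else -1
    err = dc - dr
    pixels = []
    while True:
        pixels.append((r0, c0))
        if (r0, c0) == (r1, c1):
            return pixels
        e2 = 2 * err
        if e2 > -dr:  # step along the column axis
            err -= dr
            c0 += step_c
        if e2 < dc:  # step along the row axis
            err += dc
            r0 += step_r


def overlay_trajectory(image: Image, waypoints: List[Point], marker: int = 1) -> Image:
    """Return a copy of `image` with the polyline through `waypoints` drawn in."""
    canvas = [row[:] for row in image]
    for start, end in zip(waypoints, waypoints[1:]):
        for r, c in line_pixels(start, end):
            canvas[r][c] = marker
    return canvas


frame = [[0] * 5 for _ in range(4)]  # blank 4 x 5 "video frame"
sketched = overlay_trajectory(frame, [(0, 0), (0, 3), (3, 3)])
```

The original frame is left untouched, so the same footage could be paired with several different trajectory sketches.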

In a test on 41 tasks not seen in the training data, an arm controlled by RT-Trajectory more than doubled the performance of existing RT models, achieving a task success rate of 63% compared to 29% for RT-2.

Left: A robot, controlled by an RT model trained with a natural-language-only dataset, is stymied when given the novel task: “clean the table”. A robot controlled by RT-Trajectory, trained on the same dataset augmented by 2D trajectories, successfully plans and executes a wiping trajectory. Right: A trained RT-Trajectory model given a novel task (“clean the table”) can create 2D trajectories in a variety of ways, assisted by humans or on its own using a vision-language model. | Text and Video: Google DeepMind

Google DeepMind envisions a future where these models and systems are integrated to create robots with the motion generalization of RT-Trajectory, the efficiency of SARA-RT, and the rich data collection of models like AutoRT. The ultimate goal of this research is to build more efficient and useful robots.