Google DeepMind introduces RT-2, a new robot control model that learns from both robot data and general web data and combines these two sources of knowledge to generate precise robot instructions.

Since the advent of large language models, robotics researchers have tried to leverage the capabilities of LLMs trained on web data. The general knowledge of these models, combined with their reasoning skills, is valuable for everyday robot control. For example, robots can use the chain-of-thought reasoning common to LLMs to navigate real-world environments more efficiently.

RT-2 combines robot data, language, and vision for multistep and multimodal robot action

The new research builds on Robotics Transformer 1 (RT-1), introduced late last year, the first "large robot model" trained with robot demonstration data collected from 13 robots over a 17-month period in an office-kitchen environment.

Compared with RT-1, RT-2 generalizes better and shows semantic and visual understanding that goes beyond the robot data seen during training. The research team used vision-language models (VLMs) based on PaLM-E and PaLI-X. As a result, RT-2 can derive commands from a combination of visual and textual input, whereas SayCan, for example, relies solely on language.

For RT-2, Google DeepMind combines RT-1 with a vision-language model trained on web data. | Video: DeepMind

This improved generalization is achieved through a three-step approach. First, RT-2 learns from web data, which provides the model with language foundations and everyday logic.

Second, it learns from robot data, which provides the model with a practical understanding of how it should interact with the world.

Finally, by combining these two sets of data, RT-2 can understand and generate precise commands for robot control based on real-world scenarios.

With RT-2, robots are able to learn more like we do — transferring learned concepts to new situations. Not only does RT-2 show how advances in AI are cascading rapidly into robotics, it shows enormous promise for more general-purpose robots.

Google DeepMind

For example, when older systems are asked to dispose of garbage, they must be explicitly trained on what garbage is, how to recognize it, and how to collect and dispose of it. In contrast, RT-2 can draw on the extensive knowledge it acquired from web data to identify and dispose of garbage, and can even perform actions for which it has not been explicitly trained. This includes abstract concepts, such as understanding when a previously useful item (such as a banana peel or a bag of chips) becomes trash.

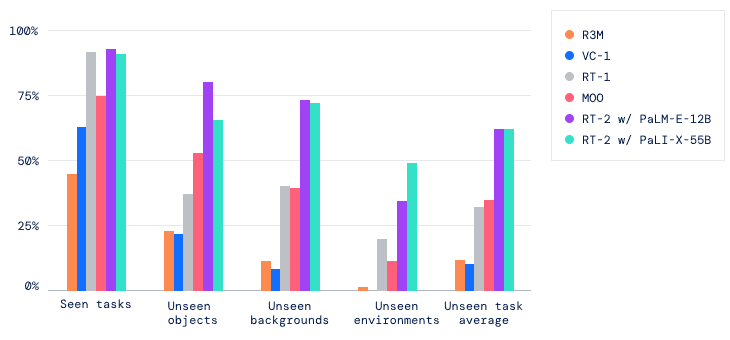

RT-2 is better at unfamiliar tasks

The research team also shows that RT-2 is capable of multistep reasoning using the "chain-of-thought" technique. For example, the robot can reason about why a rock is a better improvised hammer than a piece of paper, or why a tired person might need an energy drink, and make better decisions as a result.

By transferring acquired knowledge to new scenarios, RT-2 makes robots more adaptable to different environments. In more than 6,000 robot tests, RT-2 matched the performance of its predecessor, RT-1, on tasks seen in training and made great strides on unseen tasks, where the success rate nearly doubled from 32 percent to 62 percent.