Google DeepMind's new Gemma 2 model outperforms larger LLMs with fewer parameters

Google has unveiled updates to its Gemma 2 family of open-source language models, focusing on improved performance, safety, and transparency.

The highlight is a new compact 2-billion-parameter model that matches or exceeds the capabilities of much larger models. Gemma-2-2B demonstrates impressive performance despite its small size.

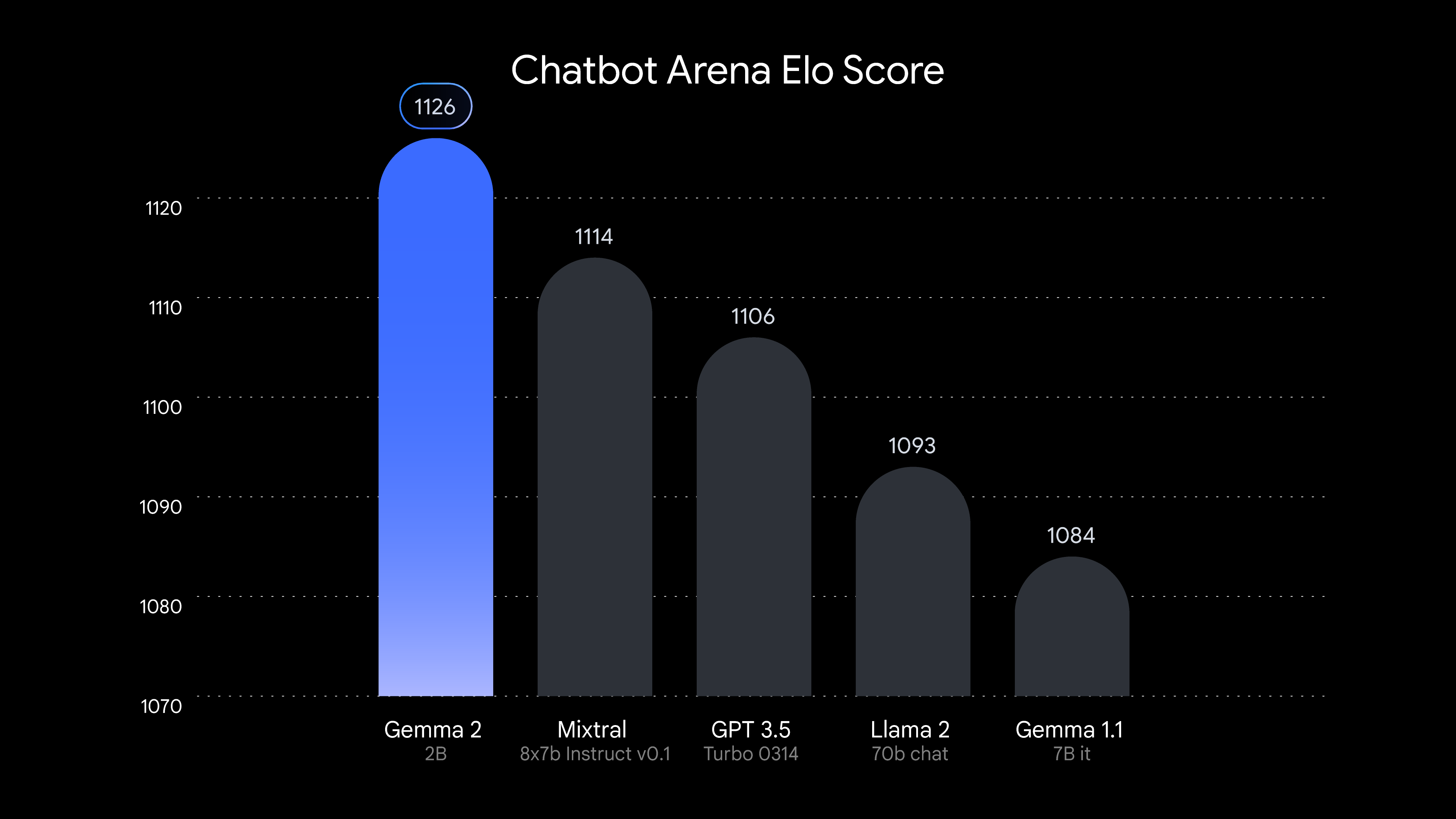

Google claims it outperforms some larger GPT-3.5-level models, including Mixtral-8x7B, in the LMSYS Chatbot Arena rankings. Most notably, it surpasses LLaMA-2-70B, which has 35 times as many parameters.

Its efficiency allows Gemma-2-2B to run on a wider range of less powerful devices. It joins the previously released 9 billion and 27 billion parameter versions of Gemma 2.
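To make the device argument concrete, here is a rough back-of-the-envelope sketch of how much memory the weights alone would need at common numeric precisions. It assumes the nominal parameter counts implied by the model names (2, 9, 27, and 70 billion) and counts only the weights, ignoring activations, the KV cache, and runtime overhead. The function name and the dictionaries are illustrative and not part of any Google tooling.

```python
# Rough weight-only memory estimate.
# Assumption: parameter counts are the nominal figures from the model names,
# not exact counts published by the model authors.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}

NOMINAL_PARAMS = {
    "Gemma-2-2B": 2e9,
    "Gemma-2-9B": 9e9,
    "Gemma-2-27B": 27e9,
    "LLaMA-2-70B": 70e9,
}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Approximate memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    for name, num_params in NOMINAL_PARAMS.items():
        sizes = ", ".join(
            f"{dtype}: {weight_memory_gb(num_params, dtype):.1f} GB"
            for dtype in BYTES_PER_PARAM
        )
        print(f"{name:12s} {sizes}")
```

Under these assumptions, a 2-billion-parameter model needs roughly 4 GB for its weights at 16-bit precision, while a 70-billion-parameter model needs roughly 140 GB, which is the same factor of 35 as the parameter counts.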

Google DeepMind's approach aligns with a recent trend in language models: While top performance has plateaued around GPT-4 levels, newer models achieve similar results much more efficiently.

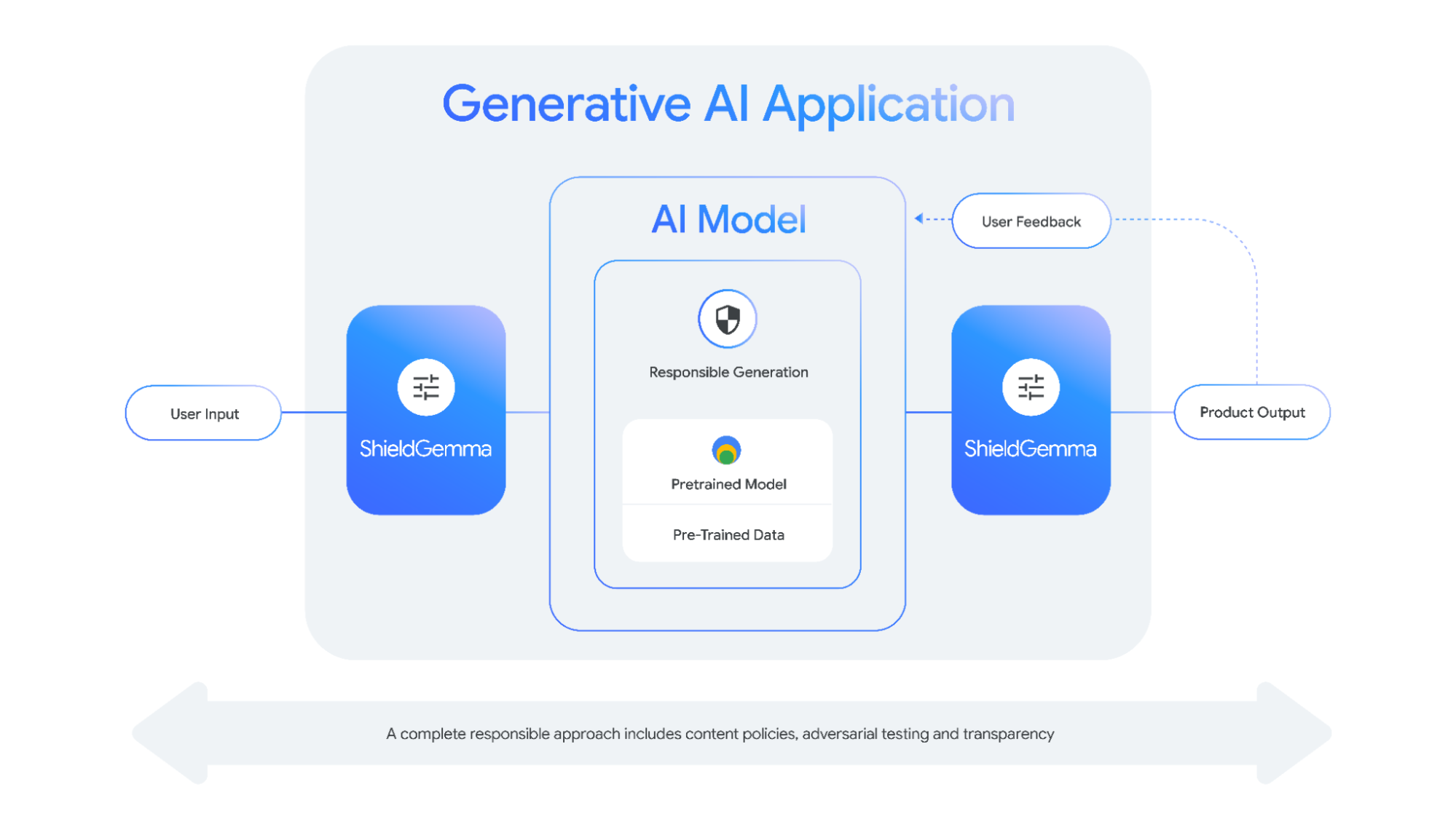

To improve safety, Google has introduced ShieldGemma, a set of content filtering classifiers based on Gemma 2. Available in 2, 9, and 27 billion parameter versions, these classifiers aim to detect and mitigate harmful content in AI inputs and outputs, focusing on hate speech, harassment, sexually explicit material, and dangerous content.

Gemma Scope aims to help better understand AI decisions

Google has also launched Gemma Scope, a tool designed to bring greater transparency to AI. It provides insight into the decision-making processes of Gemma 2 models, helping researchers better understand how the models recognize patterns, process information, and make predictions.

Gemma-2-2B is now available on platforms including Kaggle, Hugging Face, and Vertex AI Model Garden, and can be tried out in Google AI Studio or on the free tier of Google Colab. ShieldGemma and Gemma Scope are also freely accessible.

Google DeepMind initially released Gemma as an open-source model family in February 2024.
