Google Dreamix: This AI video editor can animate your teddy bears

Google's AI video editor Dreamix can modify videos by prompt or generate new videos directly from a single image.

Generative AI models that generate images or videos from text descriptions have made great strides in the past year thanks to diffusion models. After image models like OpenAI's DALL-E 2, Stable Diffusion and Google's Imagen, tech giants Meta and Google showed off video models like Make-A-Video or Imagen Video a few months later.

But while new methods like Prompt-to-Prompt or InstructPix2Pix, for example, make Stable Diffusion applicable to image processing, video models have so far been limited to synthesis.

Google's Dreamix is an AI video editor

Researchers at Google are now demonstrating Dreamix, a diffusion-based AI video editor that can modify existing videos using text descriptions or generate new videos from an initial image.

For video editing, Dreamix noises up the source images and passes them to a video diffusion model, which then generates new images from the noisy source images based on text prompts and assembles them into a video.

The source images thus provide a kind of sketch that captures, for example, the shape of an animal or its movements while leaving enough room for changes.

Video: Google

In addition to editing existing videos, Dreamix can also generate new videos. Google shows two applications: Video synthesis from a single image first generates additional images by making slight changes, such as in the pose of the object, and then applies them to the video model.

Video: Google

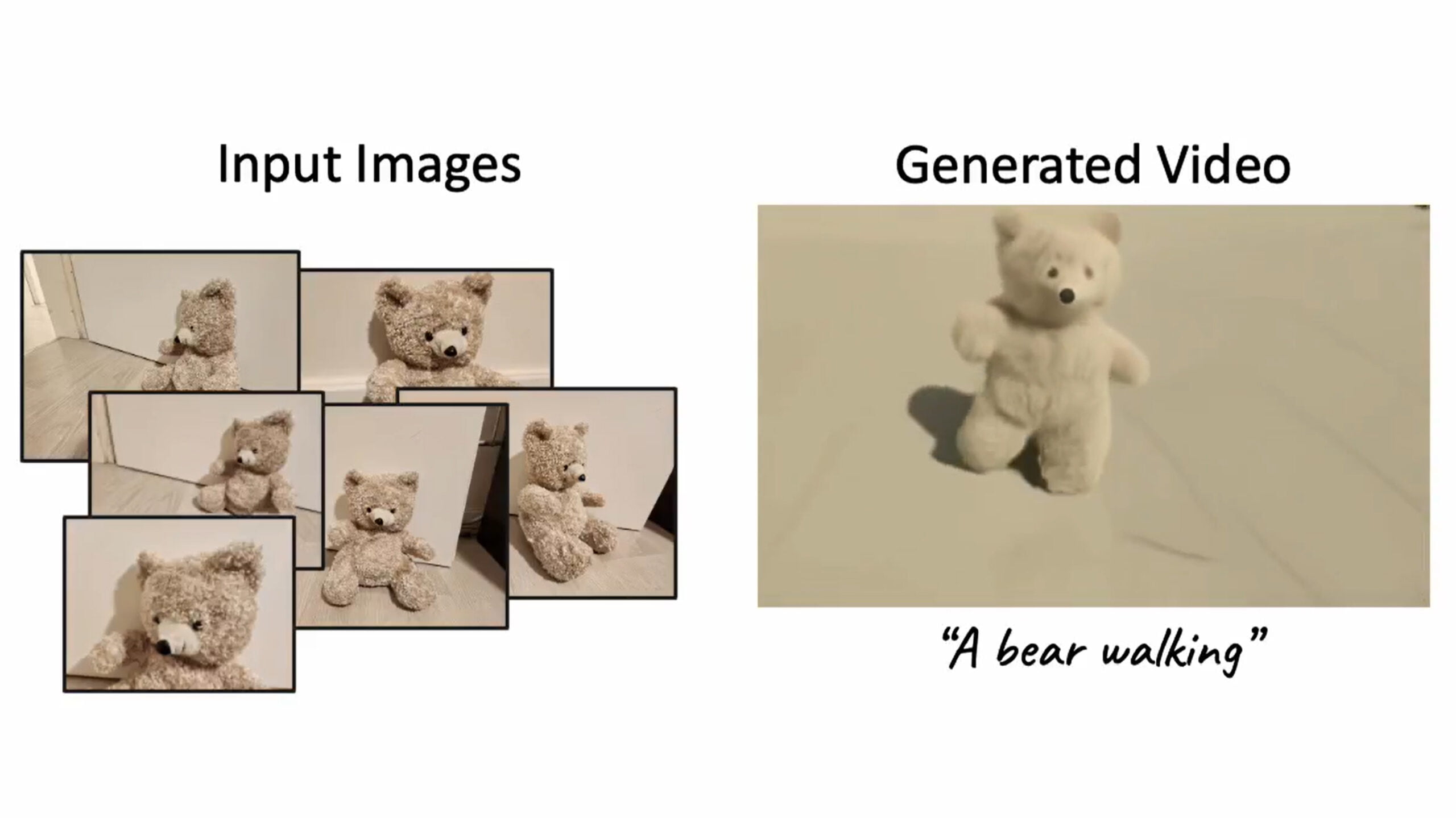

In addition, Dreamix can also generate subject-centric videos, where multiple images of, for example, a toy figure are used to create a video in which the game figure lifts weights.

Video: Google

Google Dreamix lays the foundation for a commercial product

In the videos shown by Google, Dreamix keeps the themes of the video templates, turns a road into a river and has it interact realistically with the tires of a car, or creates a short video with a teddy bear from several photos of it.

Video: Google

Most noticeable when modifying existing videos is the temporal stability gained from the template, which is still a problem with completely newly generated videos, such as those from Imagen Video.

In addition to the quality that needs to be improved, Google sees room for improvement in the required compute power for the used video diffusion models, as well as in the training data and evaluation models used.

The central goal of the work is to "advance research on tools to enable users to animate their personal content," the paper says.

By allowing them to use their own videos and images, Dreamix users could better align AI-generated content with their intentions, despite the biases present in diffusion models, according to Google. On the other hand, "malicious parties may try to use edited videos to mis-lead viewers or to engage in targeted harassment."

Video: Google

Further examples can be found on the Dreamix project page. Google is not currently planning to release the model.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.

Subscribe nowAI news without the hype

Curated by humans.

- Over 20 percent launch discount.

- Read without distractions – no Google ads.

- Access to comments and community discussions.

- Weekly AI newsletter.

- 6 times a year: “AI Radar” – deep dives on key AI topics.

- Up to 25 % off on KI Pro online events.

- Access to our full ten-year archive.

- Get the latest AI news from The Decoder.