Google opens updated Deep Research Agent to developers with new API

Key Points

- Google has unveiled an upgraded Deep Research Agent and, for the first time, is making it available to developers through a new API.

- The agent independently writes search queries, evaluates the results, and refines its searches to find relevant information.

- The newly introduced Interactions API is designed to make building AI agents easier, with server-side state management and support for the Model Context Protocol.

Google releases a more powerful version of its Deep Research Agent and opens it to developers for the first time. The company also introduces a new open-source benchmark for complex web searches.

Google is making its Deep Research Agent available to developers. For the first time, they can embed Google's most capable autonomous research features directly into their own apps. Google Deep Research first launched in the Gemini app in late 2024.

The agent takes an iterative approach to research: it formulates search queries, reads the results, spots knowledge gaps, and searches again until it reaches a satisfactory answer. The new version is more powerful than its predecessor and slightly outperforms the web search capabilities of the recently released Gemini 3 Pro.
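To make that loop concrete, here is a minimal sketch of the query, read, find-gaps, search-again cycle. It is not Google's implementation: the toy index, `search`, `formulate_query`, `ResearchState`, and `research` are all hypothetical names, and a real agent would use a model to write queries and judge coverage instead of fixed string prefixes.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical toy search index: maps an exact query string to the snippets it returns.
TOY_INDEX: dict[str, list[str]] = {
    "deep research api": ["available to developers through a new API"],
    "deep research model": ["reasoning core runs on Gemini 3 Pro"],
    "gemini deep research launch": ["first launched in the Gemini app in late 2024"],
}


def search(query: str) -> list[str]:
    """Stand-in for a web search call; returns an empty list on a miss."""
    return TOY_INDEX.get(query, [])


def formulate_query(topic: str, attempt: int) -> str:
    """Hypothetical query writer: each retry rephrases the query more specifically."""
    prefixes = ["deep research", "gemini deep research"]
    return f"{prefixes[min(attempt, len(prefixes) - 1)]} {topic}"


@dataclass
class ResearchState:
    required_topics: list[str]  # what a complete answer must cover
    findings: dict[str, list[str]] = field(default_factory=dict)
    queries: list[str] = field(default_factory=list)  # every query issued, in order

    def gaps(self) -> list[str]:
        """A topic counts as a knowledge gap until some search returned results for it."""
        return [t for t in self.required_topics if not self.findings.get(t)]


def research(topics: list[str], max_rounds: int = 5) -> ResearchState:
    state = ResearchState(required_topics=list(topics))
    attempts = {t: 0 for t in topics}
    for _ in range(max_rounds):
        open_gaps = state.gaps()
        if not open_gaps:
            break  # no gaps left: treat the answer as satisfactory
        for topic in open_gaps:
            query = formulate_query(topic, attempts[topic])
            attempts[topic] += 1
            state.queries.append(query)
            results = search(query)  # "read" the results
            if results:
                state.findings.setdefault(topic, []).extend(results)
    return state
```

Calling `research(["api", "model", "launch"])` covers the first two topics in round one, misses on "deep research launch", refines that query to "gemini deep research launch" in round two, and stops in round three once no gaps remain. The `max_rounds` cap stands in for the budget any such agent needs so that an unanswerable topic cannot loop forever.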

Google says the agent's reasoning core runs on Gemini 3 Pro, which the company says was trained specifically to reduce hallucinations and maximize report quality on complex tasks. AI errors remain a weakness of all current deep research systems. While the results can't be fully trusted, Deep Research can still be useful for exploratory source gathering.

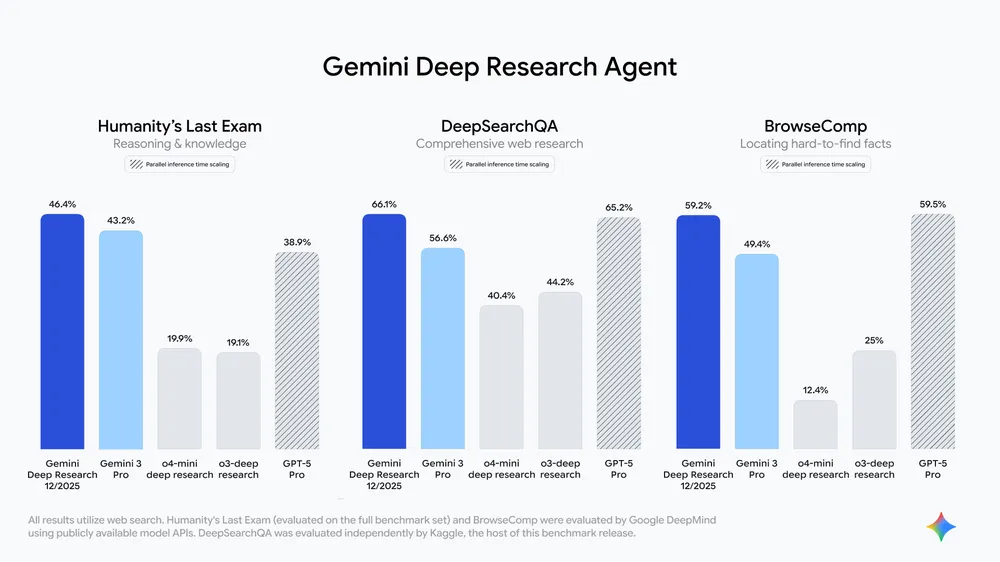

New benchmark scores show where Google stands

Google claims the new Deep Research agent achieves state-of-the-art results on several benchmarks: 46.4 percent on the full Humanity's Last Exam (HLE), 66.1 percent on the new DeepSearchQA, and 59.2 percent on BrowseComp. The agent is also optimized to produce well-researched reports at lower cost.

Alongside the agent update, Google is releasing DeepSearchQA, a new open-source benchmark. The company argues that existing benchmarks often fail to capture the complexity of real, multi-step web searches. DeepSearchQA includes 900 hand-crafted causal chain tasks across 17 subject areas, where each step depends on the previous analysis.

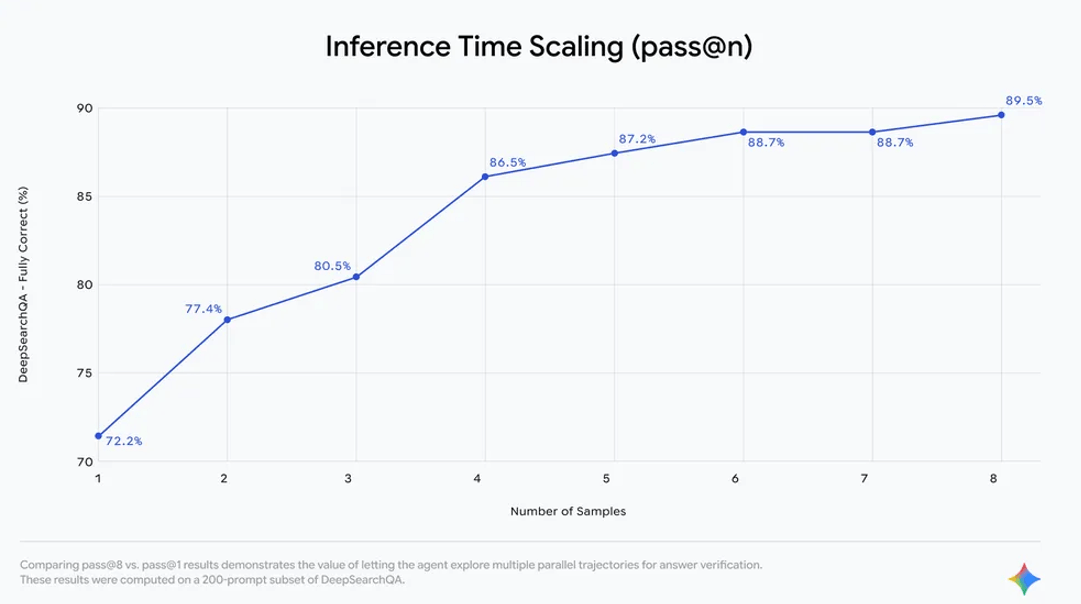

Unlike traditional fact-based tests, DeepSearchQA measures answer completeness. The benchmark evaluates both search precision and retrieval recall, serving as a diagnostic tool for measuring the benefits of extended thinking time. Google provides the dataset and leaderboard along with a technical report.
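The difference between precision and recall is easiest to see with set-valued answers. The sketch below assumes an answer is a list of items matched against a gold list by case- and whitespace-insensitive exact match; that matching rule and the names `normalize`, `score_answer`, and `Score` are assumptions for illustration, not DeepSearchQA's official grader.

```python
from typing import Iterable, NamedTuple


class Score(NamedTuple):
    precision: float
    recall: float
    f1: float


def normalize(item: str) -> str:
    """Case- and whitespace-insensitive comparison key (an assumed matching rule)."""
    return " ".join(item.lower().split())


def score_answer(predicted: Iterable[str], gold: Iterable[str]) -> Score:
    pred = {normalize(p) for p in predicted}
    ref = {normalize(g) for g in gold}
    hits = len(pred & ref)
    # Precision: share of returned items that are correct.
    precision = hits / len(pred) if pred else 0.0
    # Recall: share of required items that were found (the "completeness" side).
    recall = hits / len(ref) if ref else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return Score(precision, recall, f1)
```

For a made-up question whose gold answer is `["Paris", "Lyon", "Marseille", "Nice"]`, the response `["Paris", "lyon ", "Berlin"]` scores a precision of 2/3 but a recall of only 1/2: it is mostly right, yet incomplete. A purely fact-based test that checks one answer would miss that gap.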

Developers get access to features like PDF, CSV, and document analysis, controllable report structures, granular source citations, and JSON schema outputs. Future updates will add native chart generation and expanded Model Context Protocol (MCP) support for custom data sources.

The new Deep Research will soon roll out to Google Search, NotebookLM, and Google Finance.

New Interactions API targets complex agent workflows

Alongside the Deep Research update, Google is launching the Interactions API, a standardized interface for working with models like Gemini 3 Pro and agents like Deep Research. The API is available as a public beta through Google AI Studio with a Gemini API key. It also adds yet another interface standard for developers to navigate in an already complex AI landscape.

Google explains that the existing generateContent interface was built for simple request-response text generation, while newer model capabilities like "thinking" and advanced tool use called for a native interface. The generateContent API will remain available for standard production workloads. The Interactions API is still in beta and may change, Google notes.

The new interface brings several features for complex agent applications: optional server-side state management, an interpretable data model for nested messages, background execution for long-running inference loops, and support for the Model Context Protocol (MCP). Google previously announced that MCP would become a core part of its cloud infrastructure.
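What "server-side state management" buys a developer can be shown with a toy model: instead of resending the whole transcript on every call, the client sends only the new message plus the ID of the previous interaction, and the server reconstructs the context. The class and parameter names below (`ToyStatefulServer`, `create`, `previous_id`) are hypothetical and do not describe the Interactions API's actual interface; the "model reply" is a placeholder string.

```python
import uuid
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class Turn:
    role: str  # "user" or "model"
    text: str


class ToyStatefulServer:
    """Hypothetical in-memory stand-in for a server that stores conversation state."""

    def __init__(self) -> None:
        self._histories: Dict[str, List[Turn]] = {}

    def create(self, user_input: str, previous_id: Optional[str] = None) -> Tuple[str, str]:
        # Look up earlier turns server-side; an unknown ID raises KeyError.
        history = [] if previous_id is None else list(self._histories[previous_id])
        history.append(Turn("user", user_input))
        # Placeholder for model output: just numbers the user turns.
        reply = f"reply #{sum(t.role == 'user' for t in history)}"
        history.append(Turn("model", reply))
        new_id = str(uuid.uuid4())
        self._histories[new_id] = history
        return new_id, reply

    def history(self, interaction_id: str) -> List[Turn]:
        return list(self._histories[interaction_id])


server = ToyStatefulServer()
first_id, _ = server.create("Summarize DeepSearchQA.")
# The client sends only the new message plus the previous ID, not the full transcript.
second_id, reply = server.create("Compare it with BrowseComp.", previous_id=first_id)
print(reply)  # reply #2
print(len(server.history(second_id)))  # 4
```

Each call stores a new snapshot under a fresh ID rather than mutating the old one, so a client could branch from any earlier interaction. This is one plausible design under these assumptions, not a description of how Google stores state.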
